Wireless

An Inside Look: Creating Seamless Networks with David Debrecht

Key Points

- CableLabs works with its member operators and the vendor community to advance wireless network technologies and enable high-performing wireless connections.

What will it take to achieve adaptive, seamless connectivity across networks? CableLabs is leading the charge to reach this goal through new technology development, technology specifications and standards, and working groups that promote industry alignment.

In a new “Inside Look” video, David Debrecht, vice president of Wireless Technologies, talks about how the CableLabs Technology Vision seeks to drive industry alignment and interoperability across networks.

“The Tech Vision brings the industry together and defines where we're going. It gives us targets in technology and network usage so we can develop strategies to meet network needs of the future,” Debrecht said.

As the industry transitions from the speed era to the adaptive era, one of the key focus areas in the Technology Vision is Fixed-Mobile Convergence & Wireless. Together with operators and vendors, we are working to provide stable, interoperable wireless connections that deliver a consistent connectivity experience for the user.

Check out the video to learn how we’re bringing industry stakeholders together with CableLabs working groups that help us get more done, share ideas and innovation and create viable solutions that move us closer to seamless connectivity.

“I would definitely encourage people to get involved in the working groups that we have set up,” Debrecht said.

Join us!

Events

Get Connected, Get Inspired, Get Ready… for SCTE TechExpo 2024

Key Points

- SCTE TechExpo 2024 — the Americas’ largest broadband event — takes place Sept. 24–26 in Atlanta.

- CableLabs operator members receive complimentary Full Access passes, which include entry to all sessions and a lounge.

Get ready for a broadband event like no other! Each year, SCTE TechExpo sets the agenda for what’s next in broadband technology with a packed three-day agenda including a line-up of inspiring headliners and technical speakers, thousands of influential global attendees to connect with and the latest technology to explore.

Practical Insights to Inspire Your Innovation Agenda

Nearly 100 hours of content spread across nine dedicated conference tracks will ensure you get the most out of the conference, on the topics that matter to you. Here are some highlights of CableLabs sessions that you won’t want to miss:

- The Opening Headliners kick off the conference on Tuesday, Sept. 24. CableLabs’ CEO Phil McKinney joins executives from Cable One, Cox Communications, Liberty Latin America and SCTE to set a bold vision for the future of broadband.

- Artificial intelligence (AI) is all around us, so it’s no surprise it features heavily in the agenda. Catch CableLabs sessions on Making the Most of AI in Broadband Networks with Karthik Sundaresan, distinguished technologist and director of hybrid fiber coax (HFC) solutions at CableLabs, along with AWS, Cox and Rogers; and Driving a Proactive Approach to AI-Powered Security with Kyle Haefner, Ph.D., principal architect at CableLabs, and Charter Communications.

- Shining the spotlight on Wireless & Convergence, John Bahr, a CableLabs distinguished technologist, joins Cox, Nokia and Rogers to uncover Seamless Connectivity: Anytime, Anyplace, Anywhere, and CableLabs’ Roy Sun, Ph.D., principal architect, and Dorin Viorel, distinguished technologist, tell you Everything You Ever Wanted to Know About Fixed Wireless Access (FWA)… but Were Afraid to Ask!

- NaaS is where it's at! Hear how to grow revenues by fostering a new approach to connectivity in the API-Powered NaaS session with CableLabs’ Shafi Khan, lead software engineer, along with Charter, Comcast and Izzi.

- Hear how to leverage cost savings with the latest technology with Charter, Comcast and Teleste in The What, Why and How of Using Digital Twins, moderated by Greg White, distinguished technologist, also of CableLabs.

- Technology policy takes center stage in A Year After the Artificial Intelligence Executive Order: Current Status & What’s Ahead as Priya Shrinivasan, CableLabs’ director of Technology Policy, and a panel of government experts lift the lid on what President Joe Biden’s executive order on AI means for the broadband industry.

- In CMO Insights: The North American Perspective, hear how the industry is meeting the demands of new users and building marketing strategies to suit a changing audience.

- In Growth & Transformation—Lessons from the Video Game Industry, Anju Ahuja, CableLabs’ vice president of Product Strategy Insights, talks with Sony Interactive Entertainment about what can be learned from the gaming industry on competitive differentiation, launching new products and entering new markets.

- Lastly, don’t miss the CableLabs-hosted Masterclass: Cultivating Your Ideation Toolkit and Innovation Mindset, on Monday, Sept. 23, as part of SCTE TechExpo’s newly launched Human Factor track.

Explore the detailed agenda here.

Stay Informed, Get Connected

SCTE TechExpo is a unique opportunity to fuel innovation, discover the latest trends and advancements in broadband technology, and explore new opportunities for growth and collaboration with peers who share a passion for the industry.

CableLabs operator members receive complimentary Full Access passes, which include entry to all sessions and a lounge for meetings and networking, away from the buzz of the main conference.

Plus, visit us on the show floor at booth #1547 to learn more about CableLabs’ Technology Vision and the benefits of CableLabs and SCTE membership. In our booth theater, sit in on demos covering topics ranging from AI and security to SCTE chapters and standards.

Be part of the community shaping the future of connectivity, and register today!

Technology Vision

Shaping the Future: Developing a Healthy Ecosystem That Drives Technology Innovation

Key Points

- CableLabs invites our members, the vendor community and other industry stakeholders to join us in working to achieve the three pillars of our Technology Vision: seamless connectivity, network platform evolution and pervasive intelligence, security and privacy.

- Working groups bring members and others together to focus on industry challenges, priorities and technologies.

- We regularly host interoperability events to enable participants to work together on specific solutions and goals.

The future of broadband hinges on seamless, adaptive connectivity. CableLabs’ Technology Vision establishes the framework that will get us there by driving innovation and collaboration among our members, industry vendors and other stakeholders.

As we take the next step toward a future of context-aware connectivity and adaptive networks, the industry needs a healthy, collaborative ecosystem built on a shared vision of interoperability.

What will that look like? It starts with working together toward common goals.

What Should a Healthy Ecosystem Look Like?

As technology has evolved, user experience has become the most important driver of customer satisfaction. Users want networks that work effortlessly, anywhere and all the time. To achieve this vision of seamless connectivity, industry stakeholders must work together to:

Drive and Develop New Technologies

The technology to support seamless user experiences is still being developed, with much work remaining in areas like individual network performance, optimal hand-offs between networks, responsive self-configuration and pervasive sensing. These complex challenges must be solved before we can realize the promise of true interoperability and seamless connectivity.

For example, one of the most exciting technologies emerging today is Network as a Service (NaaS), a solution that creates consistency across operators using a common set of APIs. At this year’s MWC Barcelona, we saw many examples of NaaS including the CableLabs demonstration of potential use cases.

With NaaS still in its early stages, CableLabs seeks to build on these developments through working groups and collaborative projects, with the goal of unconstrained, intelligent networks that fade into the background of the user experience.

Build a Robust, Reliable Infrastructure

Seamless connectivity requires an extensive infrastructure that can maintain optimal network performance no matter where a user is. For example, coherent passive optical network (CPON), DOCSIS, 5G and Wi-Fi 6 and 7 technologies have all made significant progress, empowering faster speeds, lower latency and better reliability. However, advancements are still needed in areas like fixed network architectures, optics solutions, network telemetry and automation.

Encourage Collaboration to Ensure Alignment, Interoperability and Scalability

The new era of intelligent, adaptive networks and seamless user experiences will only be possible if industry stakeholders work together to solve key challenges. Collaboration lies at the heart of CableLabs’ Technology Vision with a focus on seamless connectivity, network platform evolution and pervasive intelligence, security and privacy. Our working groups and collaborative projects bring industry stakeholders together to address these challenges, ensure alignment, and create scalable, interoperable solutions.

Agree on Standards to Facilitate Ecosystem Integration

Interoperable devices require common standards and specifications. In cooperation with cable companies and equipment manufacturers, CableLabs works to develop publicly available specifications that help facilitate deployment of new technologies. As technology continues to evolve, the industry will need to continue operating with an agreed-upon set of standards to deliver the seamless experiences customers expect.

How Is CableLabs Working to Establish a Healthy Ecosystem?

Since its earliest days, CableLabs has remained committed to establishing long-term, cooperative technology innovation. Our founding mission was to plan and fund strategic research and development projects that require cooperative effort and to serve as a central source of information about technology development and innovation for the industry. That mission is still in place today.

The CableLabs Technology Vision builds on this rich history by defining a high-impact framework that will bring together CableLabs members, vendors, and others in the industry to advance the way we connect.

Together, we are working to improve the user experience by collaborating across key focus areas:

- Interoperability

- Fixed Network Evolution

- Advanced Optics

- Security & Privacy

- Specifications & Standards

- Network as a Service

- Fixed Mobile Convergence & Wireless

- Intelligence, Reliability & Performance

- Advanced Research

How You Can Get Involved

The future is bright for the broadband industry. We’re standing on the brink of a technology revolution that will change the way we live, work, learn and play. Here’s how you can join us in shaping the future:

CableLabs Working Groups

Working groups bring members and other stakeholders together to focus on specific industry challenges, priorities and technologies. The following working groups are accepting new participant applications:

Fixed Mobile Convergence & Wireless

- 3GPP Wi-Fi Tech (members only)

- 5G FMC Phase II (members and vendors)

- Next-Gen Wi-Fi (members only)

- Seamless Connectivity (members only)

Fixed Network Evolution

- 100G CPON (members and vendors)

- Common Provisioning and Management of PON (members and vendors)

- CPON Operator Advisory Group (members only)

- DOCSIS 4.0 ATP MAC (members and vendors)

- DOCSIS 4.0 MAC (members and vendors)

- DOCSIS 4.0 MSO (members only)

- DOCSIS 4.0 PHY (members and vendors)

- DOCSIS Technology OSS (members and vendors)

- DOCSIS Proactive Network Maintenance (members and vendors)

- Latency Measurement MSO (members only)

- Low Latency DOCSIS MSO (members only)

- Remote PHY (members and vendors)

Network as a Service (NaaS)

- NaaS (members only)

Security & Privacy

- Device Identification and Authentication (members only)

- Device Management and Identity Security (DMIS) (members only)

- DDoS Mitigation (members only)

- DOCSIS 4.0 Security (members and vendors)

- Future of Cryptography (members only)

- Gateway Device Security (GDS) (members and vendors)

- Mobile/Wireless Security (members only)

- Routing Security (members and NCTA)

- Zero Trust Infrastructure Security (members only)

Interop•Labs Events

These interoperability events provide opportunities for participants to work together on a specific technology solution or goal. Recent events have focused on DOCSIS 4.0 technology, zeroing in on areas like reliability, security and interoperability. Future events will include passive optical networking technologies and Open RAN.

Ready to take the next step? We invite you to engage with CableLabs’ Technology Vision, join a working group or attend our next Interop•Labs event. Join us in our mission to advance the way we connect!

Events

Join the Community Shaping the Future of Connectivity at SCTE TechExpo 2024

Key Points

- SCTE TechExpo, the largest broadband event in the Americas, is September 24–26, 2024, in Atlanta, Georgia.

- Broadband operators who are CableLabs members can receive complimentary full-access passes to the event.

Where will the industry be by 2030? Anticipating the future and creating a strategy to ensure resilience is challenging, with ever-increasing customer demands and new technologies continually reshaping the landscape. The next generation of connectivity must be fast, reliable, ubiquitous and inclusive.

Broadband providers are looking to deploy new technologies such as fiber and mobile services and leverage application programming interfaces (APIs), data and artificial intelligence (AI) to create smart, secure and dynamic networks that improve reliability and performance. These tools will also enable increased energy efficiency and faster launches of new services.

Setting the Stage for Industry Growth

Much more than just a conference, SCTE TechExpo is where the industry meets to spark inspiration, catalyze innovation and impact the future of broadband. TechExpo is the largest and most influential broadband event in the Americas.

The brightest minds in the industry will provide ground-breaking insights on use cases and cutting-edge innovations, organized into nine dedicated conference tracks:

- Wireline Network Evolution

- Wireless & Convergence

- AI & Automation

- Operations, Construction & Network Planning

- Network as a Service (NaaS)

- Security & Privacy

- Growth & Transformation

- Technology Policy

- The Human Factor

In addition to the practical knowledge gained from the sessions, TechExpo attendees can explore the latest innovations and technology transforming the industry in the exhibition. AI is prominent on the agenda, and a dedicated “AI Zone” will make its debut this year, with content appearing on two stages on the show floor, the Tech Talk Stage and The Loft.

CableLabs members can receive complimentary full-access TechExpo passes, which include all headliner and conference sessions as well as entrance to the exhibition and a private lounge. Members will have the opportunity to connect with other global operators, policy leaders, vendors, associations and visionaries, and collectively foster debate and dialogue around the industry’s most pressing topics.

Exhibitor and sponsorship opportunities are also still available.

Fuel the Future at SCTE TechExpo

“TechExpo will deliver an unparalleled opportunity to engage with industry CEOs, CTOs and decision-makers and to see emerging technologies and applications that will transform the industry,” says SCTE President and CEO Maria Popo. She will kick things off on the main stage, sharing the strategy and vision for SCTE.

Opening day headliners will set the bold vision to shape the world of broadband connectivity and the workforce powering it, featuring CableLabs President and CEO Phil McKinney and the co-chairs of this year’s TechExpo, Cox Communications President Mark Greatrex, and President and CEO of Liberty Latin America Balan Nair.

Appearing together to discuss workforce development, following Greatrex and Nair, will be the CEO and COO of Cable One. Rounding out the headliners on day one will be Deloitte’s global Future of Work leader, who will share her passion for making work better for humans and making humans better at work, using technology to enable and elevate human experiences, performance and outcomes.

Taking to the main stage on Wednesday, September 25, will be a CTO Town Hall set to include executives from Charter Communications, Comcast Cable and Cox Communications. Day two will also feature a Chief Strategy Officer panel featuring Liberty Global, Liberty Latin America and Rogers Communications executives.

Where Ideas Become the Blueprint for the Future

For over 40 years, SCTE TechExpo has been bringing the broadband community together to share, inspire and innovate. Join thousands of global attendees at TechExpo in Atlanta, Georgia, September 24–26, 2024, to discover cutting-edge innovation and experience thought leadership that unlocks new possibilities, ignites change and energizes the industry around a shared vision of advancing the way we connect the world.

Technology Vision

An Inside Look: Revolutionizing Connectivity with Dr. Curtis Knittle

Key Points

- Explore the breadth of innovation taking place in our Wired and Optical Center of Excellence labs, from DOCSIS® technologies to passive optical networking and advanced optics.

CableLabs is continuing its march toward achieving ubiquitous, context-aware connectivity that seamlessly integrates into our daily lives. With ever-increasing demands for intelligent and adaptable networks, CableLabs and our member and vendor community are at the forefront of this transformation for the broadband industry.

One way we’re helping drive the future of broadband beyond speed and toward greater reliability is by prioritizing advancements in fiber to the premises (FTTP), DOCSIS technologies, hybrid fiber coax (HFC), passive optical networking, advanced optics and other wireline technologies.

In a new “Inside Look” video from CableLabs, Dr. Curtis Knittle, VP of Wired Technologies, walks through his group’s work to advance CableLabs’ Technology Vision. Working across four focus areas outlined within the Tech Vision (Interops & Vendor Ecosystem, Fixed Network Evolution, Specifications & Standards and Advanced Optics), the Wired Technologies team is developing solutions to deliver lightning-fast, symmetrical speeds and increased reliability.

Check out the short video above to learn more about their projects, our state-of-the-art labs and how our members — along with the vendor community — can collaborate with us in this transformative roadmap for the broadband industry.

“It’s their opportunity to define what they believe they will need in the future for their networks,” Knittle said.

Join us!

Events

Stronger Together: Connect at CableLabs ENGAGE Community Forum

Key Points

- ENGAGE, a virtual CableLabs forum, is exclusively for our NDA vendor community and member operators to learn about the latest CableLabs technologies and how to get involved.

- The free event will cover CableLabs' Technology Vision, how generative artificial intelligence can address industry challenges, standards organization involvement, sustainability and more.

Are you a senior technologist, product strategist or involved in planning your company’s technology roadmap? CableLabs’ exclusive ENGAGE virtual forum gathers our NDA vendor community and member operators to hear more about the latest technology developments at CableLabs.

Register now to join us at ENGAGE from 9 am to noon MDT on Wednesday, Aug. 7.

Building a Healthier Ecosystem

As an attendee, you’ll learn how you can more effectively engage with us on CableLabs projects and initiatives, and you’ll take away practical insights to help tailor your own innovation agenda. Together, we can shape the future of the industry.

CableLabs’ recently unveiled Technology Vision is a cornerstone for industry transformation, targeting ubiquitous, context-aware connectivity and an adaptive, intelligent network. It serves as a roadmap for advancing innovation and technology development in the coming years, fostering alignment and supporting unmatched scale for the industry — all to create a healthier, more competitive ecosystem for CableLabs’ operator members and the vendor community.

At the heart of all that we do at CableLabs is collaboration, and our Technology Vision relies on the combined strength and input of our members, the vendor community and other industry stakeholders. At ENGAGE, you'll hear more about how the framework can be leveraged — at an individual, company and industry level — to unlock new opportunities for better, more seamless online experiences and to fuel innovation at scale.

To support our vision, we continue to define standards and specifications to ensure greater compatibility, interoperability and progress for all of us in the ecosystem. Join us at ENGAGE to learn how we can create impact together.

What’s on the ENGAGE Agenda?

Throughout this morning of thoughtfully curated sessions, you will:

- Hear more about CableLabs’ activities in global standards organizations on behalf of the industry.

- Explore how the industry is responding to important and emerging areas, including sustainability/green initiatives and AI.

- Discover new working groups and initiatives that you can contribute to.

- Learn how engaging with CableLabs’ subsidiaries Kyrio and SCTE can drive success for your teams.

You won’t want to miss this opportunity to learn how to join CableLabs and our community of members and vendors on this exciting journey to shape the future of connectivity. Register now for your complimentary pass to this exclusive, virtual event.

Reliability

Reliability Is the Next Step on the Road to Intelligent, Adaptive Networks

Key Points

- Reliability is the new standard for user experiences as we work toward an adaptive era of seamless connectivity.

- DOCSIS® 4.0 technology moves us closer to true seamless connectivity and interoperability with technologies capable of higher throughput, lower latency and greater network cohesion.

- As part of our Technology Vision, CableLabs is spearheading technology innovation in areas like proactive network maintenance (PNM), network and service reliability, and optical operations and maintenance.

Reliability is the new standard for customer experiences, outpacing speed as the metric users care about most. According to 2023 consumer research, reliable broadband is the second most important factor for people considering a home purchase, and a study by CyLab at Carnegie Mellon University found that users want to know how their broadband will perform in less-than-ideal conditions as well as in typical scenarios.

The growing demand for uninterrupted connectivity correlates directly with the proliferation of IoT devices and online activity. As people spend more of their time online for work, social interaction and entertainment, broadband reliability is critical to delivering quality experiences in each of those arenas.

As part of CableLabs’ Technology Vision, we’re fostering collaboration with CableLabs member operators and the vendor community across the industry as we work toward a new era of seamless network reliability. This era will be defined by ubiquitous, context-aware connectivity and intelligent, adaptive networks.

What Do We Mean by Broadband Reliability?

The term “reliability” means different things in different contexts. In terms of broadband, the term encompasses latency, connectivity, availability and resilience. Each new version of DOCSIS® technology has moved us closer to these goals.

With the introduction of DOCSIS 4.0 technology, we’re moving closer to true interoperability with technologies capable of faster speeds, higher throughput, lower latency and greater overall network cohesion. All this assures a more reliable broadband experience!

At CableLabs, we embrace all these concepts and we’re seeking to move the needle on both network reliability and service reliability:

- Network Reliability — The impact of failure — both financially and in terms of user experience — increases exponentially as user activity and network capacity increase. Network reliability aims to reduce the possibility of failure by creating a network that is resilient, available, repairable and maintainable. A reliable network also includes robust redundancy to ensure performance and continuity.

- Service Reliability — From a user perspective, reliability refers to the probability of not experiencing a service disruption. In the pursuit of seamless connectivity, the goal is to create always-on user experiences in which users can transition between networks without experiencing disruption.
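The two definitions above can be made concrete with some standard reliability arithmetic. The following is a minimal sketch, not a CableLabs method: it assumes independent failures, uses the textbook steady-state availability formula (uptime divided by uptime plus repair time), and every figure and function name is a made-up illustration.

```python
# Illustrative reliability arithmetic. All numbers and names are
# hypothetical assumptions, not measurements from any real network.

def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time a component is up,
    given mean time between failures and mean time to repair."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def redundant_availability(single: float, copies: int) -> float:
    """Availability of `copies` independent parallel paths,
    where any one working path is enough to keep service up."""
    return 1.0 - (1.0 - single) ** copies


def serial_availability(parts: list[float]) -> float:
    """Availability of a chain in which every part must be up at once."""
    result = 1.0
    for part in parts:
        result *= part
    return result


# A hypothetical link that fails about once per 999 hours
# and takes 1 hour to repair.
link = availability(mtbf_hours=999.0, mttr_hours=1.0)

# The same link with one independent backup path.
protected = redundant_availability(link, copies=2)

print(f"single link: {link:.6f}")
print(f"with redundancy: {protected:.6f}")
```

Under these assumptions, shortening repair time improves availability (the "repairable and maintainable" part of network reliability), while adding an independent backup path shrinks the probability that a user sees a disruption (the service reliability view).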

Defining a Roadmap Toward Broadband Reliability

Reliability in the broadband industry depends on cultivating a deep understanding of the network’s physical layer while maintaining a digital mindset. Our mission at CableLabs is to advance the way we connect, and we’re continuously working to develop innovative technology applications aimed at achieving reliability goals and improving performance.

These efforts include helping the industry embrace and connect the concepts of broadband reliability and customer experience, working together to establish interoperability and determining what evolving broadband technology means for users at the ground level.

Here are some of the projects we’re most excited about:

Proactive Network Maintenance (PNM)

PNM has transformed the way operators use DOCSIS technology. Its advanced analytics find and correct impairments in the network that, left untreated, will eventually impact service. PNM can help hybrid fiber coax and fiber-to-the-home networks be equally reliable because it addresses problems proactively, before they lead to service impacts.

PNM innovations will ultimately change the way network operators work with DOCSIS technologies. CableLabs currently has several active PNM workstreams that are seeking to manage the resiliency capacity in the DOCSIS technology network so that users are never impacted by degradation.
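To illustrate the proactive idea in general terms, here is a minimal sketch of trend-based early warning. It is not the PNM analytics CableLabs or operators use: it simply fits a straight line to a hypothetical signal-quality metric sampled at regular intervals and projects when the metric would reach an assumed service-impact threshold. The metric, threshold and sample values are all assumptions for illustration.

```python
# Hypothetical early-warning sketch: project when a declining
# signal-quality metric would cross a service-impact threshold.

def slope(samples: list[float]) -> float:
    """Least-squares slope of samples taken at intervals 0, 1, 2, ..."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = (n - 1) / 2
    mean_v = sum(samples) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(samples))
    den = sum((t - mean_t) ** 2 for t in range(n))
    return num / den


def intervals_until_threshold(samples: list[float], threshold: float):
    """Projected number of sampling intervals until the metric falls to
    `threshold`. Returns None if the metric is not declining, and 0.0 if
    the latest sample is already at or below the threshold."""
    trend = slope(samples)
    if trend >= 0:
        return None
    current = samples[-1]
    if current <= threshold:
        return 0.0
    return (threshold - current) / trend


# Made-up daily readings (in dB) for one device, with an assumed
# threshold of 30 dB below which users would notice problems.
readings = [40.0, 39.0, 38.0, 37.0]
print(intervals_until_threshold(readings, threshold=30.0))
```

A projection like this gives an operator a window in which to schedule a repair before users notice anything, which is the sense in which maintenance becomes proactive rather than reactive.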

With a wealth of network query and test capabilities, we continue to learn how to take full advantage of the information available to us. Development of the science behind operating DOCSIS networks is an important, ongoing effort. Carrying the knowledge into network operations is just as important.

An example of a related technology we’re developing is Generative AI (GAI) tools for network operations. As we learn more about the network and how to maintain it effectively, we need to put that knowledge to use. Through our research and development efforts, we’ve built solutions that turn knowledge into operations.

Quality by Design (QbD)

Another innovation that allows us to take advantage of such information is QbD. QbD tackles network reliability issues head-on by providing network operators direct visibility into the user experience.

The QbD concept, part of CableLabs’ Network as a Service (NaaS) technology, leverages applications’ APIs as network monitoring tools. By enabling applications to share real-time metrics that can be correlated with network performance, QbD allows operators to identify potential network issues and provide automated solutions.
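As a rough illustration of correlating application metrics with network performance, the sketch below computes a Pearson correlation between two made-up series: stall counts reported by an application and latency samples from the network. This is an assumption-laden toy, not the QbD implementation; the function names, data and the 0.8 threshold are all hypothetical.

```python
from math import sqrt

# Hypothetical sketch: does an application-reported metric move together
# with a network metric? Names, data and threshold are illustrative only.

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series.
    Returns 0.0 when either series is constant."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def looks_network_related(app_metric: list[float],
                          net_metric: list[float],
                          threshold: float = 0.8) -> bool:
    """Flag the application issue as likely network related when the two
    series are strongly correlated in either direction."""
    return abs(pearson(app_metric, net_metric)) >= threshold


# Made-up samples taken over the same six time windows.
app_stalls = [0, 1, 0, 4, 6, 1]            # stalls reported by the app
latency_ms = [20, 25, 22, 80, 110, 24]     # network latency per window

print(looks_network_related(app_stalls, latency_ms))
```

Correlation alone does not prove the network caused the stalls, so a check like this is best treated as a prompt for further investigation or an input to automation, not as a verdict.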

Network and Service Reliability

This working group focuses on aligning service and network reliability and enabling operators to systematize tasks, standardize operations and ensure reliability. A 2022 paper outlines a roadmap to help operators get started on their journey toward addressing network and service reliability.

This working group has extended the roadmap into a more comprehensive set of practices that operators can implement, then share what they learn to develop more efficient management of network and service reliability. Through a forthcoming SCTE publication, the working group will define practices that help operators ensure broadband networks and services are reliable and continue to improve. As networks transition to new technologies and services expand in their use of communications, this working group will continue to ensure that reliability is built into networks and services.

Optical Operations and Maintenance

The optical operations and maintenance working group is focused on aligning KPIs and streamlining operations to simplify the maintenance of optical networks. Participation has been strong, and the group already has agreement on a base architecture. The next step is to identify key telemetry and build solutions that support specific use cases.

Through simplification, we can improve interoperability tools, get new technologies to market faster and streamline networks overall. Overarching goals include reducing complexity, ensuring consistency and improving broadband reliability.

Tools and Readiness

This effort is focused on helping operators plan for DOCSIS 4.0 deployment, avoiding issues that may otherwise occur and improving the reliability of DOCSIS 4.0 technologies. Along with industry partners, CableLabs has released specifications for cable operator preparations for DOCSIS 4.0 technology. SCTE Network Operations Subcommittee Working Group 5 is nearly ready to extend this work into a best practices document that guides operators in preparing to deploy DOCSIS 4.0 technology.

Where Are We Headed?

In the next era of broadband, reliability will improve to the point that networks fade into the background of the user experience.

To achieve this, CableLabs’ Technology Vision defines a framework centered on three key themes: Seamless Connectivity; Network Platform Evolution; and Pervasive Intelligence, Security and Privacy. Within those themes, CableLabs is working with world-class experts to deliver flexible, reliable network solutions that can enable new services and improve user experiences.

How to Get Involved

CableLabs members have several opportunities to collaborate in cultivating the future of broadband technology. Consider attending our next Interop·Labs event, which will include PNM testing of DOCSIS 4.0 cable modems. Or join a working group to help move the needle on finding solutions for industry challenges.

We also invite you to learn more about our Technology Vision themes and focus areas. As we work toward the goal of network reliability and seamless connectivity, industry partnerships and collaboration will unlock the door to the next stage of broadband innovation.

Technology Vision

Beyond Speed: The New Eras of Broadband Innovation

Key Points

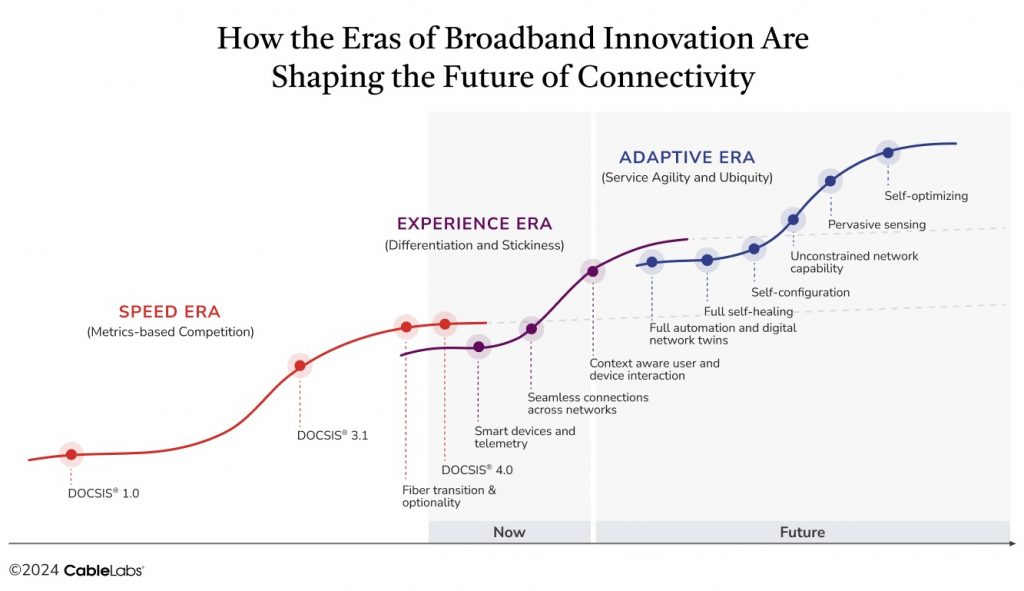

- CableLabs’ Technology Vision outlines three eras of broadband innovation: speed, experience and adaptivity.

- Tailored, seamless and adaptive experiences of the future will require industry collaboration and ecosystem interoperability.

Still trying to attract customers based on ever-increasing gigabit speeds? That may not be enough to gain a competitive advantage in today’s broadband industry. We’re entering a new era — one in which the market prioritizes seamless connectivity over technical metrics.

Users want technology that will work everywhere all the time, with no disruptions. It doesn’t matter to them if an operator can deliver 100 gigabits per second if their connection drops at a pivotal moment.

The broadband industry has reached a tipping point at which additional gains in speed no longer offer the substantial improvements to customer experiences that they used to. As quality of service (QoS) has improved over time, user expectations have also evolved. Users spend more and more time online, and they want higher-quality experiences and predictable delivery that won’t glitch or drop as they go about their day, whether they’re at home, on the go or anywhere in between.

In other words, users don’t want to think about their networks. They just want them to work, wherever they happen to be.

To achieve this outcome, the industry needs a network that can anticipate and adapt to users’ needs in real time as they move between networks and applications. Ultimately, the goal is ubiquitous, context-aware connectivity that fades into the background.

How do we get there? First, let’s take a look at the three eras of broadband innovation outlined in CableLabs’ Technology Vision: speed, experience and adaptivity.

Where We Started: The Speed Era

The early days of broadband innovation focused on metrics. Speed was the moving target for DOCSIS® 1.0 and DOCSIS 3.1 technologies, and the aim was to improve metrics that were visible to consumers. Companies touted faster and faster speeds as a competitive differentiator, and today we can deliver 10 gigabits per second, with even greater speed increases on the horizon.

While it is foundational to the future of the industry, speed has reached a saturation point. It has ceded its place as the primary driver of usage and is now an expectation, not a differentiator. Faster speeds are likely in the future, but the focus has shifted to delivering explicit value in terms of experience.

Where We Are: The Experience Era

Broadband usage has reached an inflection point, with users relying on it in nearly every aspect of their lives. They expect their networks not only to be reliable, and reliably fast, but also to transform the way they live, work, learn and play by enabling optimal experiences throughout all of their online interactions. Seamless connectivity has become the new target for the online experience.

Realizing these customer expectations will require advances in smart devices and telemetry, seamless connections across networks, and context-aware user and device interactions. Operators will also need to have a comprehensive understanding of network performance and how it impacts application experiences.

DOCSIS 4.0 technology moves us closer to seamless user experiences with its capability for delivering symmetrical multi-gigabit speeds while supporting high reliability, high security and low latency. Although network technology innovations have expanded user experience measures beyond speed, true seamless connectivity remains elusive.

There’s no one-size-fits-all solution to the seamless connectivity problem. In addition to the performance of their individual networks, providers must also consider:

- How to optimize the hand-off as customers move from one network to another

- How various user applications and devices impact the user experience

- How to meet the increasing demand for tailored experiences that anticipate needs before a disruption occurs

- How to deliver uninterrupted experiences as users move through various environments

Online experiences in an environment such as a hotel, for example, are currently dependent on that hotel’s Wi-Fi. This means the connection could be glitchy or service may be disrupted, leaving customers frustrated and their expectations unmet. The goal in the experience era is to ensure a Wi-Fi experience that matches those expectations no matter where the user is.

The problem is that measuring a user’s actual experience isn’t easy. We have limited intelligent network metrics today that help us predict what the experience will be, and there’s still significant work to be done in this area.

Still, we’ve seen some promising developments in correlating metrics with experiential outcomes.

In recent work conducted by CableLabs, we’ve explored the potential of correlating application Key Performance Indicators (KPIs) to network KPIs. Through our work on Quality by Design (QbD), we can leverage the customer’s lived application experience to identify network impairments and take action on those issues before the user’s experience degrades. This could alleviate frustrating customer experiences and eliminate many unnecessary customer service interactions that result from not understanding the user’s experience in real time.
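
To make the idea of correlating application KPIs with network KPIs concrete, here is a minimal sketch in Python. It is not the QbD method itself, which this article does not detail. It simply assumes a plain Pearson correlation, and the KPI names (`video_stalls`, `latency_ms`, `downstream_mbps`), the sample values and the 0.8 threshold are all hypothetical, invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length (>= 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / sqrt(var_x * var_y)


def flag_network_kpis(app_kpi, network_kpis, threshold=0.8):
    """Names of network KPIs whose |correlation| with the app KPI meets the threshold."""
    return sorted(
        name
        for name, series in network_kpis.items()
        if abs(pearson(app_kpi, series)) >= threshold
    )


# Hypothetical per-minute samples, invented for illustration only.
video_stalls = [0, 0, 1, 3, 4, 1, 0]  # application KPI: stalls seen by the user
network_kpis = {
    "latency_ms": [12, 13, 25, 60, 75, 28, 12],
    "downstream_mbps": [940, 938, 941, 939, 940, 942, 939],
}

suspects = flag_network_kpis(video_stalls, network_kpis)
print(suspects)
```

In this made-up sample, latency rises and falls with the stall count while throughput stays flat, so only the latency series is flagged. A real system would need far more data and would have to account for the fact that correlation alone does not establish cause.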

Where We’re Headed: The Adaptive Era

Ultimately, the emphasis on user experience will segue into an adaptive era that leverages self-configuration, sensing components for understanding environmental factors and predictive algorithms that anticipate future events to ensure uninterrupted experiences. Networks of the future will be smarter, more capable and less visible, essentially fading into the background.

To achieve this result, industry stakeholders will need to work together toward continued increases in capacity, significant enhancements in reliability and network intelligence, and increased network understanding to facilitate proactive responses. Focus areas in the adaptive era will include:

- Full Automation and Digital Network Twins — As more smart components are built into technology, we’ll gain access to telemetry that isn’t on the network today. The data will help us understand network performance in greater detail and build automation into the network.

- Full Self-Healing — The network will identify failures or weak points and automatically re-route data or allocate resources to maintain functionality.

- Self-Configuration — We have some self-configuration technology today, but it’s still fairly primitive. In the adaptive era, learning algorithms will be built in, fully responsive and able to configure themselves to the environment and the way users interact.

- Unconstrained Network Capabilities — Customers will be able to access their services from anywhere with Network as a Service (NaaS). Achieving this goal will require partnerships across providers and networks to create common understanding.

- Pervasive Sensing — The ability to sense fiber components, Wi-Fi, construction and other events will empower predictive adaptability and proactive responses.

- Self-Optimizing — By constantly analyzing performance metrics and user requirements, networks of the future will be able to optimize resource allocation, routing paths and other parameters for best performance.
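
As a rough illustration of the self-healing idea in the list above, the following Python sketch re-routes traffic around a failed link by searching for the fewest-hops path that avoids it. This is a toy model under stated assumptions, not a description of any deployed mechanism: the topology, the node names and the `shortest_path` helper are hypothetical.

```python
from collections import deque


def shortest_path(links, src, dst, failed=frozenset()):
    """Breadth-first search for a fewest-hops path that avoids failed links."""
    # Build an undirected adjacency map, skipping any link marked as failed.
    adjacency = {}
    for a, b in links:
        if frozenset((a, b)) in failed:
            continue
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)

    previous = {src: None}  # also serves as the visited set
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor chain back to the source.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None  # no route survives the failures


# Hypothetical topology, invented for illustration only.
links = [
    ("hub", "node_a"), ("node_a", "home"),
    ("hub", "node_b"), ("node_b", "node_c"), ("node_c", "home"),
]

primary = shortest_path(links, "hub", "home")
failed = {frozenset(("node_a", "home"))}  # simulate a detected link failure
backup = shortest_path(links, "hub", "home", failed)
print(primary, backup)
```

Here the primary route runs through `node_a`. Once that link is marked as failed, the search falls back to the longer route through `node_b` and `node_c`. A production network would weigh capacity, latency and policy rather than hop count alone.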

The infrastructure to create these kinds of experiences is still in the early stages of development. However, we’re starting to see broadband innovations that incorporate adaptive elements. Examples include:

- Automated Profile Management Changes — Built-in mechanisms detect a problem in a section of the network and shift the traffic to a different range inside that frequency.

- Smart Home Technology — Smart home devices, such as thermostats and lighting systems, use sensors to understand environmental factors. They can then adjust settings accordingly, providing a more comfortable and energy-efficient living space.

- Environmental Sensing — Using various sensors and algorithms, networks can sense environmental events (e.g., seismic activity or nearby construction) and alert first responders or respond to avoid an outage.

What’s Next for the Industry?

Delivering true seamless connectivity — and enabling truly optimal application experiences — won’t happen overnight. To get there, we’ll need to work together as an industry to solve complex system-level issues and develop common platforms for interoperability.

As a whole, the industry must transition away from its focus on improving network metrics such as speed. Operators must prioritize delivering tailored, seamless and adaptive experiences built on interoperability. Doing so will require common interfaces to support interoperability, integrated definitions and data structures, and collaboration across providers and networks.

The CableLabs Technology Vision seeks to move the industry forward by helping our members provide superior customer experiences everywhere and aligning the industry on a concise model for building adaptive networks through:

- Seamless Connectivity

- Network Platform Evolution

- Pervasive Intelligence, Security and Privacy

To design and implement the technologies and operational models of the future, operators will need to develop a common understanding that transforms the vision of the adaptive era into a reality for every customer. Together, we can deliver network solutions that are flexible, reliable and capable of enabling new services and improved user experiences.

Technology Vision

An Inside Look at CableLabs’ New Technology Vision

Key Points

- The Tech Vision aims to drive alignment and scale for the broadband industry and to foster a healthy vendor ecosystem.

- A framework of core areas focuses on defining network capabilities that are flexible, reliable and can deliver quality services and user experiences.

Last month at CableLabs Winter Conference, we unveiled a new way forward for CableLabs’ technology strategy — a transformative roadmap designed to support our member and vendor community through innovation and technology development in the coming years.

Mark Bridges, CableLabs’ Senior Vice President and Chief Technology Officer, recently sat down with David Debrecht, Vice President of Wireless Technologies, to talk more about this new Technology Vision. Check out the video below to learn more about the strategy and how it evolved from conversations with our member CTOs about what’s important to them.

Thinking About Connectivity Differently

The Technology Vision serves as a framework to help align the industry around the issues that matter most to CableLabs members and operators. By leveraging what CableLabs does best, we can help provide alignment and scale for the industry and direct our efforts toward developing a healthy vendor ecosystem. Involving our members from the get-go has allowed us to refine the Tech Vision framework to focus on areas that will have the most impact for them and give them a competitive edge.

“It’s all about connectivity,” Bridges explains. “People don’t care how they’re connecting. They don’t think about the access network. They don’t think about the underlying technologies like we do. We’re best positioned to be that first point of connection for people.”

The CableLabs Technology Vision defines three future-focused themes that encompass the scope of broadband technologies: Seamless Connectivity; Network Platform Evolution; and Pervasive Intelligence, Security & Privacy. The goal is to provide a concise model for building adaptive networks and set up a framework of core areas focused on defining network capabilities that are flexible, reliable and able to deliver quality services and user experiences.

In the video, Bridges highlights some of the initiatives CableLabs is already deeply involved in to support this vision, including Network as a Service (NaaS), fiber services and mobile innovations designed to move us closer to seamless connectivity. These areas are mainstays in CableLabs’ technology portfolio, allowing us to drive innovation and advancement while keeping costs low.

Creating an Adaptive Network

What’s ahead for the industry in the near future? Bridges points to a “convergence of connectivity” that enables operators to offer seamless services across different network and device types.

As AI evolves and more intelligent devices enter the market, we’re already seeing increases in network usage. These innovations raise critical questions as we look to the future: How will traffic utilization patterns change? What impacts will we see for reliability and capacity needs?

Bridges envisions a network that is more reliable, more capable and, in a sense, less visible. It’s a network, he says, that “fades into the background. You connect and do the things that you want to do and you don’t have to think about it.”

Do you want to be a part of shaping that future? Our working groups bring CableLabs members and vendors together to make meaningful contributions that advance the industry as a whole. Reach out to us to learn how you can participate in this work and help make an impact.

Events

CableLabs Winter Conference: Powering Connections and Collaboration This Month in Orlando

Key Points

- Winter Conference is designed for CableLabs members and the NDA vendor community to expand their knowledge, build meaningful connections and discover the technology advancements transforming the industry.

- Sessions will cover topics including AI, Wi-Fi, seamless connectivity, optics and DOCSIS® technology — as well as an all-new Technology Vision for the industry.

Are you ready to maximize your connections and unlock opportunities for collaboration? Join us at CableLabs Winter Conference — an exclusive event bringing together industry experts and thought leaders to foster progressive dialogue and help shape the future of broadband.

This private, intimate gathering is a unique opportunity for our members and the NDA vendor community to expand knowledge, network with peers and discover the technology advancements transforming the industry.

CableLabs’ much-anticipated and most-requested event is back in Orlando, Florida, from March 25–28, 2024. Registration is open for members through March 20, and on-site registration will be available at the conference.

Voices of the Industry

Craig Moffett, industry analyst and Senior Managing Director of MoffettNathanson, will set the scene with his perspective on the current state of the industry as well as emerging trends and opportunities.

Later, industry executives Ron McKenzie of Rogers Communications, Elad Nafshi of Comcast Cable and Gary Koerper of Charter Communications will discuss the industry’s new and evolving Technology Vision in a strategic, forward-thinking session led by CableLabs’ SVP and CTO, Mark Bridges. This new vision will foster more robust, efficient and sustainable industry ecosystems.

Among the other featured Winter Conference speakers are Victor Esposito, CTO at Ritter Communications, who will discuss FTTH and PON strategies, and Dan Rice, Vice President of Access Network Engineering at Comcast Communications, who will participate in a panel exploring the most pressing topics around network platform evolution.

A Spotlight on Strategy

A series of all-new strategy sessions is planned for Thursday, March 28, giving members a chance to exchange ideas and practical insights. A Broadband Usage and Demand Forecasting session, which will include breakfast, will kick off the day by covering the evolution of network demand and its impact on future technology and capacity planning.

The breakfast will be followed by deep-dive sessions on seamless connectivity strategies using mmWave spectrum and Low-Earth-Orbit Satellite technologies, and the market dynamics and economics of FWA and FTTH. Members must be registered for Winter Conference to attend these strategy sessions.

Captivating Content

Winter Conference is packed with insights and inspiration. Just some of the additional conference session topics include:

AI and Autonomous Networks

- Learn about the transformative effects of ongoing AI endeavors and discuss how the industry can unite to create a more interconnected and intelligent network ecosystem.

FTTH and PON

- Discuss challenges to FTTH deployment, accelerating network deployment, and the modernization and virtualization of PON.

- Explore the future of PON — including emerging technologies such as 25G, 50G and 100G PON, quality-based access networks, seamless connectivity and reliability.

Seamless Connectivity

- Get a Wall Street expert’s view on how telcos are positioning themselves for seamless convergence.

- Explore the growth drivers and early use cases of Global Developer Services.

Wi-Fi

- Hear about the challenges of supporting the in-home Wi-Fi experience and strategies needed for quick and effective resolution of issues.

- Explore the strategic role of Wi-Fi in ensuring a high-quality, seamless in-home broadband experience, and gain insights into the latest developments.

And that’s not all! There’s so much more to discover and learn at Winter Conference.

Smaller Market Conference

At the Smaller Market Conference on Monday, March 25, small and mid-tier operators will have the opportunity to discuss the issues that are most important to them and their teams. This event is available for CableLabs members and NCTA guests. Registration for Smaller Market Conference is separate from Winter Conference, so please be sure to register for both if you are planning to attend.

Experience Winter Conference

Don’t miss the chance to take part in one of the highlights of the CableLabs events calendar. Expand your knowledge, build meaningful connections and stay at the forefront of industry advancements.

Visit the event website to register and plan your trip to CableLabs Winter Conference today.