Video

Cable Operators Harmonize Around HDR10 for High Dynamic Range Video

Cable ready to deploy both on-demand and live HDR services

High dynamic range (HDR) is the latest in a series of enhancements that dramatically improve the quality of video delivered live or on demand within the cable industry. And, with a proverbial sigh of relief, cable operators have recently harmonized around HDR10 as a starting point for deploying these services, leaving room for further optimized solutions going forward.

Crawl, walk, run.

Think of it as having reached the first stage in a “crawl, walk, run” approach.

HDR is grounded in a simple design objective: make the darkest parts of the video seem even darker while making the brightest parts seem even brighter. Everything else in the video should scale accordingly.

For the mathematician, this is a simple problem, but for the cable operators, not so much.

More than one way to skin the cat

It turns out that there is a cornucopia of possible ways to implement HDR. Choosing which one to deploy therefore means navigating the equivalent of a complex neural network of pros and cons that would put even the smartest artificial intelligence algorithm to shame. Then, once that decision is made (and it could come out differently for each operator), there is the issue of finding content producers whose content is produced in a way that leverages the chosen path. All of this serves one goal: delivering high-quality content that looks consistent whether it is delivered live or on demand, and whether it is viewed on a television set, reader, laptop, phone, tablet, or any other of a plethora of devices.

But, why so difficult?

Solutions for implementing HDR vary depending on the camera settings used to capture the video and the settings of the displays used to consume it. Further complicating matters for cable operators, the on-demand video workflow is not always identical to the live production workflow. Moreover, at any point between video production and video consumption, the video is relayed across a network of devices that may not be fully tuned for the chosen solution. Put concretely, the more complicated the HDR solution, the easier it is for the video to get mangled in the network.

Making matters even worse

Each of the solutions has at least one bona fide standard backing it from a legitimate standards developing organization (SDO). SDOs that have adopted HDR standards include ATSC, ETSI, SMPTE, and MPEG.

From simple to not-so-simple solution designs, how to make HDR work?

The most straightforward way to implement an HDR workflow is to encode each pixel of the video with a nonlinear transfer function (an electro-optical or opto-electronic transfer curve) while the video is being produced. The drawback is that variations in luminance from one video stream to another are not necessarily accounted for. Nevertheless, the results are very good. Solutions consistent with this strategy include PQ10 and Hybrid Log-Gamma (HLG).
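For readers who want to see what such a curve actually does, here is a minimal Python sketch of the two curves named above, written from the published formulas: the PQ curve from SMPTE ST 2084 (the basis of PQ10 and HDR10) and the HLG OETF from ITU-R BT.2100. The function names are our own, inputs are simply clamped to each curve's nominal range, and real pipelines also deal with quantization to 10-bit code values, color primaries, and other details omitted here.

```python
import math

# SMPTE ST 2084 (PQ) constants, as exact rationals from the standard.
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PQ_PEAK_NITS = 10000.0   # PQ is defined up to 10,000 cd/m^2


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance in cd/m^2 -> PQ signal in [0, 1]."""
    y = min(max(nits / PQ_PEAK_NITS, 0.0), 1.0)
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: PQ signal in [0, 1] -> absolute luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return y * PQ_PEAK_NITS


# ITU-R BT.2100 HLG OETF constants.
HLG_A = 0.17883277
HLG_B = 1.0 - 4.0 * HLG_A                      # 0.28466892
HLG_C = 0.5 - HLG_A * math.log(4.0 * HLG_A)    # about 0.55991073


def hlg_oetf(scene_linear: float) -> float:
    """Relative scene-linear light in [0, 1] -> HLG signal in [0, 1]."""
    e = min(max(scene_linear, 0.0), 1.0)
    if e <= 1.0 / 12.0:
        return math.sqrt(3.0 * e)               # square-root segment for darker tones
    return HLG_A * math.log(12.0 * e - HLG_B) + HLG_C  # logarithmic segment for highlights
```

As a quick check, `pq_encode(10000.0)` returns 1.0 (PQ's 10,000 cd/m² ceiling), while a 100 cd/m² level lands at roughly 0.51, which shows how PQ devotes about half of its signal range to levels at or below typical SDR brightness.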

A slightly more complex solution builds on PQ10 by adding static metadata that signals variations in luminance between video streams. This solution is known as HDR10.
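HDR10's static metadata is commonly described as carrying the mastering display's characteristics (SMPTE ST 2086) plus two content light levels, MaxCLL and MaxFALL (CTA-861.3). The sketch below computes those two values from linear-light frames, assuming the usual definition in which a pixel's light level is the largest of its R, G, and B components in cd/m². It is a simplification for illustration: it visits every pixel of every frame and ignores whatever refinements real mastering tools apply, and the function name is hypothetical.

```python
from typing import Iterable, List, Tuple

# One pixel as linear-light (R, G, B) components in cd/m^2 (nits).
Pixel = Tuple[float, float, float]


def content_light_levels(frames: Iterable[List[Pixel]]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) in cd/m^2 for a sequence of frames."""
    max_cll = 0.0   # brightest single pixel seen in any frame
    max_fall = 0.0  # highest frame-average light level seen in any frame
    for frame in frames:
        if not frame:
            continue  # skip empty frames rather than divide by zero
        # A pixel's light level is taken as its largest R, G, or B component.
        levels = [max(pixel) for pixel in frame]
        max_cll = max(max_cll, max(levels))
        max_fall = max(max_fall, sum(levels) / len(levels))
    return max_cll, max_fall


frames = [
    [(100.0, 50.0, 20.0), (10.0, 10.0, 10.0)],  # frame average: 55 nits
    [(1000.0, 200.0, 0.0), (0.0, 0.0, 0.0)],    # one 1000-nit highlight, average 500 nits
]
max_cll, max_fall = content_light_levels(frames)
```

Because these values are computed once for the whole stream, a single bright highlight sets MaxCLL for the entire title, which is the limitation that scene-by-scene metadata aims to address.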

Finally, the comparatively more difficult-to-implement solutions signal variations in luminance from scene to scene within each video stream, providing metadata for each scene ahead of the scene itself. Another more difficult-to-implement solution is to encode a standard dynamic range (SDR) signal separately from a side-stream of information that enhances the signal from SDR to HDR.
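To illustrate what providing metadata "for each scene ahead of the scene itself" means for the layout of a stream, here is a minimal Python sketch that computes a per-scene peak and average luminance and emits each record immediately before that scene's frames. The record type, its field names, and the one-luminance-value-per-pixel model are hypothetical simplifications; real dynamic-metadata formats, such as those in the SMPTE ST 2094 family, define much richer payloads.

```python
from dataclasses import dataclass
from typing import List, Sequence, Union


@dataclass
class Frame:
    # Simplification: one linear luminance value (cd/m^2) per pixel.
    luminance_nits: List[float]


@dataclass
class SceneMetadata:
    # Hypothetical per-scene record; real dynamic metadata carries much more.
    scene_index: int
    peak_nits: float
    average_nits: float


def interleave_scene_metadata(
    frames: Sequence[Frame], scene_starts: Sequence[int]
) -> List[Union[SceneMetadata, Frame]]:
    """Emit each scene's metadata record immediately before that scene's frames.

    scene_starts holds the index of the first frame of each scene, in
    ascending order, starting at 0.
    """
    if not scene_starts or scene_starts[0] != 0:
        raise ValueError("scene_starts must begin with frame 0")
    bounds = list(scene_starts) + [len(frames)]
    stream: List[Union[SceneMetadata, Frame]] = []
    for index in range(len(scene_starts)):
        scene = frames[bounds[index]:bounds[index + 1]]
        pixels = [value for frame in scene for value in frame.luminance_nits]
        if not pixels:
            raise ValueError(f"scene {index} contains no pixels")
        stream.append(SceneMetadata(index, max(pixels), sum(pixels) / len(pixels)))
        stream.extend(scene)  # the scene's frames follow its metadata record
    return stream


frames = [Frame([10.0, 20.0]), Frame([30.0, 40.0]), Frame([500.0, 100.0])]
stream = interleave_scene_metadata(frames, scene_starts=[0, 2])
```

Every device along the path has to pass these extra records through intact and in order, which is one concrete reason such solutions demand more tuning of the network.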

So, why even bother with the more complicated solutions?

The answer, as one might expect, is that the more difficult-to-implement solutions can produce better results. The complication for a cable operator, however, is that the additional metadata or side-stream information requires more fine-tuning of all the underlying network components, for both live and on-demand delivery.

Cable roadmap: start with HDR10 and then optimize

Earlier this year, CableLabs facilitated meetings of its members to study candidate roadmaps, taking into account both near-term and longer-term solutions for the delivery of HDR services. Following that study of all potential ways forward, cable operators settled on a roadmap: start with HDR10 and build further optimizations into their networks from there. The benefit is a harmonized approach that leaves room for all other solutions, including HLG, since conversion from HDR10 to HLG is a relatively simple process. Other, more complex solutions can be deployed later as the corresponding network components are added to support these services. Content producers, for their part, benefit from a clearly defined starting point with their cable network business partners.

Finally, cable operators can send the white-smoke signal; cable is ready to deliver HDR.


Video

The Near Future of AI Media

CableLabs is developing artificial intelligence (AI) technologies that are helping pave the way to an emerging paradigm of media experiences. We’re identifying architecture and compute demands that will need to be handled in order to deliver these kinds of experiences, with no buffering, over the network.

The Emerging AI Media Paradigm

Aristotle ranks story as the second-best way to arrive at empirical truth and philosophical debate as the first. Since the days of ancient oral tradition, storytelling practices have evolved, and in recent history, technology has brought story to the platforms of cinema and gaming. Although the methods to create story differ on each platform, the fact that story requires an audience has always kept it separate from the domain of Aristotle’s philosophical debate…until now.

The screen and stage are observational: by nature, they’re separate and removed from causal reality. The same principle applies to games. A child observing an anthill can poke and prod the ants with a stick to see how they react, but the experience remains observational, and the practices that have made gaming work until now have been built upon that principle.

To address how AI is changing story, it’s important to understand that the observational experience has been largely removed on platforms that offer six degrees of freedom (6DoF). Creating VR content with observational methods is ultimately a letdown: the user is present and active within the VR system, and observational methods don’t measure up to what the user’s freedom of movement, or agency, calls for. It’s like putting a child in a playground with a stick to poke and prod at it.

AI story is governed by methods of experience, not observation. This paradigm shift requires looking at actual human experience, rather than the narrative techniques that have governed traditional story platforms.

Why VR Story is Failing

The HBO series Westworld is about an AI-driven theme park with hundreds of robot ‘Hosts’ that are indistinguishable from real people. Humans are invited to indulge their every urge, killing and desecrating the Hosts as they wish, since none of their actions have any real-world consequence. What Westworld misses, despite a compelling argument from its writers, is that in reality humans are fragile, complex social beings rather than sociopaths, and when given a choice, becoming more primal and brutal is not a trajectory toward a better human experience. What is real about Westworld, however, are the mythic lessons the story handles: the seduction of technology, the risk of humans playing God, and overcoming controlling authority, to name a few.

The same experiential principle applies to superheroes or any kind of fictional action figure: being invincible or having superhuman powers is not part of authentic human becoming. These devices work well for teaching about dormant psychological forces, but not for actually assimilating them, and this is where the confusion around VR story begins.

Producing narrative experiences for 6DoF, including social robotics, means handling real human experience, and that means understanding a person’s actual emotion, behavior, and perception. Truth is arrived at not by observing a story but by assimilating it through experience.

A New Era for Story

The most famous scene in Star Wars is when Vader is revealed as Luke’s father. Luke makes the decision to commit suicide rather than join the dark side with his father. Luke miraculously survives his fall into a dark void, is rescued by Leia, and ultimately emerges anew. The audience naturally understands the evil archetype of Vader, the good archetype of Obi-Wan, and Luke’s epic sacrifice of choosing principle over self in this pivotal scene. The archetypes and the sacrifice are real, and that’s why they work for Star Wars or for any story and its characters, but Jedi are not real. Actually training to become a Jedi is delusional, but the archetype of a master warrior in any social domain is very real.

And this is where it gets exciting.

Story, it turns out, was not originally intended as passive entertainment; rather, it was a way to guide and teach about actual experience. The concept of archetypes goes back to Plato, but Carl Jung and Joseph Campbell made it popular, and it was Hollywood that adopted archetypes for character development and screenwriting. According to Jung, archetypes are primordial energies of the unconscious that move into the conscious. In order for a person to grow and build a healthy psyche, he or she must assimilate the archetype that’s showing up in their experience.

The assimilation of archetypes is a real experience, and according to Campbell, the process of assimilation is when a person feels most fully alive. Movies, being observed by the audience, are like a mental exercise for how a person might go about that assimilation. If story is to scale on the platforms of VR, MR (mixed reality) and social robotics, the user must experience archetypal assimilation to a degree of reality that the flat screen cannot achieve.

But how? The scope of producing for user archetypes is massive. To really consider doing that means going full circle back to Aristotle’s philosophy and its offspring disciplines of psychology, theology, and the sciences, all of which have their own thought leaders, debates, and agreements on what truth by experience really means. The future paradigm of AI story, it seems, includes returning to the Socratic method of questioning, probing, and arguing in order to build critical thinking skills and self-awareness in the user. If AI can be used to achieve a greater reality in that experience, then this is the beginning of a technology that could turn computer-human interaction into an exciting adventure into the self.

You can learn more about what CableLabs is working on by watching my demonstration, in partnership with USC's Institute for Creative Technologies, on AI agents.

Eric Klassen is an Executive Producer and Innovation Project Engineer at CableLabs. You can read more about storytelling and VR in his article in Multichannel News, "Active Story: How Virtual Reality is Changing Narratives." Subscribe to our blog to keep current on our work in AI.

Video

W3C Recommendation: Plugin-Free Playback of Premium Content

The recent World Wide Web Consortium (W3C) Encrypted Media Extensions (EME) Recommendation describes the importance of browser-based content protection for a better user experience when viewing encrypted video on the web. The W3C Media and Entertainment Interest Group has been a key venue for the worldwide definition of premium video content delivery requirements. According to W3C:

“EME is an Application Programming Interface (API) that allows plugin-free playback of protected (encrypted) content in Web browsers, which works seamlessly on all major platforms. W3C’s Media Source Extensions (MSE) provides the API for streaming video while its companion Encrypted Media Extensions (EME) provides the API for handling encrypted content.”

As hinted in the announcement, EME is but one piece in achieving a goal of premium content delivery without the use of plugins. Moving web interactions away from plugins into browsers enhances security, privacy and accessibility for consumers and simplifies the development process for web developers.

Consider the past, not so long ago, when no common solution existed for premium content and each company implemented its own browser-specific or plugin solution. Each solution was different: no content portability across platforms, uneven support for critical viewer features, no common encryption, and no common standard, or even disclosure, for security considerations. Every piece of premium content was wrapped in technology specific to a browser, system vendor, and user device.

Premium video content is much more than video

The use of streaming services with encrypted video content has grown exponentially, and viewers’ expectations are high: multiple-language audio tracks, subtitles and closed captions, flawless delivery over the best-effort internet, state-of-the-art user interfaces, and content that can be viewed in any browser on any device. This is a challenge for content providers. How do they meet these expectations without an explosion of complexity and cost in dealing with multiple devices, browsers, and network technologies?

A common, browser-based content encryption solution is necessary, but by no means sufficient, to address this challenge. Along with EME, CableLabs and multiple system operators (MSOs) have initiated and participated in W3C groups to piece together this puzzle.

W3C Documents

It is useful to understand the set of browser features defined by W3C and others, why every feature in this set is required for premium content and why it’s all these features or nothing. These requirements are reflected in many W3C documents, in addition to the EME Recommendation:

- Browser support for multiple audio, video, subtitle, and closed caption tracks is defined in the HTML5 Recommendation as a result of these requirements.

- Sourcing In-band Media Tracks defines how content in any format used on the web delivers these tracks in a common, interoperable manner.

- The Media Source Extension Recommendation, MPEG DASH and DASH Industry Forum collectively define the efficient, smooth delivery of time-critical media across the best-effort internet to any browser.
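MSE gives a web page the means to feed media segments to the browser, and a DASH manifest describes the available representations, but neither mandates how a player decides which representation to fetch next; that adaptation logic is left to the client. As a rough illustration, here is a minimal throughput-based heuristic in Python. The function name, the harmonic-mean estimate, and the 0.8 safety factor are our own assumptions, not anything defined by MSE or DASH, and production players typically weigh buffer level as well.

```python
from typing import Sequence


def choose_bitrate(
    bitrates_bps: Sequence[int],
    recent_throughput_bps: Sequence[float],
    safety_factor: float = 0.8,
) -> int:
    """Pick the highest representation bitrate that fits a discounted throughput estimate."""
    if not bitrates_bps or not recent_throughput_bps:
        raise ValueError("need at least one bitrate and one throughput sample")
    if any(sample <= 0 for sample in recent_throughput_bps):
        raise ValueError("throughput samples must be positive")
    # The harmonic mean is pulled toward the slowest samples, damping brief spikes.
    estimate = len(recent_throughput_bps) / sum(1.0 / s for s in recent_throughput_bps)
    budget = safety_factor * estimate  # leave headroom for best-effort variability
    fitting = [b for b in bitrates_bps if b <= budget]
    # Fall back to the lowest rung when nothing fits, so playback can continue.
    return max(fitting) if fitting else min(bitrates_bps)


ladder = [1_000_000, 3_000_000, 6_000_000]  # hypothetical bitrate ladder, bits/s
```

With two recent samples of 5 Mbit/s, for example, the budget is about 4 Mbit/s and the sketch picks the 3 Mbit/s representation.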

Every one of these features is essential to the industry goal of making the web a first-class platform for media and entertainment.

Encrypted Media Extensions, along with HTML5 features, media tracks, Media Source Extensions, and DASH, define common implementations for the essential components of premium content. All of these pieces rely on each other; take one away and it's back to the not-so-good old days. The W3C has provided an essential service to consumers, content providers, and technology partners by enabling create-once, use-anywhere premium content.


Labs

The Cost of Codecs: Royalty-Bearing Video Compression Standards and the Road that Lies Ahead

Since the dawn of digital video, royalty-bearing codecs have been the gold standard for much of the distribution and consumption of our favorite TV shows, movies, and other personal and commercial content. Since the early ’90s, the Moving Picture Experts Group (MPEG) has defined the formats and technologies for video compression that allow all those pixels and frames to be squeezed into smaller and smaller files. For better or for worse, along with these technologies comes the intellectual property of the individuals and organizations that created them. In the United States and in many other countries, this means patents and royalties.

MPEG-LA

The MPEG Licensing Authority (MPEG-LA) was established after the development of the MPEG-2 codec to manage patents essential to the standard and to create a sensible, consolidated royalty structure through which intellectual property holders could be compensated. With over 600 patents from 27 different companies considered essential to MPEG-2, MPEG-LA spared anyone wishing to develop products using MPEG-2 from having to negotiate a separate licensing agreement with each patent owner. The royalty structure that MPEG-LA and the patent owners adopted for MPEG-2 was fairly simple: a one-time flat fee per codec. See table below.

The successor codec to MPEG-2, MPEG-4 Advanced Video Coding (AVC), was completed in 2003. Shortly thereafter, MPEG-LA released its licensing terms, which contained per-unit royalties and a ceiling on the amount that any one licensee must pay in a year. However, not all parties who claimed to have essential patents for AVC were satisfied with the royalty structure established by MPEG-LA. Thus, a new AVC patent pool, managed by Via Licensing, a spin-off of Dolby Laboratories, Inc., emerged in 2004 and announced its own licensing terms. This created a significant amount of confusion among those trying to license the technology. Would you end up having to license from both parties to protect yourself from future litigation? Some patent owners were in both pools; would you have to pay twice for their patents? In the end, Via Licensing signed an agreement with MPEG-LA, and its licensors merged with the MPEG-LA licensors to create a single licensing authority. The royalty structure established by the MPEG-LA group for MPEG-4 added new fees to the mix. In addition to a one-time device fee (covering both software and hardware applications), per-subscriber and per-title fees (e.g., VOD, digital media) were added. These additional fees have deterred many cable operators and other MVPDs from adopting MPEG-4. Royalties were also established for over-the-air broadcast services, while free Internet broadcast video (e.g., YouTube, Facebook) remained exempt. See table below.

The latest MPEG video codec is called High Efficiency Video Coding (HEVC), and with it comes the latest licensing terms from MPEG-LA. A flat royalty per device is incurred for those shipping over 100,000 devices to end-users. Companies with device volumes under 100,000 will pay no royalties. In addition, the royalty cap for organizations was raised substantially. Most companies distributing HEVC encoders/decoders will not be charged royalties because they will easily fall under the 100,000-unit limit. Larger companies that sell mobile devices (Samsung, LG, etc.), OS vendors (Microsoft), and large media software vendors (Adobe, Google) will likely be the ones paying HEVC royalties. Learning from the lackluster adoption rate of MPEG-4 by content distributors, there are no royalties on content distribution. This seems like a step in the right direction.

The following table details the royalty structures established from the days of MPEG-2 up to the proposed terms for HEVC.


Trouble on the Horizon

As one might expect, many companies that hold patents they claim are essential to HEVC were not happy with the MPEG-LA terms and have instead joined a new licensing organization, HEVCAdvance. In early 2015, when HEVCAdvance first announced its licensing terms, it caused quite a stir in the industry. Not only were its per-device rates much higher, but there was also a very vague “attributable revenue” royalty for content distributors and any other organization that uses HEVC to facilitate revenue generation. On top of all that, there were no royalty caps.

In December 2015, due in no small part to the media and industry backlash that followed the release of the original license terms, HEVCAdvance released a modified summary of terms and royalties. Royalties for devices were lowered slightly but remain significantly higher than those MPEG-LA has established, and with no yearly cap. Additionally, royalty rates differ slightly depending on the type of device (e.g., mobile phone, set-top box, 4K UHD+ TV). No royalties are assessed for free over-the-air and Internet broadcast video. However, per-title and per-subscriber royalties remain. See table above. The HEVCAdvance terms also assess royalties, with no caps, on past sales of per-title and subscription-based units. Finally, there is an incentive program in which early adopters of the HEVCAdvance license can receive limited discounts on royalties for past and future sales.

It is important to note that the companies participating in each patent pool (HEVCAdvance, MPEG-LA) are not the same. If the two pools are not reconciled or merged, licensees may have to sign agreements with both organizations to better protect themselves against infringement claims. This would have a substantial financial impact on device vendors (TVs, browsers, operating systems, media players, Blu-ray players, set-top boxes, game consoles, etc.). Of course, content distributors are also unhappy with the HEVCAdvance terms, which revert to the content-based royalties of MPEG-4, with a higher cap of $5M per year. As a result, you may expect content distributors to pass a portion of their subscription- and title-based royalties on to their customers, raising prices for consumers like you and me.
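To make the stacking concern concrete, here is a minimal Python sketch of how a device vendor's annual royalty adds up when it must license from more than one pool. The pool names, per-unit rates, royalty-free unit counts, and caps are hypothetical placeholders, not the actual MPEG-LA or HEVCAdvance terms. The model also assumes that a royalty-free allowance exempts the first units shipped each year and that a cap, when present, limits the annual total.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class PatentPool:
    # All figures are hypothetical placeholders, not any pool's actual terms.
    name: str
    rate_per_unit: float
    free_units: int = 0                 # units per year exempt from royalties
    annual_cap: Optional[float] = None  # None models a pool with no yearly cap

    def annual_royalty(self, units: int) -> float:
        billable = max(units - self.free_units, 0)
        owed = billable * self.rate_per_unit
        return owed if self.annual_cap is None else min(owed, self.annual_cap)


def total_annual_royalty(pools: Sequence[PatentPool], units: int) -> float:
    """Royalties stack: a licensee of several pools pays each one separately."""
    return sum(pool.annual_royalty(units) for pool in pools)


pool_a = PatentPool("Pool A", rate_per_unit=0.25, free_units=100_000, annual_cap=1_000_000.0)
pool_b = PatentPool("Pool B", rate_per_unit=0.75)  # no royalty-free tier, no cap
```

Under these made-up numbers, a vendor shipping 10 million units would owe Pool A only its $1M cap but would owe the uncapped Pool B $7.5M, for $8.5M in total, which shows how an uncapped pool can dominate the bill.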

Update (2/3/16): Technicolor announced that it will withdraw from HEVCAdvance and license its patents directly to device manufacturers. While this is an encouraging sign (and good for content distributors), I'm curious why it did not simply join MPEG-LA. If more and more companies take the route of individual licensing, acquiring licenses for HEVC may become more complex.

The Royalty-Free Codec Revolution

In response to the growing concerns regarding the costs of using and deploying MPEG-based codecs, numerous companies and organizations have developed, or are planning to develop, alternatives that are starting to show some promise.

Google VPx

Google, through its acquisition of On2 Technologies, has developed the VP8 and VP9 codecs to compete with AVC and HEVC, respectively. Google has released the VP8 codec, and all IP contained therein, under a cross-license agreement that provides users royalty-free rights to use the technology. In 2013, Google signed an agreement with MPEG-LA and 11 MPEG-LA AVC licensors to acquire a license to VP8 essential patents from this group. This makes VP8 a relatively safe choice, legally speaking, as an alternative to AVC. The agreement between Google and MPEG-LA also covers one “next-generation” codec. According to my discussions with Google, this is meant to be the VP9 codec. The VP8 cross-license FAQ indicates that a VP9 cross-license is in progress, but nothing official exists to date.

Most of the content served by Google’s YouTube is compressed with VP8/VP9 technology. Additionally, numerous software and hardware vendors have added native support for VPx codecs. Chrome, Firefox, and Opera browsers all have software-based VP8 and VP9 decoders. Recently, Microsoft announced that it would add VP9 decode support to its Edge browser in an upcoming release. On the hardware side, Google has signed agreements with almost every major chipset vendor to add VP8/VP9 functionality to their designs.

From a performance standpoint, we feel that VP8 and AVC are very similar, as are VP9 and HEVC. Numerous experiments have been run to assess the relative performance of the codecs and, while it is difficult to make an apples-to-apples comparison, they seem very close. If you’d like to look for yourself, here is a sampling of the many tests we found:

- https://www.ietf.org/mail-archive/web/rtcweb/current/msg09064.html

- Mukherjee, et al., Proceedings of the International Picture Coding Symposium, pp. 390-393, Dec. 2013, San Jose, CA, USA.

- Grois, et al., Proceedings of the International Picture Coding Symposium, pp. 394-397, Dec. 2013, San Jose, CA, USA.

- Bankoski, et al., Proceedings of the IEEE International Conference on Multimedia and Expo, pp. 1-6, Jul. 2011, Barcelona, ES.

- Rerabek, et al., Proceedings of SPIE, Applications of Digital Image Processing XXXVII, vol. 9217, Aug. 2014, San Diego, CA, USA.

- Feller, et al., Proceedings of the IEEE International Conference on Consumer Electronics, pp. 57-61, Sep. 2011, Berlin, DE.

- Kufa, et al., Proceedings of the 25th International Radioelektronika Conference, pp. 168-171, Apr. 2015, Pardubice, CZ.

- Uhrina, et al., 22nd Telecommunications Forum (TELFOR), pp. 905-908, Nov. 2014, Belgrade, RS.
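Part of what makes these comparisons hard to line up is that codecs are measured at different bitrates and quality points. One widely used way to summarize two rate-distortion curves as a single number is the Bjøntegaard delta rate (BD-rate): the average bitrate difference at equal quality over the PSNR range both curves cover. Below is a minimal Python sketch, assuming four (bitrate, PSNR) points per codec as in the classic method. Because four points determine a cubic exactly, it interpolates log-rate with a Lagrange polynomial and averages it with Simpson's rule (exact for cubics) instead of calling a curve-fitting library. The function names are hypothetical, and we are not claiming that any of the studies above used this exact procedure.

```python
import math
from typing import Sequence


def _lagrange(xs: Sequence[float], ys: Sequence[float], x: float) -> float:
    """Evaluate the unique polynomial through the points (xs[i], ys[i]) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def _mean_over(xs: Sequence[float], ys: Sequence[float], lo: float, hi: float) -> float:
    """Mean of the interpolating cubic on [lo, hi]; Simpson's rule is exact for cubics."""
    mid = 0.5 * (lo + hi)
    return (_lagrange(xs, ys, lo) + 4.0 * _lagrange(xs, ys, mid) + _lagrange(xs, ys, hi)) / 6.0


def bd_rate(
    rate_ref: Sequence[float], psnr_ref: Sequence[float],
    rate_test: Sequence[float], psnr_test: Sequence[float],
) -> float:
    """Average % bitrate change of 'test' vs 'ref' at equal PSNR (negative = test saves bits)."""
    if not (len(rate_ref) == len(psnr_ref) == len(rate_test) == len(psnr_test) == 4):
        raise ValueError("this sketch expects exactly four (rate, PSNR) points per codec")
    # Model log(rate) as a cubic function of PSNR for each codec.
    log_ref = [math.log(r) for r in rate_ref]
    log_test = [math.log(r) for r in rate_test]
    # Compare only over the PSNR range that both curves cover.
    lo = max(min(psnr_ref), min(psnr_test))
    hi = min(max(psnr_ref), max(psnr_test))
    if hi <= lo:
        raise ValueError("PSNR ranges do not overlap")
    avg_log_diff = _mean_over(psnr_test, log_test, lo, hi) - _mean_over(psnr_ref, log_ref, lo, hi)
    return (math.exp(avg_log_diff) - 1.0) * 100.0
```

For two codecs whose bitrates differ by a constant 10% at every quality point, `bd_rate` reports about -10, meaning the test codec needs roughly 10% fewer bits for the same PSNR. Results still depend heavily on content, encoder settings, and the quality metric, which is why published comparisons differ.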

An Alliance of Titans

At least in part in reaction to the HEVCAdvance license announcement in 2015, a collection of the largest media and Internet companies in the world announced that they were forming a group with the sole goal of helping develop a royalty-free video codec for use on the web. The Alliance for Open Media (AOM), founded by Google, Cisco, Amazon, Netflix, Intel, Microsoft, and Mozilla, aims to combine its members’ technological and legal strength to ensure the development of a next-generation compression technology that will be both superior in performance to HEVC and free of charge.

Several of the companies in AOM have already started work on a successor codec. Google has been steadily progressing on VP10, while Cisco has introduced a new codec called Thor. Mozilla, meanwhile, has long been working on a codec named Daala, which takes a drastically different approach to the standard Discrete Cosine Transform (DCT) algorithm used in most compression systems. Cisco, Google, and Mozilla have all stated that they would like to combine the best of their separate technologies into the next-generation codec envisioned by AOM. It is likely that this work will be done in the IETF NetVC working group, but not much has been decided as yet.
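For context on the transform that most codecs build on, and that Daala departs from, here is a minimal Python sketch of the orthonormal DCT-II applied to a small image block, first along rows and then along columns. It is written for clarity rather than speed; real codecs use fast integer approximations at several block sizes, and the function names here are hypothetical.

```python
import math
from typing import List, Sequence


def dct_ii(signal: Sequence[float]) -> List[float]:
    """Orthonormal 1-D DCT-II (direct O(n^2) form, for clarity)."""
    n = len(signal)
    coefficients = []
    for k in range(n):
        # Orthonormal scaling keeps the total energy of the input unchanged.
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(
            signal[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n)
        )
        coefficients.append(scale * total)
    return coefficients


def dct_2d(block: Sequence[Sequence[float]]) -> List[List[float]]:
    """Separable 2-D DCT: transform every row, then every column."""
    rows = [dct_ii(row) for row in block]
    columns = [dct_ii(column) for column in zip(*rows)]
    return [list(row) for row in zip(*columns)]  # transpose back to row-major


flat = [[10.0] * 4 for _ in range(4)]
coefficients = dct_2d(flat)
```

A flat 4x4 block of value 10 transforms to a single significant coefficient of 40 in the top-left (DC) position, with the rest zero up to floating-point rounding. That energy compaction is why smooth regions compress so well after a DCT: most coefficients quantize to zero.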

The Future is Free?

We can’t state this with complete confidence, but it seems clear that the confusing landscape of IP-encumbered video codecs is driving the industry toward a future in which digital video, the predominant source of traffic on the internet, may truly be free from patent royalties.

Greg Rutz is a Lead Architect in the Advanced Technology Group at CableLabs.

Special thanks to Dr. Arianne Hinds, Principal Architect at CableLabs, and Jud Cary, Deputy General Counsel at CableLabs for their contributions to this article.

Consumer

Gadgets Galore in our Comfortable Living Room Lab

Someone once said, “Seeing is Believing.” At CableLabs, we believe that “Doing is Knowing.” With this in mind, we crafted a space in our Sunnyvale, CA facility that we call the Moveable Experience Lab (aka “MEL”).

The MEL simulates a consumer living room. The space has been intentionally designed as a “safe place” to frame your own ideas and opinions about the experiences influencing consumers’ habits today.

Everything in the lab is meant to be touched, used, and experimented with, similar to a Discovery Museum for adults. Haven’t tried Oculus Rift, VR, or 360-degree content? We’ve got it. How about the Amazon Echo? We’ve got it. HBO GO, Xfinity 1, Google Glass, smart watches, and UHD TVs…check, check, check, and check. The good news? Unlike at crowded conference booths or company demos, visitors are not rushed to take their turn or shown only a limited aspect of the experience. Visitors are encouraged to spend as much time as they like with any technology that interests them, so that they can get a full sense of the big “wow” associated with each of these consumer tech experiences. Further, CableLabs personnel are frequently on hand to offer their own insights, or just to converse about the technology, without the pressure of press or others waiting for you to form an “official” opinion. Sometimes just talking about what you’ve observed helps the learning stick, or inspires new ideas about new possibilities.

Right now, MEL contains several experience exhibits:

Battle for the Living Room

Experiences for the Sony 65” UHD Display

- Comcast: Xfinity 1 with Voice Remote - demonstrating personalization (guide preference), recommendations, and voice navigation via Comcast Voice Remote.

- AppleTV: Demonstrating über-aggregation and an ultra-simplified remote.

- Amazon Fire TV: Features an elegant voice remote and a categorized discovery guide (with recommendations based on viewing habits).

- PlayStation 4 (with two game controllers) featuring PlayStation Now: An ecosystem for games, movies, music, etc.

The Evolution of 3D Experiences in Head Mounted Displays/Mobile Devices

This exhibit shows the progression of virtual reality in the head-mounted display form factor. This is probably one of the fastest-growing areas of technology and, by all counts, is poised to become a significant consumer trend.

- Google Cardboard (used with any smartphone): Google Cardboard was handed out free last year at Google I/O as an example of a low-cost system to encourage interest and development in VR and VR applications.

- Google Glass: 2nd Generation: The last beta generation of Google Glass. Google Glass was a large-scale experiment meant to “free people from looking down at their cell phones — and look up.”

- Oculus Rift (version 2) (non-functional): Representation of the state of development in 2012.

- Samsung Gear VR low-cost headset (runs on an Android phone): Demonstrates the growing number of 3D-interactive titles and games as well as 360-degree content. As a $99 accessory to a smartphone, this product is expected to be the tipping point in bringing virtual reality experiences to the masses.

Evolution of the Smart Watch

As in the mobile OS battles, Apple and Google are set to compete for the wrists of consumers, and for lifestyle brand status. Visitors to the MEL can gain a sense of how quickly the wearable market has been populated and how fast it is evolving.

- 2012 - MetaWatch: First smartwatch of the latest wave of smartwatches

- 2013-14 - Android Wear watches (built from the same SDK, different brand UX): Require an Android phone to work. Smart watches with apps, tracking, mobile notifications, and a marketplace of 4,000+ apps.

- Motorola 360 – Round Watch: First round interface (note the UX)

- Samsung Gear: Samsung’s interpretation of the Android Smartwatch SDK

- 2015 - Apple Watch (requires iPhone to work): A watch aimed at the luxury experience, featuring a high-end display and materials, Apple Pay, and 8,500+ apps.

Tablet Experiences (Apps)

- Watchable: Comcast’s new cross-platform video service that curates a selection of the best content from popular online video networks and shows in an easy-to-use experience, with no need for a cable subscription. It specifically curates digital content intended to appeal to younger audiences than standard cable does.

- HBONow: OTT viewing experience – available to anyone who wants to watch HBO on a mobile device.

- HBOGo: OTT Viewing experience – available to HBO’s premium cable subscribers.

- Xfinity TV Remote: If you’d rather not use the remote control and your tablet is handier, there’s an app for that!

- Unstaged: American Express – 360 experiences

We believe that having access to a space like MEL helps CableLabs employees form opinions on consumer technology that are based on facts as well as hands-on experience with the real products. These experiences help ground our insights and set the stage for innovation beyond what is already on the market.

The next time you are in Sunnyvale, make some time to stop by and experience MEL. No appointment is necessary, but be forewarned…sudden attacks of binge-watching have been known to afflict visitors and you might just be inspired to create a product on your own!

Mickie Calkins is the Director of Co-Innovation & Prototyping in the Strategy & Innovation Group at CableLabs.

Video

Active Story: How Virtual Reality is Changing Narratives

I love story; it’s why I got into filmmaking. I love the archetypal form of the written story, the many compositional techniques to achieve a visual story, and using layers of association to tell story with sound. But most of all, I love story because of its capacity to teach. Telling a story to teach an idea, it has been argued, is the most fundamental technology for social progress.

When the first filmmakers were experimenting with shooting a story, they couldn’t foresee the many creative camera angles used today; their films more closely resembled watching a play. After decades of experimentation, filmmakers discovered more and more about what they could do with the camera, lighting, editing, sound, and special effects, and how a story could be better told as a result. Through much trial and error, film matured into its own discipline.

Like film over a century ago, VR is facing unknown territory and needs creative vision balanced with hard testing. If VR is to be used as a platform for story, it’s going to have to overcome some major problems. As a recent cover story from Time reported, gaming and cinema story practices don’t work in virtual reality.

VR technology is beckoning storytellers to evolve, but there’s one fundamental problem standing in the way: the audience is now in control of the camera. When the viewer is given this power, as James Cameron points out, the experience becomes a gaming environment, which calls for a different method to create story.

Cameron argues that cinematic storytelling can’t work in VR: it’s simply a gaming environment, with the goggles replacing the POV camera and joystick. He’s correct, and as long as the technology stays that way, VR story will be no different from gaming. But there’s an elephant in the room that Cameron is not considering: the VR wearable is smart. The wearable is much more than just a camera and joystick; it’s also a device that records what the viewer is doing and experiencing. And this changes everything.

If storytelling is going to work in VR, creatives need to make a radical paradigm shift in how story is approached. VisionaryVR has come up with a partial solution, using smart zones in the VR world to guide viewing and control the playback of content, but the story itself is still in its old form. In that case, there isn’t a compelling enough reason to move from the TV to the headset. But they’re on the right track.

Enter artificial intelligence.

The Institute for Creative Technologies (ICT) at USC has produced a prototype of a virtual conversation with Holocaust survivor Pinchas Gutter. To populate the AI, Pinchas was asked two thousand questions about his experiences. His answers were filmed on the ICT LightStage, which also recorded a full 3D video of him. The final product, although not perfect, feels like an authentic discussion with Pinchas about his experience in the Holocaust. ICT has used a similar “virtual therapist” to treat soldiers with PTSD, and when the technology was used alongside real treatment, they achieved an 80% recovery rate, which is incredible. As AI applications, these examples are very compelling use cases.

The near future of VR will include character scripting using this natural-language technology. Viewers will no longer just watch characters; they will interact and talk with them. DreamWorks could script Shrek with five thousand answers about what it’s like when people make fun of him, and when kids use it, parents will be better informed about how to talk to their kids. Perhaps twenty leading minds will contribute to the natural-language scripting of a single character on a single topic. That’s an exciting proposition, but how does it help story work in VR?

Gathering speech, behavioral, and emotive analytics from the viewer, and applying AI responses to it, is an approach that turns story on its head. The producer, instead of controlling the story from beginning to end, follows the lead of the viewer. As the viewer reveals an area of interest, the media responds in a way that’s appropriate to that story. This might sound like gaming, but it’s different when dealing with authentic, human experience. Shooting zombies is entertaining on the couch, but in the VR world, the response is to flee. If VR story is going to succeed, it must be built on top of real world, genuine experience. Working out how to do this, I believe, is the paradigm shift of story that VR is calling for.

If this is the future for VR, it will entirely change how media is produced, and a new era of story will develop. I’m proposing the term “Active Story” as a name for this approach to VR narratives. With this blog, I’ll be expanding on this topic as VR is produced and tested at CableLabs and as our findings reveal new insights.

Eric Klassen is an Associate Media Engineer in the Client Application Technologies group at CableLabs.

Consumer

Who Will Win The Race For Virtual Reality Content?

This is the second of a series of posts on Virtual Reality technology by Steve and others at CableLabs. You can find the first post here.

In a recent study at CableLabs, we asked what would stop people from buying a virtual reality headset. High on the list was the availability of content. Setting aside VR gaming, people didn’t want to spend money on a device that only had a few (or a few hundred) pieces of content. So there has to be lots. Who is creating content, and how quickly will it show up?

Leave it to the Professionals?

Three companies have emerged as leaders in the space of professional content production:

- JauntVR, which just secured another $66M investment

- Immersive Media, which worked on the Emmy award-winning Amex Unstaged: Taylor Swift Experience

- NextVR, which seemingly wants to become the “Netflix of VR.”

Each company is developing content with top-tier talent, each working to establish brand equity and to develop critical intellectual property. Another thing they have in common is that they have developed their own cameras to capture the content, yet they all say they are not in the business of building cameras.

JauntVR Neo Camera

The new Jaunt Neo camera seems to have 16 sensors around its periphery, which we might expect to yield a 16K x 8K resolution. This is far in excess of what the Samsung GearVR will decode and display (limited to 4K x 2K at 30fps, or lower resolution at 60fps), but it shows the quality of experience we might expect in the future. The NextVR camera uses six 4K RED professional cameras, each of which is a rather expensive proposition before you even buy lenses.

But there seems to be a problem of scaling up. To get to thousands of pieces of professional content, we will have to wait years for these three companies, and any new entrants, to scale up their staff and equipment and to take on and produce enough interesting projects.

Or will consumer-generated content win the day?

On the flip side, we have seen the rate at which regular video is created and uploaded to YouTube (300 hours uploaded per minute). All we need is for affordable consumer-grade 360° cameras to show up. The first ones are already here, though their resolution leaves something to be desired. Brand-name cameras like the Ricoh Theta and the Kodak SP360 are affordable consumer-grade 360° cameras at around $300, but they only shoot HD video. When the 1920 horizontal pixels of HD video are stretched around the full horizon, the headset shows only the slice you are facing: less than a quarter of them, or around 380 pixels. Most of the content we used in consumer testing was 4000x2000 pixels, and even that looked fairly low resolution. Some startups are entering the scene (like Bublcam and Giroptic), but their cameras are not shipping yet and don’t go beyond HD video for the full sphere of what you see. More interesting in terms of quality are cameras coming from Sphericam and Nokia that promise 4K video at 60 frames per second, and an updated Kodak camera that supports 4K. These cameras go a little beyond what the Samsung GearVR headset can decode (4K at 30 fps), but they also sit above the consumer price point, entering the market at $1500. Oddly missing from the consumer arena is GoPro, but I think we can reasonably predict a consumer-grade 360° camera from them in late 2015 or early 2016 that is more manageable than the 16-GoPro Google Jump camera or the 6-camera rigs that hobbyists have been using to create the early content.
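The pixel arithmetic above is a simple back-of-the-envelope calculation, sketched below. It assumes that the equirectangular frame spreads its width evenly over 360° of horizon and that the headset shows a fixed horizontal field of view. The roughly 71° field of view is not a headset specification. It is only the value that the 380-pixel estimate implies.

```python
def pixels_in_view(equirect_width: int, fov_deg: float) -> float:
    """Horizontal pixels visible at once when a 360-degree equirectangular
    frame of this width is viewed with the given horizontal field of view
    (linear approximation; assumes pixels are spread evenly over 360 degrees)."""
    return equirect_width * fov_deg / 360.0


def implied_fov(equirect_width: int, visible_px: float) -> float:
    """Inverse of pixels_in_view: the field of view a stated pixel count implies."""
    return visible_px * 360.0 / equirect_width


fov = implied_fov(1920, 380)           # about 71 degrees, implied by the estimate
hd_view = pixels_in_view(1920, fov)    # the ~380 px quoted for HD video
uhd_view = pixels_in_view(4000, fov)   # roughly 790 px for 4000x2000 test content
print(f"FOV ~{fov:.1f} deg, HD: {hd_view:.0f} px, 4000-wide: {uhd_view:.0f} px")
```

Even the 4000-pixel-wide content leaves well under 1000 pixels across the view. This is consistent with the observation that it still looked fairly low resolution.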

YouTube and Facebook 360 are already here

Facebook launched support for 360° videos in timelines just last week. YouTube launched 360° support in March, and in six months it already has about 14,000 spherical videos, even with few mainstream cameras available. I strongly suspect that user-generated content will massively outnumber professionally produced 360° content. Our studies suggested that while consumers guessed they would be willing to wear a VR headset for 1-2 hours, they would favor short-form content. This matches the predominant YouTube content length well. While Samsung has created its own video browsing app (MilkVR), a YouTubeVR application is sure to emerge soon, allowing us all to spend hours consuming 360° videos in a VR headset.

What Content Appeals?

Shooting interesting 360° content is hard. There is nowhere to hide the camera operator. Everything is visible, whereas traditional video production focuses on a “stage” that the director populates with the action he or she wants you to see. We had a strong inkling that live sports and live music concerts would be compelling types of content, and might be experiences people would value enough to pay for. We tried several different types of content when we tested VR with consumers, trying to cover the bases of sports, music, travel, and “storytelling” (fiction in short or long form). We were surprised to see that the travel content we chose was the clear winner on a VR headset, leaving all other content categories in the dust. We gained lots of insights into the difficulty of producing good VR video content (like not moving the camera, and not doing frequent scene cuts), and the difficulty of creating great storytelling content that really leverages the 360° medium. Let’s just say I am not expecting vast libraries of professionally produced 360° movies any time soon.

The ingenuity of the population in turning YouTube into the video library of everything is likely to play out here too, with lots of experimentation and new types of content becoming popular. The general public, through trying lots of different things, is most likely to find the answers for us. For that to happen, mass adoption of VR headsets has to take place, and consumer 360° cameras have to become cheap and desirable. Samsung announced the “general public” version of its headset at Oculus Connect 2 last week, at a price point of $99 over the cost of its flagship phones. Oculus expects to ship over 1 million headsets in its first year of retail availability. Cameras are already out there, inexpensive, and getting better. In 12 months I expect over 100,000 360° videos to be published on YouTube.

Is the killer app for VR… TV?

Netflix just launched its app on the GearVR. Here at my desk I can watch movies and TV episodes on a VR headset, sitting in a ski lodge with views of ski runs out the window. I’m sitting in front of a (virtual) 105” TV, enjoying an episode of Sherlock. The screen quality is pretty grainy, but I quickly forget that and become engrossed in the episode, until my boss taps me on the shoulder and tells me to get back to work. I thought this was a pretty dumb use case for the technology, but I was wrong. Dead wrong. The only problem is that I can’t see the popcorn to pick it up, and then I have to get it into a mouth I can’t see. There is lots to ponder here.

Steve Glennon is a Principal Architect in the Advanced Technology Group at CableLabs.

Consumer

Is Virtual Reality the Next Big Thing?

In the Advanced Technology Group at CableLabs I get to play with all the best toys, trying to work out what is a challenge or an opportunity, and what is a flop waiting to be unwrapped. 3D is a great case study. I first got to work with 3D TV in 1995 (in a prior life), with an early set of shutter glasses and a PC display. Back then it faced the chicken-and-egg problem: no TVs and no content. Which would come first?

I still remember the first 3D video I saw in 1995. It was high quality, high frame rate, and beautiful, but not compelling. I still didn’t find it compelling in 2010 when the 3D TV/content logjam was broken.

I remember the first time I saw Virtual Reality video at CES in January 2014. My reaction was starkly different. The first VR video I saw was grainy, blurry, and a little jerky. And it was incredibly compelling. I was hooked.

You can’t talk about VR - you have to experience it

Lots of people talk about VR - they “get it” in their heads. You see video playing, and you can look all around. And you can look stupid with a headset on. But you don’t really get it until you experience some good content in a good headset.

A description doesn’t convey what it feels like to see elephants up close. Everyone loves elephants, and with a headset you feel like you are meeting one for the first time, in its natural habitat.

Nor does it convey the reflex of stepping back to avoid getting your feet wet in icy cold Icelandic water.

So we have been doing a couple of things. We have been showing the headsets to people at the CableLabs conferences, where we got almost half the attendees to try on a headset and experience it first hand. We also wanted to put some market research behind it, so we designed some consumer panels to get regular consumer feedback across a representative demographic cross-section.

VR Is Only For Gamers. Or Not.

A lot of the early focus for the Oculus Rift (and Crystal Cove) headset has been on gamers and gaming. It is the perfect add-on for the gamer: every frame is rendered in real time, so instead of “looking around” on a PC screen, you simply look around. I had the pleasure of getting a tour and demo of the HTC Vive headset at Valve’s headquarters in Bellevue, where you can walk around in addition to looking around. It is the closest thing to a Holodeck that I have ever experienced. I think we can reasonably expect these to go mainstream within the gaming community.

But the consumption of 360° video matters much more to cable, as Phil McKinney mentioned in a Variety interview, because of the high bandwidth needed to deliver the content. We’ll look at that in more detail in a future blog post.

Rather than use our own tech-geek, early-adopter attitudes to evaluate this, we wanted to get a representative sample of the general population and ask their opinions. So that’s what we did. With some excellent 360° video content from Immersive Media, a former Innovation Showcase participant, we asked regular people with regular jobs: teachers, nurses, students, physical therapists, personal trainers, and a roadie. We tried to come up with some different display technologies to compare against, and showed them the Samsung GearVR with the content. Here’s what they told us:

57% “Had to have it”. 88% could see themselves using a head-mounted display within 3 years. Only 11% considered the headset to be either uncomfortable or very uncomfortable. 96% of those who were cost sensitive would consider a purchase at a $200 price point (the GearVR is a $200 add-on to the Samsung Galaxy Note 4 or Galaxy S6 phone).

So this seems overwhelmingly positive. There is the novelty factor to take into account, but we were surprised by how few expressed any discomfort and how positively regular people described the experience.

“VR is the most Important Development since the iPhone”

I had the distinct pleasure of spending time on stage with Robert Scoble during his keynote at the recent CableLabs Summer Conference. We discussed the state of the art in head-mounted displays, 360° cameras, and content (we’ll talk more about that in later blog posts), and he expressed this sentiment (paraphrased): “Virtual reality is the most important technology development since the original iPhone”. I hadn’t thought about it that way, and now I agree with him. This is not just hot and sexy, a passing fad. It has massive potential to transform lots of what we do, and we can all expect incredible developments in this space.

I want to meet Palmer Luckey

Palmer Luckey created his first prototype head-mounted display at age 18. Four years later he sold Oculus to Facebook for $2 billion, having dropped out of school to build more prototypes. I didn’t see the Facebook deal coming, and didn’t understand it. Now I get it. I want to meet him and thank him for transforming our lives in ways we cannot imagine. We just haven’t witnessed it yet. We will explore more in the next few posts.

Steve Glennon is a Principal Architect in the Advanced Technology Group at CableLabs.

Technical Blog

Content Creation Demystified: Open Source to the Rescue

There are four main concepts involved in securing premium audio/visual content:

- Authentication

- Authorization

- Encryption

- Digital Rights Management (DRM)

Authentication is the act of verifying the identity of the individual requesting playback (e.g. username/password). Authorization involves verifying that the individual has the right to view that content (e.g. subscription, rental). Encryption is the scrambling of audio/video samples; decryption keys (licenses) must be acquired to enable playback on a device. Finally, DRM systems are responsible for the secure exchange of encryption keys and for associating various “rights” with those keys. Digital rights may include things such as:

- Limited time period (expiration)

- Supported playback devices

- Output restrictions (e.g. HDMI, HDCP)

- Ability to make copies of downloaded content

- Limited number of simultaneous views
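The sketch below shows how rights like these could be checked together before playback is allowed. It is a hypothetical model, not any DRM system’s actual license format. In a real deployment the license server and the DRM client enforce these rules, and every class, field and output name here is invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class LicenseRights:
    """Hypothetical rights attached to one content key."""
    expires_at: datetime          # limited time period (expiration)
    allowed_devices: frozenset    # supported playback device classes
    allowed_outputs: frozenset    # e.g. "internal", "HDMI+HDCP"
    max_simultaneous_views: int   # concurrent-stream limit
    copies_allowed: bool = False  # may downloaded content be copied?


def may_play(rights: LicenseRights, device: str, output: str,
             active_views: int, now: datetime) -> bool:
    """Allow playback only if every playback-related right is satisfied."""
    return (
        now < rights.expires_at
        and device in rights.allowed_devices
        and output in rights.allowed_outputs
        and active_views < rights.max_simultaneous_views
    )


rental = LicenseRights(
    expires_at=datetime(2030, 1, 1, tzinfo=timezone.utc),
    allowed_devices=frozenset({"tv", "tablet"}),
    allowed_outputs=frozenset({"internal", "HDMI+HDCP"}),
    max_simultaneous_views=2,
)
now = datetime(2029, 6, 1, tzinfo=timezone.utc)
print(may_play(rental, "tv", "HDMI+HDCP", active_views=1, now=now))  # True
print(may_play(rental, "tv", "HDMI", active_views=1, now=now))       # False: unprotected output
```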

This article will focus on encryption and DRM and describe how open source software can be used to create protected, adaptive bitrate test content for evaluating web browsers and player applications. I will close by describing the steps for creating protected streams.

One Encryption to Rule Them All

Encryption is the process of applying a reversible mathematical operation to a block of data to disguise its contents. Numerous encryption algorithms have been developed, each with varying levels of security, inputs and outputs, and computational complexity. In most systems, a random sequence of bytes (the key) is combined with the original data (the plaintext) using the encryption algorithm to produce the scrambled version of the data (the ciphertext). In symmetric encryption systems, the same key used to encrypt the data is also used for decryption. In asymmetric systems, keys come in pairs; one key is used to encrypt or decrypt data while its mathematically related sibling is used to perform the inverse operation. For the purposes of protecting digital media, we will be focusing solely on symmetric key systems.
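The symmetric property can be illustrated with counter (CTR) mode, one of the two modes Common Encryption names. CTR mode encrypts successive counter blocks to produce a keystream and XORs that keystream with the data, so applying the same operation again with the same key reverses it. In this sketch the counter block is an 8-byte IV followed by a 64-bit block counter, which is our understanding of the Common Encryption layout for 8-byte IVs. Python’s standard library has no AES, so a keyed SHA-256 stands in for the block cipher. **That stand-in is an assumption for illustration only; it is not AES and is not secure.**

```python
import hashlib
import os


def toy_block_encrypt(key: bytes, block: bytes) -> bytes:
    """STAND-IN for AES-128 (NOT AES, NOT secure): keyed SHA-256 truncated
    to 16 bytes. CTR mode only uses the forward direction of the cipher."""
    return hashlib.sha256(key + block).digest()[:16]


def ctr_transform(key: bytes, iv8: bytes, data: bytes) -> bytes:
    """CTR mode: XOR data with a keystream made by encrypting counter blocks
    (8-byte IV || 64-bit big-endian block counter). Encrypts and decrypts."""
    out = bytearray()
    for block_index in range((len(data) + 15) // 16):
        counter_block = iv8 + block_index.to_bytes(8, "big")
        keystream = toy_block_encrypt(key, counter_block)
        chunk = data[block_index * 16:(block_index + 1) * 16]
        out.extend(b ^ k for b, k in zip(chunk, keystream))  # last chunk may be short
    return bytes(out)


key = os.urandom(16)   # the shared (symmetric) key
iv = os.urandom(8)     # per-sample initialization vector
sample = b"example audio/video sample bytes"
encrypted = ctr_transform(key, iv, sample)
print(ctr_transform(key, iv, encrypted) == sample)   # True: the same key decrypts
```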

With so many encryption methods to choose from, one could reasonably expect that web browsers would only support a small subset. If the sets of algorithms implemented in different browsers do not overlap, multiple copies of the media would have to be stored on streaming servers to ensure successful playback on most devices. This is where the ISO Common Encryption standards come in. These specifications identify a single encryption algorithm (AES-128) and two block cipher modes (CTR, CBC) that all encryption and decryption engines must support. Metadata in the stream describes what keys and initialization vectors are required to decrypt media sample data. Common Encryption also supports the concept of “subsample encryption”. In this case, portions of each sample (for example, video NAL unit headers) are left unencrypted, and Common Encryption metadata records, for each subsample, how many bytes are in the clear and how many are encrypted.
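Subsample metadata consists of (clear bytes, protected bytes) pairs, which ISO/IEC 23001-7 calls BytesOfClearData and BytesOfProtectedData. The sketch below turns those pairs into the byte ranges a decryptor must actually process within one sample. It is an illustration of the bookkeeping, not a parser for the real `senc` box.

```python
def protected_ranges(sample_size: int,
                     subsamples: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Given (clear_bytes, protected_bytes) pairs for one sample, return the
    [start, end) byte ranges that are encrypted. Raises ValueError if the
    pairs do not add up to the sample size."""
    ranges = []
    offset = 0
    for clear, protected in subsamples:
        offset += clear                          # skip bytes left in the clear
        if protected:
            ranges.append((offset, offset + protected))
        offset += protected
    if offset != sample_size:
        raise ValueError(f"subsamples cover {offset} bytes, sample has {sample_size}")
    return ranges


# A 1000-byte video sample made of two NAL units, each with a 5-byte clear header.
print(protected_ranges(1000, [(5, 495), (5, 495)]))   # [(5, 500), (505, 1000)]
```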

In addition to encryption-related metadata, Common Encryption defines the Protection System Specific Header (PSSH). This bit of metadata is an ISO BMFF box containing data proprietary to a particular DRM, which guides that DRM system in retrieving the keys needed to decrypt the media samples. Each PSSH box contains a “system ID” field that uniquely identifies the DRM system to which the contained data applies. Multiple PSSH boxes may appear in a media file, indicating support for multiple DRM systems. Hence the magic of Common Encryption: a standard encryption scheme and multi-DRM support for retrieving decryption keys, all within a single copy of the media.
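The sketch below serializes a `pssh` box following the ISO/IEC 23001-7 layout. The layout is a 32-bit size, the `pssh` type, a version byte and 24 bits of flags, the 16-byte SystemID, a key ID list (version 1 only), and then the length-prefixed DRM-specific data. The two system IDs are the registered Widevine and PlayReady values. The payload bytes in the usage example are placeholders, because real payloads are defined by each DRM vendor.

```python
import struct
import uuid

# Registered DRM system IDs (as listed by DASH-IF).
WIDEVINE_SYSTEM_ID = uuid.UUID("edef8ba9-79d6-4ace-a3c8-27dcd51d21ed")
PLAYREADY_SYSTEM_ID = uuid.UUID("9a04f079-9840-4286-ab92-e65be0885f95")


def build_pssh(system_id: uuid.UUID, data: bytes, kids=None) -> bytes:
    """Serialize one 'pssh' box. Passing key IDs produces a version-1 box."""
    version = 1 if kids else 0
    body = struct.pack(">B3s", version, b"\x00\x00\x00") + system_id.bytes
    if version == 1:
        body += struct.pack(">I", len(kids)) + b"".join(k.bytes for k in kids)
    body += struct.pack(">I", len(data)) + data      # DataSize + opaque DRM data
    return struct.pack(">I4s", 8 + len(body), b"pssh") + body


# Multi-DRM: boxes for several systems can simply be placed one after another.
kid = uuid.UUID(int=1)   # hypothetical key ID
init_data = (build_pssh(WIDEVINE_SYSTEM_ID, b"<widevine-specific data>")
             + build_pssh(PLAYREADY_SYSTEM_ID, b"<playready-specific data>", kids=[kid]))
print(len(init_data))
```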

The main Common Encryption standard (ISO 23001-7) specifies metadata for use in ISO BMFF media containers. ISO 23001-9 defines Common Encryption for the MPEG Transport Stream container. In many cases, the box structures defined in the main specification are simply inserted into MPEG TS packets in an effort to avoid introducing completely new structures that essentially hold the same data.

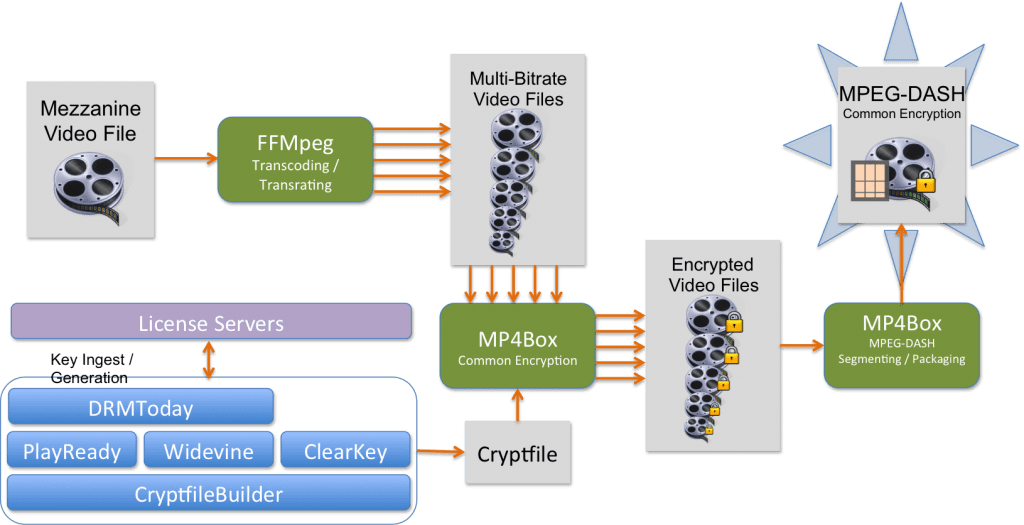

Creating Protected Adaptive Bitrate Content

CableLabs has developed a process for creating encrypted MPEG-DASH adaptive bitrate media involving some custom software combined with existing open source tools. The following sections will introduce the software and go through the process of creating protected streams. These tools and some accompanying documentation are available on GitHub. A copy of the documentation is also hosted here.

Adaptive Bitrate Transcoding

The first step in the process is to transcode source media files into several lower-bitrate versions. This can mean simply reducing the bitrate, but in most cases the resolution should be lowered as well. To accomplish this, we use the popular FFmpeg suite of utilities. FFmpeg is a multi-purpose audio/video recorder, converter, and streaming library with dozens of supported formats. The FFmpeg installation will need to have the x264 and fdk_aac codec libraries enabled. If suitable binaries are not available, FFmpeg can be built from source with those libraries enabled.

CableLabs has provided an example script that can be used as a guide to generating multi-bitrate content. There are some important items in this script that should be noted.

One of the jobs of an ABR packager is to split the source stream up into “segments.” These segments are usually between 2 and 10 seconds in duration and are in frame-accurate alignment across all the bitrate representations of media. For bitrate switching to appear seamless to the user, the player must be able to switch between the different bitrate streams at any segment boundary and be assured that the video decoder will be able to accept the data. To ensure that the packager can split the stream at regular boundaries, we need to make sure that our transcoder is inserting I-Frames (video frames that have no dependencies on other frames) at regular intervals during the transcoding process. The following arguments to x264 in the script accomplish that task:

-x264opts "keyint=$framerate:min-keyint=$framerate:no-scenecut"

We use the framerate detected in the source media to instruct the encoder to insert a new I-Frame once every second. Assuming our packager will segment using an integral number of seconds, the stream will be properly conditioned. The no-scenecut argument tells the encoder not to insert extra I-Frames when it detects a scene change in the source material. We detect the framerate of the source video using the ffprobe utility that is part of FFmpeg.

framerate=$((`./ffprobe $1 -select_streams v -show_entries stream=avg_frame_rate -v quiet -of csv="p=0"`))
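ffprobe reports `avg_frame_rate` as a rational such as `30000/1001`. Bash’s `$(( ))` evaluates that with integer division, giving 29, so for 29.97 fps sources I-frames land every 29 frames (about 0.97 s) rather than once per second. Every representation uses the same keyint, so the renditions remain aligned with each other. The sketch below parses the rational exactly, rounds to the nearest whole-second GOP, and checks when fixed-GOP I-frames coincide with segment boundaries. The function names are illustrative, and the alignment check assumes an integer frame rate.

```python
from fractions import Fraction


def parse_frame_rate(avg_frame_rate: str) -> Fraction:
    """Parse ffprobe's avg_frame_rate (e.g. "30000/1001" or "25/1")."""
    text = avg_frame_rate.strip()
    if text in ("", "0/0"):                 # ffprobe may report 0/0 when unknown
        raise ValueError("ffprobe did not report a usable frame rate")
    return Fraction(text)


def gop_for_one_second(avg_frame_rate: str) -> int:
    """Nearest whole number of frames to one second of video."""
    return round(parse_frame_rate(avg_frame_rate))


def boundaries_on_keyframes(fps: int, keyint: int, segment_seconds: int) -> bool:
    """Fixed GOP (keyint == min-keyint, no scenecut): a boundary every
    segment_seconds lands on an I-frame iff the segment's frame count is a
    multiple of keyint."""
    return (fps * segment_seconds) % keyint == 0


rate = "30000/1001"
num, den = map(int, rate.split("/"))
print(num // den)                          # 29, what bash's $(( 30000/1001 )) yields
print(gop_for_one_second(rate))            # 30
print(boundaries_on_keyframes(30, 30, 2))  # True: keyint equals the frame rate
print(boundaries_on_keyframes(30, 48, 2))  # False: 60-frame segments vs 48-frame GOPs
```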

At the bottom of the script, we see the commands that perform the transcoding using bitrates, resolutions, and codec profile/level selections that we require.

transcode_video "360k" "512:288" "main" 30 $2 $1
transcode_video "620k" "704:396" "main" 30 $2 $1
transcode_video "1340k" "896:504" "high" 31 $2 $1
transcode_video "2500k" "1280:720" "high" 32 $2 $1
transcode_video "4500k" "1920:1080" "high" 40 $2 $1
transcode_audio "128k" $2 $1
transcode_audio "192k" $2 $1

For example, the first video representation is 360kb/s with a resolution of 512x288 pixels using AVC Main Profile, Level 3.0. Also note that the script transcodes audio and video separately, because the DASH-IF guidelines forbid multiplexed audio/video segments (see section 3.2.1 of the DASH-IF Interoperability Points v3.0 for DASH AVC/264).
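The ladder can be treated as data, which makes it easy to describe and sanity-check. The sketch below reads the last numeric argument as the level multiplied by 10 (so 30 becomes Level 3.0), as the example above indicates. It also confirms that every rendition keeps the same 16:9 aspect ratio. The `Rendition` type is a hypothetical helper, not part of the CableLabs script.

```python
from fractions import Fraction
from typing import NamedTuple


class Rendition(NamedTuple):
    bitrate: str   # e.g. "360k"
    size: str      # "width:height", as passed to the scaler
    profile: str   # AVC profile name
    level: int     # level x 10, e.g. 30 -> Level 3.0


LADDER = [
    Rendition("360k", "512:288", "main", 30),
    Rendition("620k", "704:396", "main", 30),
    Rendition("1340k", "896:504", "high", 31),
    Rendition("2500k", "1280:720", "high", 32),
    Rendition("4500k", "1920:1080", "high", 40),
]


def describe(r: Rendition) -> str:
    width, height = (int(v) for v in r.size.split(":"))
    kbps = int(r.bitrate.rstrip("k"))
    return (f"{kbps} kb/s, {width}x{height}, AVC {r.profile.title()} Profile, "
            f"Level {r.level // 10}.{r.level % 10}")


def aspect_ratios(ladder) -> set:
    """Distinct aspect ratios in the ladder (ideally exactly one)."""
    return {Fraction(*(int(v) for v in r.size.split(":"))) for r in ladder}


for r in LADDER:
    print(describe(r))
print(aspect_ratios(LADDER))   # {Fraction(16, 9)}
```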

Encryption

Next, we must encrypt the video and/or audio representation files that we created. For this, we use the MP4Box utility from GPAC. MP4Box is perfect for this task because it supports the Common Encryption standard and is highly customizable. Not only will it perform AES-128 CTR or CBC mode encryption, but it can also do subsample encryption and insert multiple user-specified PSSH boxes into the output media file.

To configure MP4Box for performing Common Encryption, the user creates a “cryptfile”: an XML-based description of the encryption parameters and PSSH boxes.

The top-level <GPACDRM> element indicates that we are performing AES-128 CTR mode Common Encryption. The <DRMInfo> elements describe the PSSH boxes we would like to include. Finally, a single <CrypTrack> element is specified for each track in the source media we would like to encrypt. Multiple encryption keys may be specified for each track, in which case the cryptfile also indicates how many samples to encrypt with each key before moving to the next key in the list.
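As a rough illustration, the sketch below generates a minimal cryptfile for one track and one key using Python’s ElementTree. It omits the `<DRMInfo>` (PSSH) entries. The attribute names (`trackID`, `IsEncrypted`, `IV_size`, `first_IV`, `saiSavedBox`, and `KID`/`value` on `<key>`) follow GPAC’s sample cryptfiles as we recall them and should be checked against the documentation for your MP4Box version. This is not CableLabs’ Java CryptfileBuilder.

```python
import secrets
import xml.etree.ElementTree as ET


def build_cryptfile(track_id: int, kid_hex: str, key_hex: str, iv_hex: str) -> str:
    """Minimal AES-128 CTR cryptfile for one track and one key (no PSSH)."""
    if len(kid_hex) != 32 or len(key_hex) != 32:
        raise ValueError("KID and key must be 16 bytes (32 hex digits)")
    if len(iv_hex) not in (16, 32):
        raise ValueError("IV must be 8 or 16 bytes")
    root = ET.Element("GPACDRM", type="CENC AES-CTR")
    track = ET.SubElement(
        root, "CrypTrack",
        trackID=str(track_id),
        IsEncrypted="1",
        IV_size=str(len(iv_hex) // 2),   # IV length in bytes
        first_IV="0x" + iv_hex,
        saiSavedBox="senc",              # where per-sample IVs/subsamples are stored
    )
    ET.SubElement(track, "key", KID="0x" + kid_hex, value="0x" + key_hex)
    return ET.tostring(root, encoding="unicode")


# Random test values; a real workflow gets keys from (or registers them with) a license server.
print(build_cryptfile(1, secrets.token_hex(16), secrets.token_hex(16), secrets.token_hex(8)))
```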

Since PSSH boxes are really just containers for arbitrary data, MP4Box has defined a set of XML elements specifically to define bitstreams in the <DRMInfo> nodes. Please see the MP4Box site for a complete description of the bitstream description syntax.

Once the cryptfile has been generated, you simply pass it as an argument on the command line to MP4Box. We have created a simple script to help encrypt multiple files at once (since you will most likely be encrypting every bitrate representation file of your ABR media).
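The idea behind such a batch script can be sketched in a few lines that build one `MP4Box -crypt <cryptfile> <input> -out <output>` command per representation. The helper below only constructs and prints the commands. The file names and output directory are hypothetical, and this is not the CableLabs script itself.

```python
import shlex
from pathlib import Path


def mp4box_encrypt_commands(cryptfile: str, inputs, out_dir: str = "encrypted"):
    """Build (but do not run) one MP4Box -crypt command per representation file."""
    commands = []
    for src in inputs:
        dst = Path(out_dir) / Path(src).name
        commands.append(["MP4Box", "-crypt", cryptfile, str(src), "-out", str(dst)])
    return commands


files = ["video_360k.mp4", "video_620k.mp4", "audio_128k.mp4"]   # hypothetical names
for cmd in mp4box_encrypt_commands("cryptfile.xml", files):
    print(shlex.join(cmd))   # e.g. run with subprocess.run(cmd, check=True)
```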

Creating MP4Box Cryptfiles with DRM Support

In any secure system, the keys necessary to decrypt protected media will most certainly be kept separate from the media itself. It is the DRM system’s responsibility to retrieve the decryption keys and any rights afforded to the user for that content by the content owner. If we wish to encrypt our own content, we will also need to ensure that the encryption keys are available on a per-DRM basis for retrieval when the content is played back.

CableLabs has developed custom software to generate MP4Box cryptfiles and ensure that the required keys are available on one or more license servers. The software is written in Java and can be run on any platform that supports a Java runtime. A simple Apache Ant buildfile is provided for compiling the software and generating executable JAR files. Our tools currently support the Google Widevine and Microsoft PlayReady DRM systems with a couple of license server choices for each. The first Adobe Access CDM is just now being released in Firefox and we expect to update the tools in the coming months to support Adobe DRM. Support for W3C ClearKey encryption is also available, but we will focus on the commercial DRM systems for the purposes of this article.

The base library for the software is CryptfileBuilder. This set of Java classes provides abstractions to facilitate the construction and output of MP4Box cryptfiles. All other modules in the toolset are dependent upon this library. Each DRM-specific tool has detailed documentation available on the command line (-h arg) and on our website.

Microsoft PlayReady Test Server

The code in our PlayReady software library provides 2 main pieces of functionality:

- PlayReady PSSH generator for MP4Box cryptfiles

- Encryption key generator for use with the Microsoft PlayReady test license server.

Instead of allowing clients to ingest their own keys, the license server uses an algorithm based on a “key seed” and a 128-bit key ID to derive decryption keys. The algorithm definition can be found in this document from Microsoft (in the section titled “Content Key Algorithm”). Using this algorithm, the key seed used by the test server, and a key ID of our choosing, we can derive the content key that will be returned by the server during playback of the content.
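Below is our reading of the key-seed derivation in Microsoft’s “Content Key Algorithm”. Only the first 30 bytes of the seed are used. The key ID is taken in .NET GUID byte order, and three SHA-256 digests over concatenations of seed and KID are XOR-folded into a 16-byte key. Treat this as a sketch to verify against Microsoft’s document and a known test vector. The seed below is a placeholder, not the test server’s seed.

```python
import hashlib
import uuid


def playready_content_key(key_seed: bytes, key_id: uuid.UUID) -> bytes:
    """Derive a 16-byte content key from a key seed and key ID
    (our reading of Microsoft's Content Key Algorithm; verify before use)."""
    if len(key_seed) < 30:
        raise ValueError("key seed must be at least 30 bytes")
    seed = key_seed[:30]        # only the first 30 bytes of the seed are used
    kid = key_id.bytes_le       # GUID byte order, like .NET Guid.ToByteArray()
    sha_a = hashlib.sha256(seed + kid).digest()
    sha_b = hashlib.sha256(seed + kid + seed).digest()
    sha_c = hashlib.sha256(seed + kid + seed + kid).digest()
    return bytes(
        sha_a[i] ^ sha_a[i + 16] ^ sha_b[i] ^ sha_b[i + 16] ^ sha_c[i] ^ sha_c[i + 16]
        for i in range(16)
    )


placeholder_seed = bytes(range(30))   # hypothetical: NOT the test server's seed
kid = uuid.uuid4()                    # a key ID of our choosing
print(kid, playready_content_key(placeholder_seed, kid).hex())
```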

Widevine License Portal

Similar to PlayReady, our Widevine toolset provides a PSSH generator for the Widevine DRM system. Widevine, however, does not provide a generic test server like the one from Microsoft. Users will need to contact Widevine to get their own license portal on Widevine’s servers. With that portal, you will get a signing key and initialization vector for signing your requests. You provide this information as input to the Widevine cryptfile generator.

The Widevine license server will generate encryption keys and key IDs based on a given “content ID” and media type (e.g. HD, SD, AUDIO). Widevine’s API has been updated since our tools were developed and now supports ingesting “foreign keys”, which our tools could generate themselves, but our tools do not currently support that.

DRMToday

The real power of Common Encryption is made apparent when you add support for multiple DRM systems in a single piece of content. With the license servers used in our previous examples, this was not possible because we were not able to select our own encryption keys (as explained earlier, Widevine has added support for “foreign keys”, but our tools have not been updated to make use of them). With that in mind, a new licensing system is required to provide the functionality we seek.

CableLabs has partnered with CastLabs to integrate support for their DRMToday multi-DRM licensing service in our content creation tools. DRMToday provides a full suite of content protection services including encryption and packaging. For our needs, we only rely on the multi-DRM licensing server capabilities. DRMToday provides a REST API that our software uses to ingest Common Encryption keys into their system for later retrieval by one of the supported DRM systems.

MPEG-DASH Segmenting and Packaging

The final step in the process is to segment our encrypted media files and generate an MPEG-DASH manifest (.mpd). For this, we once again use MP4Box, but this time with the -dash argument. There are many options in MP4Box for segmenting media files, so please run MP4Box -h dash to see the full list of configurations.

For the purposes of this article, we will focus on generating content that meets the requirements of the DASH-IF DASH-AVC264 “OnDemand” profile. Our repository contains a helper script that will take a set of MP4 files and generate DASH content according to the DASH-IF guidelines. Run this script to generate your segmented media files and produce your manifest.
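As a hedged sketch, the packaging step amounts to a single MP4Box invocation. The command below uses a 2000 ms segment duration, which is a multiple of the once-per-second I-frame interval. `-rap` asks MP4Box to start segments on random access points, and `dashavc264:onDemand` is the profile name GPAC uses for the DASH-IF AVC/264 on-demand profile as far as we know. Confirm the exact names with `MP4Box -h dash`. The helper and file names are hypothetical, and this is not the repository’s script.

```python
import shlex


def mp4box_dash_command(inputs, mpd_path="manifest.mpd", segment_ms=2000):
    """Build (but do not run) an MP4Box command that segments the given
    representation files and writes a DASH manifest."""
    if not inputs:
        raise ValueError("need at least one representation file")
    return [
        "MP4Box",
        "-dash", str(segment_ms),            # target segment duration, in ms
        "-rap",                              # start segments on random access points
        "-profile", "dashavc264:onDemand",   # DASH-IF AVC/264 on-demand profile
        "-out", mpd_path,
        *inputs,
    ]


reps = ["video_360k_enc.mp4", "video_4500k_enc.mp4", "audio_128k_enc.mp4"]   # hypothetical
print(shlex.join(mp4box_dash_command(reps)))
```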

Greg Rutz is a Lead Architect at CableLabs working on several projects related to digital video encoding/transcoding and digital rights management for online video.

This post is part of a technical blog series, "Standards-Based, Premium Content for the Modern Web".

Technical Blog

Web Media Playback: Solved with dash.js

(Disclaimer: the author is a regular contributor to the dash.js project)

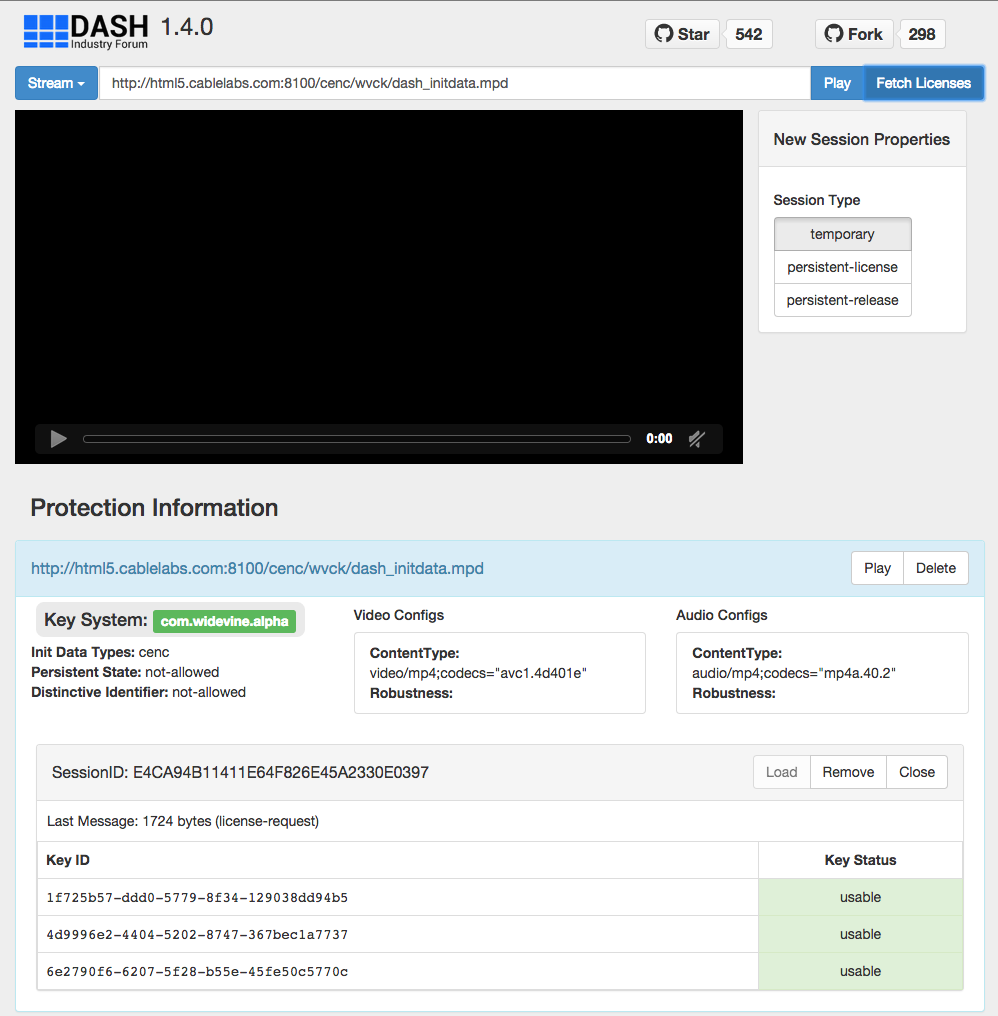

A crucial piece of any standards development process is the creation of a reference implementation to validate the feasibility of the many requirements set forth within the standard. After all, it makes no sense to create a standard if it is impossible to create fully compliant products. The DASH Industry Forum recognized this and created the dash.js project.

dash.js is an open-source JavaScript media player library accompanied by a few example applications. It relies on the W3C Media Source Extensions (MSE) for adaptive bitrate playback and the Encrypted Media Extensions (EME) for protected content support. Although dash.js started out as a reference implementation, it has been adopted by many organizations for use in commercial products. Because the DASH-IF Interoperability Points specification has become relatively stable with respect to base functionality, the development team is focused on adding features and improving performance to make the player even more useful to companies building web media players.

One of the strengths of dash.js is its rich feature set. It supports live and on-demand content, multi-period manifests, and subtitles, to name a few. The player is highly extensible, with an adaptive-bitrate rules engine, configurable buffer scheduling, and a metrics reporting system. The example application provided with the library source displays the current buffer levels and current representation index for both audio and video. It lets the user either initiate bitrate changes manually or leave them to the player rules. Finally, the app contains a buffer level graph, a manifest display, and a debug logging window.
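Here is a minimal throughput-based rule of the kind an adaptive-bitrate rules engine might evaluate. It is a simplified illustration, not one of the actual dash.js rules. The function name, the 0.9 safety factor, and the 2-second low-buffer threshold are all arbitrary assumptions.

```typescript
// One entry in the bitrate ladder: representation index and its bitrate (bits per second).
interface Rung { index: number; bandwidth: number; }

// Hypothetical rule: pick the highest rung whose bitrate fits within a safety
// fraction of the measured throughput; if the buffer is nearly empty, fall back
// to the lowest rung to reduce the risk of a stall.
function chooseQuality(rungs: Rung[], throughputBps: number, bufferSec: number,
                       safetyFactor = 0.9, lowBufferSec = 2): number {
  if (rungs.length === 0) throw new Error("no representations");
  const sorted = rungs.slice().sort((a, b) => a.bandwidth - b.bandwidth);
  if (bufferSec < lowBufferSec) return sorted[0].index;
  const budget = throughputBps * safetyFactor;
  let chosen = sorted[0]; // lowest rung if nothing fits the budget
  for (const r of sorted) {
    if (r.bandwidth <= budget) chosen = r;
  }
  return chosen.index;
}

// Example ladder: 500 kbps, 1.5 Mbps, 3 Mbps.
const exampleLadder: Rung[] = [
  { index: 0, bandwidth: 500000 },
  { index: 1, bandwidth: 1500000 },
  { index: 2, bandwidth: 3000000 },
];
const pick = chooseQuality(exampleLadder, 2000000, 10); // budget 1.8 Mbps selects index 1
```

A production rules engine typically combines several such rules, for example ones that also weigh buffer trends, dropped frames, or recent switch history, before committing to a quality change.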

CableLabs has been an active contributor to the dash.js project. Much of the EME support in dash.js was designed and implemented by CableLabs to ensure that the application will support the wide variety of API implementations found in production desktop web browsers today. Additionally, CableLabs has created and hosted test content in the dash.js demo application to ensure that others can observe the dash.js EME implementation in action and evaluate support for protected media on their target browsers.

Content Protection

The media player library contains extensive support for playback of protected content. The EME specification has gone through many revisions over the years, and browser vendors have shipped implementations based on different drafts. To support as many of these browsers as possible, dash.js defines a set of APIs (MediaPlayer.models.ProtectionModel) that acts as an abstraction layer over the underlying EME implementation, whatever that may be. The APIs are designed to mimic the functionality of the most recent EME specification, and several implementations of this API translate its calls back to the older EME versions found in production browsers. The media player detects browser support and instantiates the proper JavaScript class automatically.
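The abstraction-layer idea can be sketched as an adapter pattern. One interface is modeled on the newest EME API, several implementations translate it to older EME versions, and a selector picks one by feature detection. The class names, the version labels, and the EmeFeatures flags below are hypothetical; they are not the dash.js ProtectionModel API. Feature detection is reduced to booleans so the sketch runs outside a browser, and each adapter returns a description of the browser call it would make rather than calling EME.

```typescript
// Hypothetical summary of which EME entry points a browser exposes. In a real
// player these flags would come from feature-detecting window/navigator objects.
interface EmeFeatures {
  requestMediaKeySystemAccess: boolean; // navigator.requestMediaKeySystemAccess (current EME)
  mediaKeysIsTypeSupported: boolean;    // MediaKeys.isTypeSupported (early-2014 drafts)
  webkitGenerateKeyRequest: boolean;    // HTMLMediaElement.webkitGenerateKeyRequest (v0.1b)
}

// Common interface modeled on the newest API; each adapter maps it to the call
// its EME generation provides.
interface ProtectionModel {
  readonly version: string;
  // Describes the browser call used to create a session and request a license.
  requestCall(): string;
}

class CurrentEmeModel implements ProtectionModel {
  readonly version = "current";
  requestCall(): string { return "MediaKeys.createSession() + MediaKeySession.generateRequest()"; }
}

class Early2014EmeModel implements ProtectionModel {
  readonly version = "early-2014-draft";
  requestCall(): string { return "MediaKeys.createSession(contentType, initData)"; }
}

class V01bEmeModel implements ProtectionModel {
  readonly version = "0.1b";
  requestCall(): string { return "HTMLMediaElement.webkitGenerateKeyRequest(keySystem, initData)"; }
}

// Prefer the newest API the browser supports; return null if EME is unavailable.
function selectProtectionModel(f: EmeFeatures): ProtectionModel | null {
  if (f.requestMediaKeySystemAccess) return new CurrentEmeModel();
  if (f.mediaKeysIsTypeSupported) return new Early2014EmeModel();
  if (f.webkitGenerateKeyRequest) return new V01bEmeModel();
  return null;
}
```

Because the rest of the player codes only against the common interface, supporting another EME variant means adding an adapter and a detection branch. The playback logic does not change.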

The MediaPlayer.models.ProtectionModel and MediaPlayer.controllers.ProtectionController classes provide the application with access to the EME system both inside and outside the player. ProtectionModel provides management of MediaKeySessions and protection system event notification for a single piece of content. Most actions performed on the model are made using ProtectionController. Applications can use the media player library to instantiate these classes outside of the playback environment to pre-fetch licenses. Once licenses have been pre-fetched, the app can attach the protection objects to the player to associate the licenses with the HTMLMediaElement that will handle playback.
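Pre-fetching means license acquisition happens before the content is attached for playback. Something therefore has to remember which key IDs are already covered when the content is attached later. The sketch below is a hypothetical bookkeeping class (PrefetchedLicenses is not a dash.js class). It records sessions as pre-fetch requests complete and, at attach time, reports which of the content's KIDs can reuse an existing session and which still need a license request.

```typescript
// Hypothetical record of a license obtained before playback.
interface PrefetchedLicense { kid: string; sessionId: string; }

class PrefetchedLicenses {
  private byKid: { [kid: string]: PrefetchedLicense } = {};

  // Call when a pre-fetch license request completes (e.g. from a license response handler).
  add(license: PrefetchedLicense): void {
    this.byKid[license.kid.toLowerCase()] = license;
  }

  // At attach time, match each KID in the content against pre-fetched sessions.
  attach(contentKids: string[]): { reused: PrefetchedLicense[]; missing: string[] } {
    const reused: PrefetchedLicense[] = [];
    const missing: string[] = [];
    for (const kid of contentKids) {
      const key = kid.toLowerCase(); // KIDs compared case-insensitively as hex strings
      if (Object.prototype.hasOwnProperty.call(this.byKid, key)) reused.push(this.byKid[key]);
      else missing.push(kid);
    }
    return { reused, missing };
  }
}
```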

An EME demo app is provided with dash.js (samples/dash-if-reference-player/eme.html) that offers visibility into, and control over, the EME operations taking place in the browser. The app allows the user to pre-fetch licenses, manage key session persistence, and play back associated content. It also shows the selected key system and the status of all licenses associated with key sessions.
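A status view like this can be approximated with a small helper. In a browser, the entries would come from each MediaKeySession's keyStatuses map, a MediaKeyStatusMap whose values are MediaKeyStatus strings. Here the entries are passed in as plain objects, and the helper names and the "all keys usable" policy are assumptions for illustration.

```typescript
// MediaKeyStatus values defined by the W3C EME specification.
type KeyStatus = "usable" | "expired" | "released" | "output-restricted"
  | "output-downscaled" | "status-pending" | "internal-error";

interface KeyStatusEntry { kid: string; status: KeyStatus; }

// Group KIDs by status so a UI can show which keys in a session can decrypt.
function groupByStatus(entries: KeyStatusEntry[]): { [status: string]: string[] } {
  const groups: { [status: string]: string[] } = {};
  for (const e of entries) {
    if (!groups[e.status]) groups[e.status] = [];
    groups[e.status].push(e.kid);
  }
  return groups;
}

// Treat "output-downscaled" as usable: per the EME spec, content decrypted with
// such a key may be presented at lower quality, but it is still decryptable.
function allKeysUsable(entries: KeyStatusEntry[]): boolean {
  return entries.every((e) => e.status === "usable" || e.status === "output-downscaled");
}
```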
