Convergence

Revolutionizing Telecommunications with Intent-Based Quality on Demand APIs

In today’s digitally connected world, where everyone expects high-speed and reliable connections for their data-intensive activities, quality of service (QoS) plays the starring role. Think of QoS as the traffic cop of the digital highway, ensuring that data gets where it needs to go smoothly and quickly. For service providers, QoS is more than just traffic management. It’s a way to ensure customer satisfaction, differentiate their services and ultimately succeed in the ever-competitive telecommunications market.

The Game-Changing Extension

Details of the CAMARA Quality on Demand API

Details About QoS Profile Attributes

Benefits of Open Source

Next Steps

Convergence

Up Ahead: Better Connections, Happier Users and Lower Costs

Whether at home, in the office, at a coffee shop or on the go, we all expect a dependable internet connection. Even when we're offered bundled services that combine wireless and wireline network access, uninterrupted connectivity often isn't as straightforward as it sounds. Simply providing connectivity over multiple access network types isn't sufficient for meeting customers’ expectations, and managing these as independent networks drives operational costs and complexity.

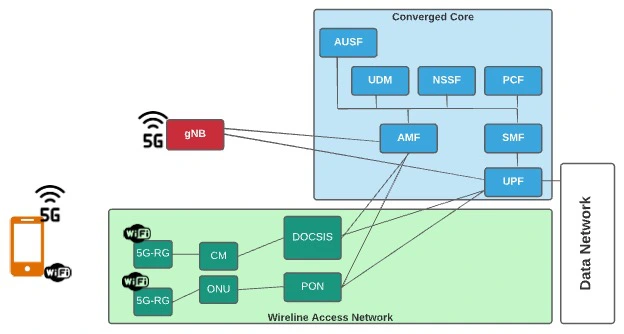

How Wireless-Wireline Convergence Works

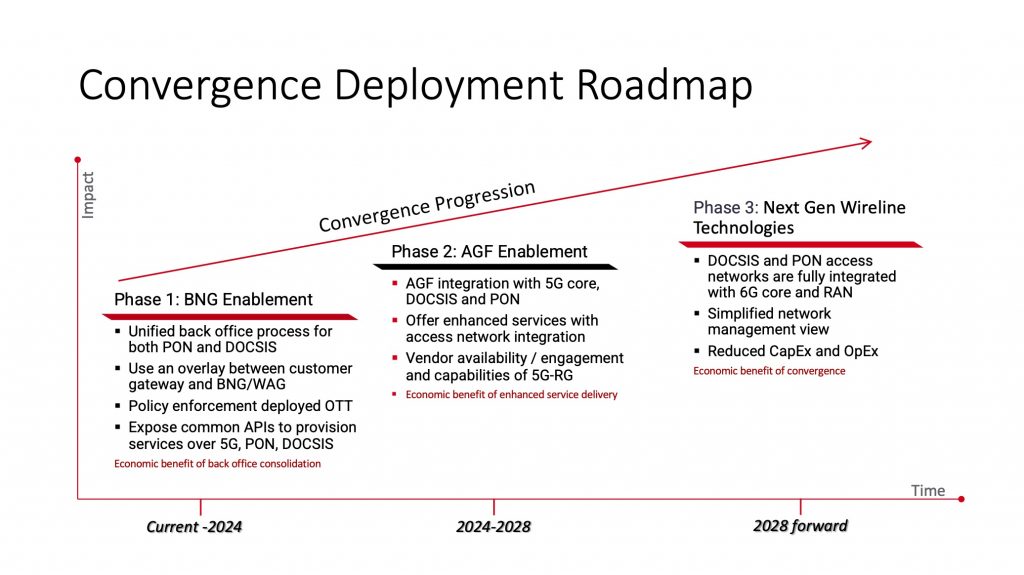

When Will Network Convergence Become a Reality?

Security

Transparent Security Outperforms Traditional DDoS Solution in Lab Trial

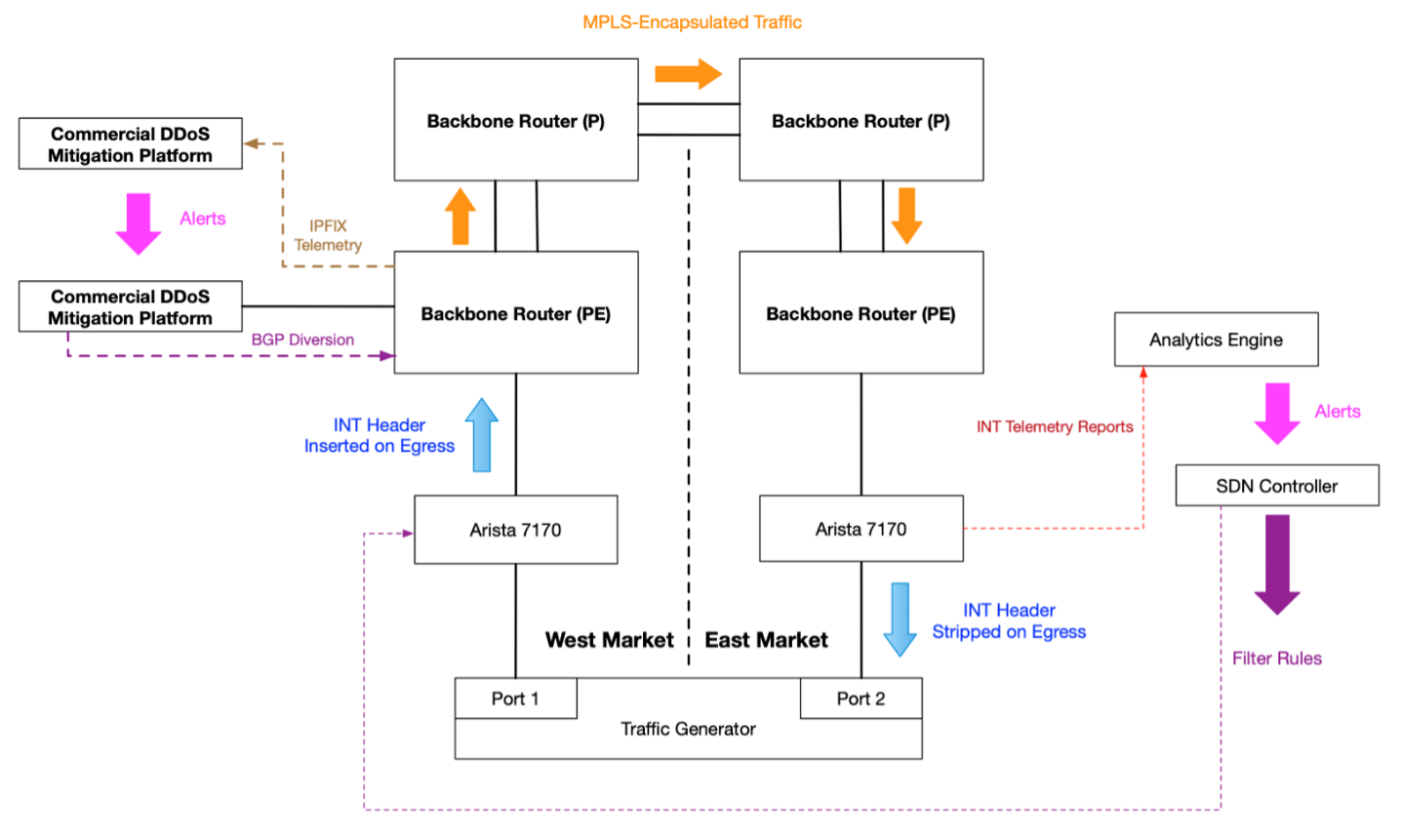

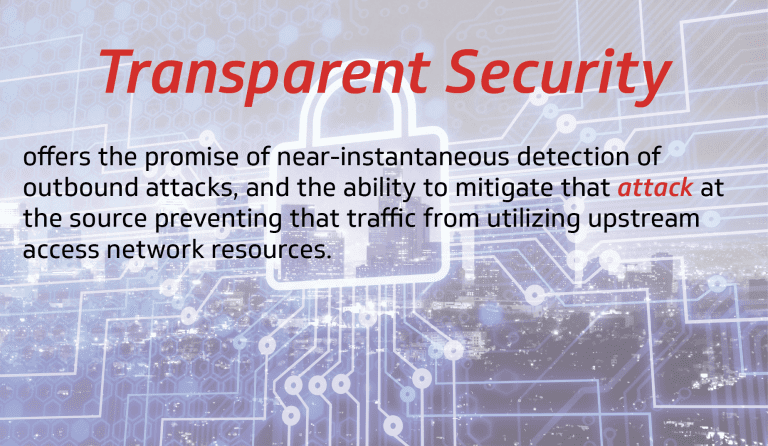

Transparent Security is an open-source solution for mitigating distributed denial of service (DDoS) attacks and identifying the devices (e.g., Internet of Things [IoT] sensors) that are the source of those attacks. Transparent Security is enabled through a programmable data plane (e.g., “P4”-based) and uses in-band network telemetry (INT) technology for device identification and mitigation, blocking attack traffic where it originates on the operator’s network.

The History and Updates of Transparent Security

Why Cox Is Interested

Lab Trial Setup

Results

Conclusion and Next Steps

Virtualization

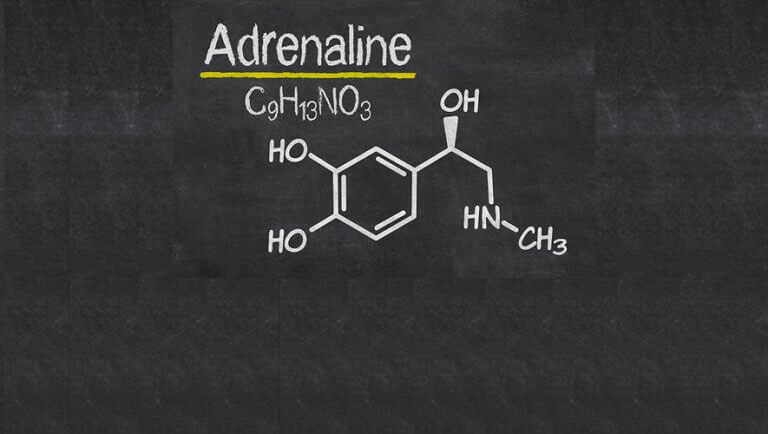

Give your Edge an Adrenaline Boost: Using Kubernetes to Orchestrate FPGAs and GPU

Over the past year, we’ve been experimenting with field-programmable gate arrays (FPGAs) and graphics processing units (GPUs) to improve edge compute performance and reduce the overall cost of edge deployments.

New Features

Hardware Acceleration

Accelerator Installation Challenges

Co-Innovation

Extending Project Adrenaline

Security

Vaccinate Your Network to Prevent the Spread of DDoS Attacks

CableLabs has developed a method to mitigate Distributed Denial of Service (DDoS) attacks at the source, before they become a problem. By blocking compromised devices at the source, service providers can help customers identify and fix those devices on their network.

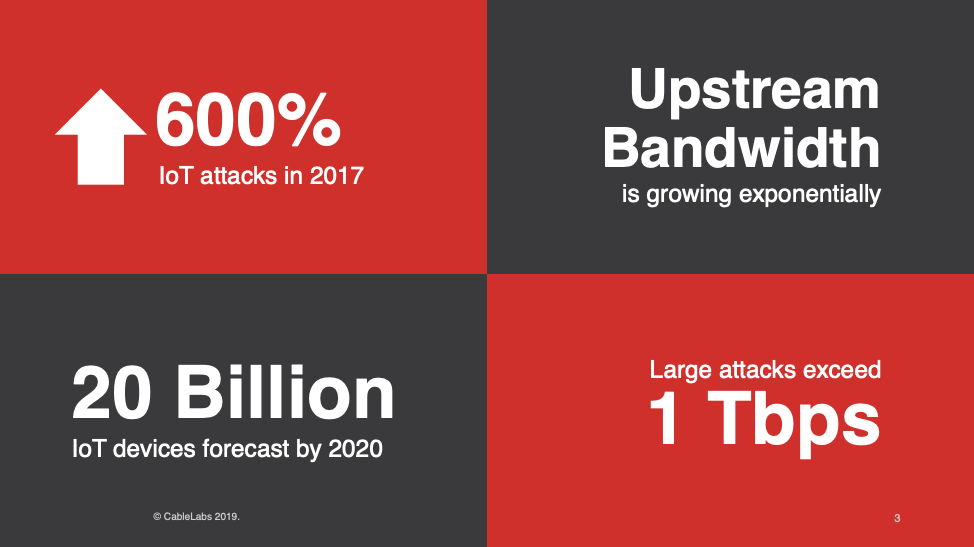

DDoS Is a Growing Threat

Enabled by the Programmable Data Plane

Comparison Against Traditional DDoS Mitigation Solutions

Just the Beginning

Join the Project

Virtualization

CableLabs Announces SNAPS-Kubernetes

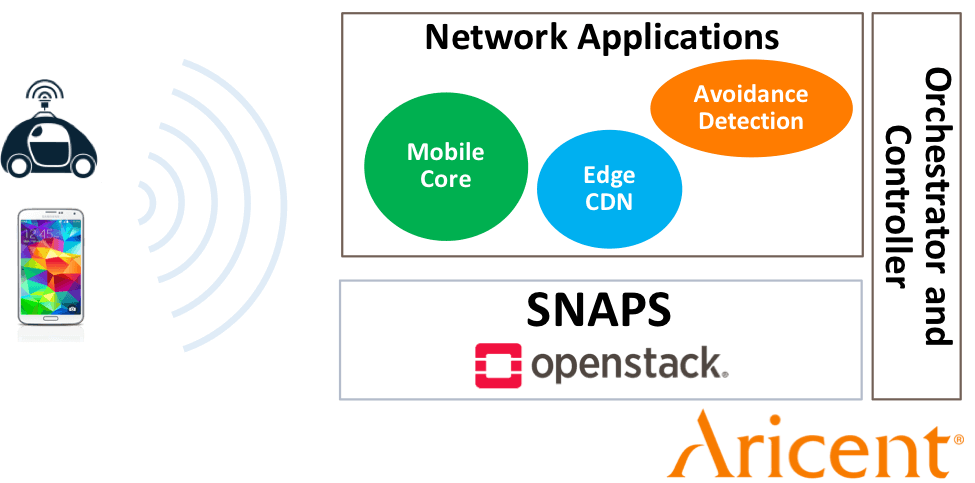

Today, I’m pleased to announce the availability of SNAPS-Kubernetes. The latest in CableLabs’ portfolio of open source projects to accelerate the adoption of Network Functions Virtualization (NFV), SNAPS-Kubernetes provides easy-to-install infrastructure software for lab and development projects. SNAPS-Kubernetes was developed with Aricent, which has also written about this release on its blog.

Member Impact

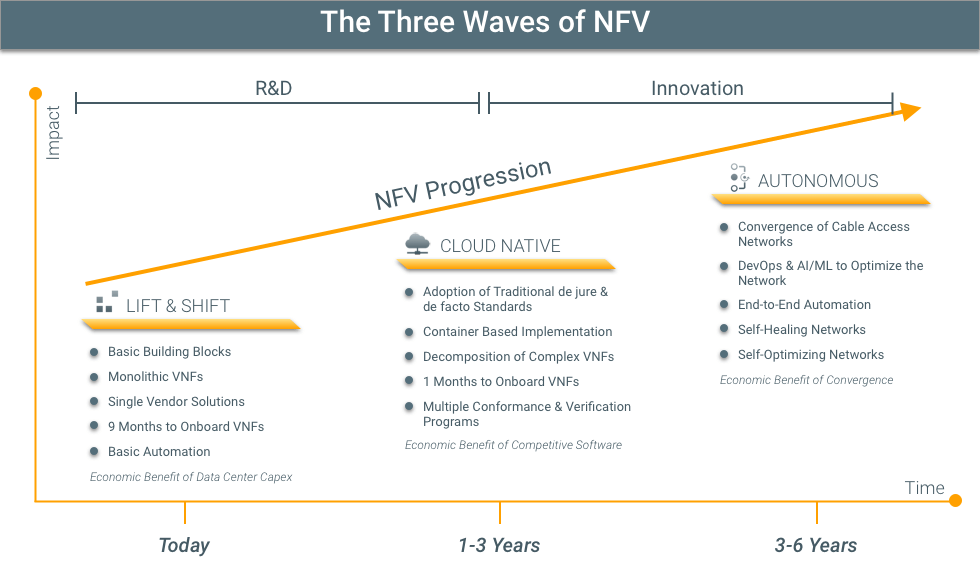

Three Waves of NFV

Lift & Shift

Cloud Native

Autonomous Networks

Features

Next Steps

Try It Today

Have Questions? We’d Love to Hear from You

Virtualization

CableLabs Announces SNAPS-Boot and SNAPS-OpenStack Installers

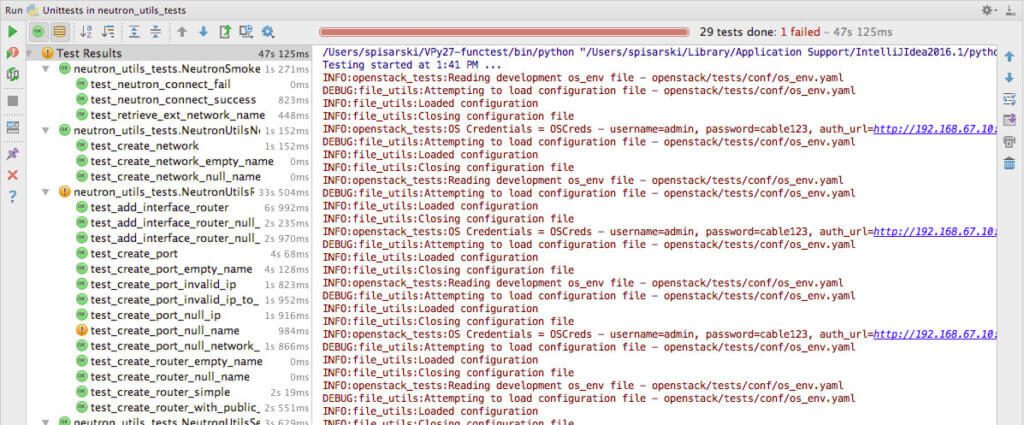

I have been living and breathing open source since I first experimented with it in high school, and there is nothing as sweet as sharing your latest project with the world! Today, CableLabs is thrilled to announce the extension of our SNAPS-OO initiative with two new projects: the SNAPS-Boot and SNAPS-OpenStack installers. SNAPS-Boot and SNAPS-OpenStack are based on requirements generated by CableLabs to meet our members’ needs and drive interoperability. The software was developed by CableLabs and Aricent.

SNAPS-Boot

SNAPS-OpenStack

How you can participate:

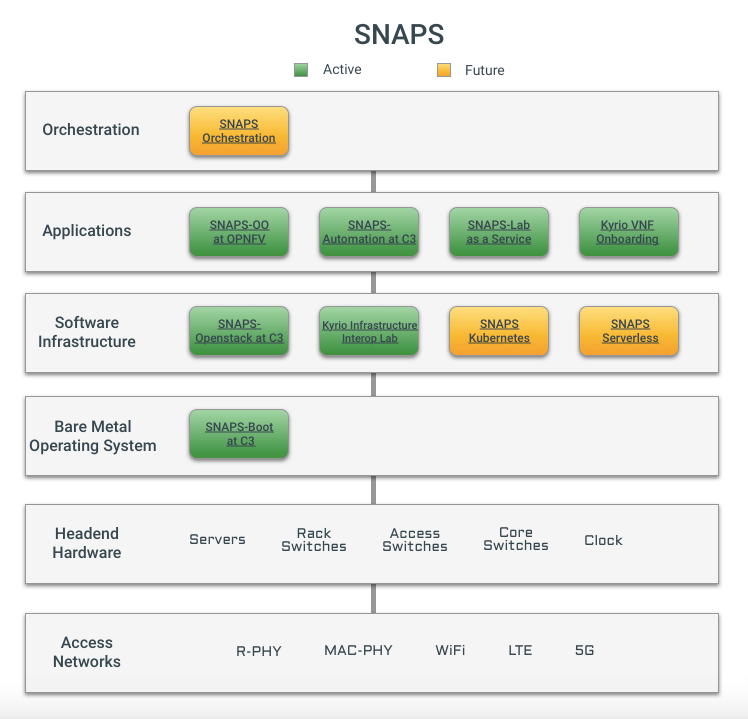

Why SNAPS?

Who benefits from SNAPS?

How we developed SNAPS:

Where the future of SNAPS is headed:

Have Questions? We’d love to hear from you

Virtualization

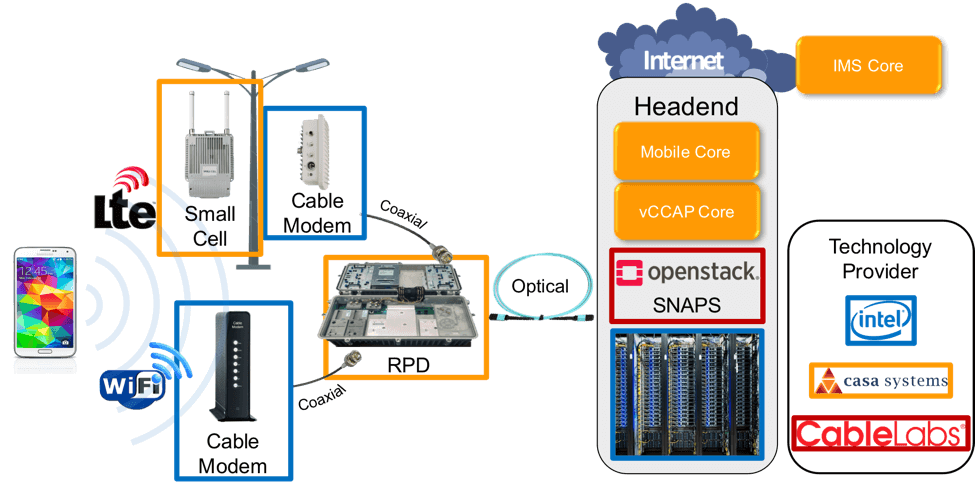

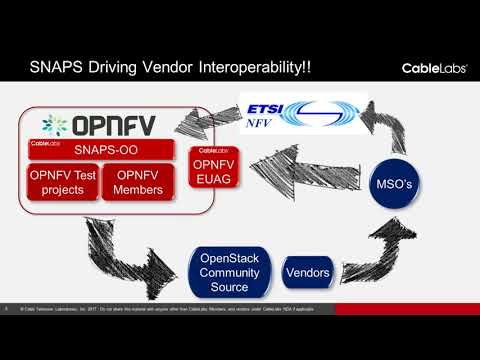

NFV for Cable Matures with SNAPS

SNAPS is improving the quality of open source projects associated with the Network Functions Virtualization (NFV) infrastructure and Virtualized Infrastructure Managers (VIMs) that many of our members use today. In my posts “SNAPS is an Open Source Collaborative Development Resource” and “Snapping Together a Carrier Grade Cloud,” I talk about building tools to test the NFV infrastructure. Today, I’m thrilled to announce that we are deploying end-to-end applications on our SNAPS platform.

Background

Why SNAPS is unique

Webinar: Proof of Concepts

Casa and Intel: Virtual CCAP and Mobile Cores

Advantages of Kyrio’s NFV Interop Lab

Aricent: Low Latency and Backhaul Optimization

Virtualization

5 Things I Learned at OpenStack Summit Boston 2017

Recently, I attended OpenStack Summit in Boston with more than 5,000 other IT business leaders, cloud operators and developers from around the world. OpenStack is the leading open source cloud infrastructure software run by enterprises and public cloud providers, and it is increasingly being used by service providers for their NFV infrastructure. Many of the attendees are operators and vendors who collaboratively develop the platform to meet an ever-expanding set of use cases.

1. Edward Snowden's Opinions on Security and Open Source

2. OpenStack is Helping Make the World Safer

3. Lightweight OpenStack Control Planes for Edge Computing

4. Aligning Container and Virtual Machine Technologies

5. Data Plane Acceleration

Virtualization

SNAPS-OO is an Open Sourced Collaborative Development Resource

In a previous blog post, I provided an overview of SNAPS, CableLabs’ SDN/NFV Application Development Platform and Stack project. The key objectives for SNAPS are to make it much easier for NFV vendors to onboard their applications, provide transparent APIs for various kinds of infrastructure and reduce the complexity of integration testing.