Innovation

The Near Future. Diverse Thinkers Wanted: 10 Fun Facts

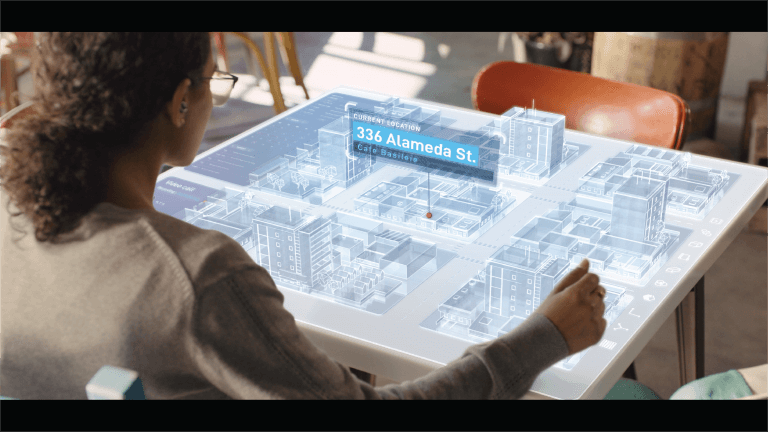

This week, at our Summer Conference, we released a short film titled The Near Future. Diverse Thinkers Wanted. The fourth installment in our Near Future series, focusing on light field technology, mixed reality and AI, the film highlights how our broadband networks and increased connectivity keep everyone in the workplace seamlessly connected and more creative. Here are ten fun facts about our film:

- The autonomous cars in the film appear to have no steering wheel. This was achieved by using real cars with steering wheels and producing carefully mirrored shots: the set, costumes, props and stage direction were all mirrored, and each shot was then flipped in post-production, so the driver's side of the car appeared to have no steering wheel.

- The lead actress ran so much in the film that she had to use two sets of shoes to avoid blisters. In shots that showed her feet, she used her costume’s business shoes; in other shots, she used running shoes.

- The opening chase scene from the café to the cars took more than 20 takes to get everything shot properly from every angle. Both actors were exhausted but happy to add a chase scene to their acting experience.

- The café in the film does not exist. Every table, chair, cup, painting and other prop was brought into an empty retail space that the art department dressed as a café. Two days after it was built, the whole set was taken down, leaving only an empty retail space again.

- The holographic video content in the autonomous car assumes that the windshield glass works with an element in the dashboard to generate the media. The producers initially thought that light field glass technology was “too sci-fi,” but the idea made the cut because glass displays already exist.

- One day of shooting happened at a college, and parking had to be coordinated on narrow campus grounds. While one of the red “autonomous” cars was being parked, it hit a concrete corner of an outdoor seating area, which ripped through the metal of the car’s passenger side door. Nobody was hurt, and thankfully the car scene had already been shot.

- The home set for the quadriplegic character was actually an office kitchen converted into a living space. Every item in the office kitchen was taken out, and every prop, including the tables and chairs in the background and every item on the walls, was brought in and designed to look like a home. After shooting, it was all torn down and the office kitchen was put back together exactly as it was.

- Several quadriplegic actors auditioned for the part, but the actor who was cast is not disabled. He said that being able to move only his eyes and face was one of the hardest acting challenges he’s ever had.

- The Holo-Room was designed and mostly constructed beforehand so that it could be moved piece by piece into an office space for quick assembly. It was moved in and built in a single day, then torn down.

- The film was shot entirely in San Diego, marking the first time a Near Future film had no scenes filmed in the Bay Area.

Events

The Future of Extended Reality

The Boulder International Film Festival (BIFF) has become one of the most influential and innovative film festivals in the United States. It might seem strange that CableLabs, a core technology company, was invited to participate in the festival’s creative conversations about the future of narrative media. Technology and the arts have always fed off of each other, but every once in a while, a generational disruption defines a new paradigm for both of them.

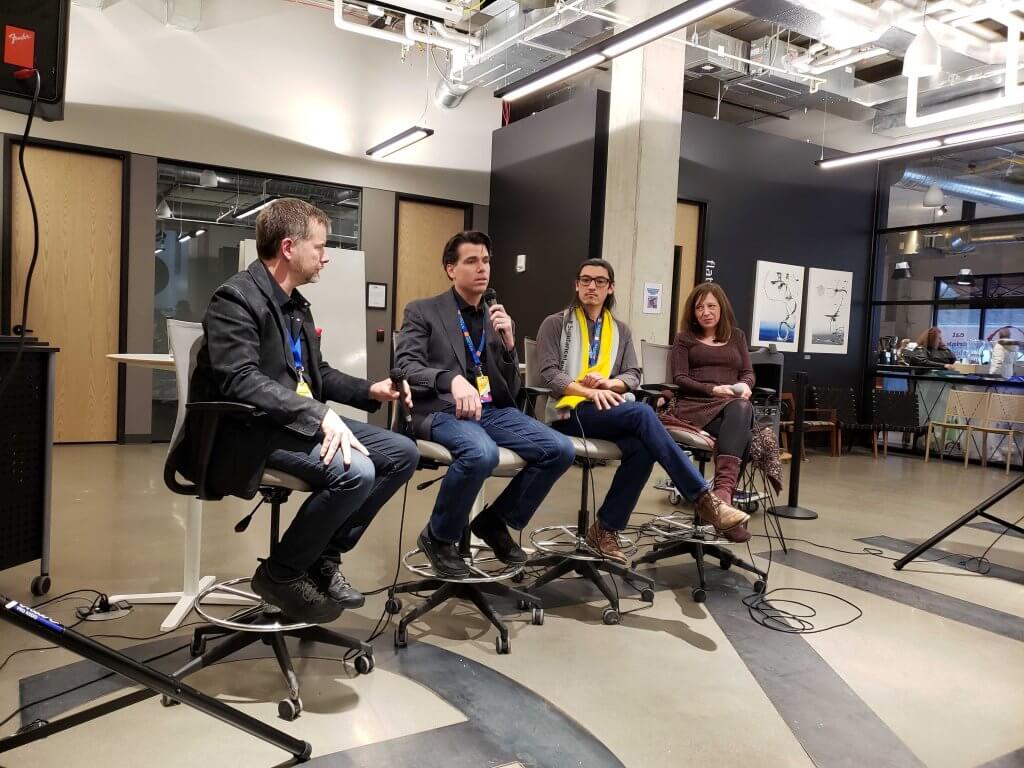

XR Panel

The festival’s panel on extended reality (XR) experiences was hosted by Mark Read, founder of Hypercube, and I was honored to share the stage with Jeff Orlowski, Emmy-winning filmmaker, and Brenda Lee, founder and CEO of Reality Garage. The panel discussed XR as an umbrella term for the ways real and virtual environments are combined, whether mostly virtual, as in virtual reality (VR), or more blended, as in mixed reality. It also covered the ever-improving technologies that make immersive media environments possible and the ways those environments can be experienced.

Discussions explored current immersive technologies, such as 360VR (looking in all directions in a static position), 6 Degrees of Freedom or 6DoF (looking and moving about in all directions and positions), augmented and mixed reality (looking through glasses to see digital media interacting with physical surroundings), and light field holographic displays (looking at true 3D volumetric media without glasses), as well as artificial intelligence (AI) and how it might be used within these environments.
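The difference between 360VR and 6DoF comes down to what the headset tracks. In 360VR the viewer can only rotate in place. In 6DoF the viewer can rotate and also move. The minimal sketch below illustrates this. The class and function names are hypothetical and do not come from any headset SDK. Euler angles in degrees and positions in metres are simplifying assumptions; many real tracking APIs represent rotation differently, for example as quaternions.

```python
from dataclasses import dataclass


@dataclass
class Orientation3DoF:
    """360VR: rotation only, so the viewer looks around from a fixed point."""
    yaw: float    # degrees, turning left/right (assumed convention)
    pitch: float  # degrees, looking up/down
    roll: float   # degrees, tilting the head


@dataclass
class Pose6DoF:
    """6DoF: rotation plus translation, so the viewer can also move through the scene."""
    orientation: Orientation3DoF
    x: float  # metres, left/right (assumed convention)
    y: float  # metres, up/down
    z: float  # metres, forward/back


def apply_motion(pose, rotation, translation):
    """Apply one frame of head movement (hypothetical helper; angles are not wrapped)."""
    d_yaw, d_pitch, d_roll = rotation
    if isinstance(pose, Pose6DoF):
        o = pose.orientation
        new_o = Orientation3DoF(o.yaw + d_yaw, o.pitch + d_pitch, o.roll + d_roll)
        dx, dy, dz = translation
        return Pose6DoF(new_o, pose.x + dx, pose.y + dy, pose.z + dz)
    # 3DoF (360VR): the viewer's position is fixed, so translation is discarded
    return Orientation3DoF(pose.yaw + d_yaw, pose.pitch + d_pitch, pose.roll + d_roll)


def degrees_of_freedom(pose) -> int:
    """Count the tracked degrees of freedom for a pose type."""
    return 6 if isinstance(pose, Pose6DoF) else 3


# The same physical movement: turn 30 degrees and step half a metre to the right.
seated = apply_motion(Orientation3DoF(0, 0, 0), (30, 0, 0), (0.5, 0, 0))
roaming = apply_motion(Pose6DoF(Orientation3DoF(0, 0, 0), 0, 0, 0), (30, 0, 0), (0.5, 0, 0))
```

The 360VR viewer's turn registers, but the step is ignored. The 6DoF viewer both turns and actually moves within the scene. That added freedom of movement is what the panel's discussion of 6DoF refers to.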

The Importance of Narratives

The panel dove into what’s currently working and not working with XR, especially concerning narratives. One theme, consistent across the panel, was that immersive experiences feel much more real. Seeing a lion in VR gives the user a much more tangible experience of the animal compared with viewing it on a flat screen. But the novelty of these experiences tends to be short-lived, partly because the headsets required for them are still too bulky and off-putting, but mostly because the narrative quality of today’s titles is not compelling enough to move the needle on mass consumer adoption.

Millions of people love to watch The Lion King. It doesn’t matter that the lions aren’t real, or that the audience can’t walk around a scene. The quality of the narrative experience is what consumers demand, and that quality comes from over a century of evolution in how to tell compelling visual stories in the cinematic arts.

Games are the same way: Using 6DoF to move around inside of a game is not what causes gamers to return to their favorite titles. The 6DoF experience is compelling for a little while, but for most people, the flat screen comes back after the novelty of the XR has passed.

New Paradigm

For XR today, there’s a big gap between what the technology can do and what consumers will pay for, but the panel agreed unanimously that this gap is temporary. The clunky headsets, the limited environments and haptics (physical feedback), and the dumb characters and dialogue are all just stepping stones toward smarter and more compelling immersive systems.

The field of XR, as a narrative craft, is in its infancy and requires a new creative paradigm to move forward. XR can be compared with the sophistication of the first film 120 years ago, when an edit had not yet been conceived, much less the use of different camera angles. In the gaming industry, XR is comparable to Atari’s Pong, before the 2-D joystick control made more innovative games possible. What set of technologies and content will move the needle of XR forward to mass consumer adoption?

This is a new era in the digital media ecosystem. Gaming was the first evolution beyond passive television, leading to the explosion of interactive entertainment we see today, and now another kind of experience is on its way. The new paradigm of the consumer experience is a move from controlling the content to being with the content.

What CableLabs is Doing

These ideas are not science fiction: CableLabs is working with a diverse group of industry leaders to standardize the format for network delivery of immersive media. This includes developing interoperable interfaces and exchange formats that will be shared by the ecosystem of capture-and-display manufacturers, network providers and content creators. With the capacity of the 10G network, extended reality will scale to hundreds of millions of consumers across the US.

What does an XR industry ecosystem look like at mass consumer adoption? This is the question that’s getting the attention of the cable industry, and it’s why we’re working directly with creatives as well as everybody else in the ecosystem: to create the future.

Innovation

10 Fun Facts about our Film The Near Future. Ready for Anything.

"Ideas without execution are a hobby, and true innovators are not in the hobby business." -- Phil McKinney

This week at our Summer Conference we released a short film titled The Near Future. Ready for Anything. The third in our Near Future series, focusing on virtual reality and AI, the film highlights the crucial role our broadband networks and increased connectivity will play in future innovations in education.

Here we outline 10 fun facts about our film:

- To cast the lead role, a casting agency started with 50 young women. The producers listened to readings from the top 25, callbacks were held for the top 10, then 5, 3 and 2, and finally the star of the film, Violet Hicks, was chosen for the role of Millie.

- The video-wall scene was created by constructing two separate classrooms next to each other with a large glass pane for the shared wall. After the scene was shot, both classrooms were torn down, including carpet, walls, and window tint, all in the same day.

- The rainbow cut-outs on the school wall were actual school art projects. All other classroom items, including the wall decorations, desks and chairs, were ordered from a props company specifically for the film.

- Cookie the robot from The Near Future. A Better Place. makes a cameo appearance in the background of one of the classrooms.

- To get the AI Agent to appear to float on the moon, the actress stood on a green painted Lazy Susan-like disk, and two technicians manually spun her around on it as she was being filmed. The green disk was removed in post-production and replaced with visual effects, and the actress was shrunk down to the appropriate size for the scene.

- The name, Dot, is a take on the first smart watch, Spot, from 2004.

- The garden scene was shot at an elementary school in Mill Valley where gardening is a part of the curriculum.

- The Light Field scene at the end required a very specific office layout. Offices were scouted over several weeks before one was finally found in a high-rise in downtown San Francisco.

- That same Light Field scene took about 20 takes to get all four actors to choreograph their parts properly, all without any visual reference for the holographic media their characters were supposed to be seeing.

- Prior to filming The Near Future. Ready for Anything., the film’s camera technician had just finished work on Jurassic World, and he has worked on every film in the series since the first Jurassic Park in 1993. The amount of green screen work and visual effects in the film required his expertise.

Now, sit back, relax and see if you can spot all 10 in our video:

You can learn more about the integral role the cable industry is playing in the innovations of the #nearfuture by clicking below.

Video

The Near Future of AI Media

CableLabs is developing artificial intelligence (AI) technologies that are helping pave the way to an emerging paradigm of media experiences. We’re identifying the architecture and compute demands that must be met to deliver these kinds of experiences over the network without buffering.

The Emerging AI Media Paradigm

According to Aristotle, philosophical debate is the best way to arrive at empirical truth, and story is the second best. Since the days of ancient oral tradition, storytelling practices have evolved, and in recent history, technology has brought story to the platforms of cinema and gaming. Although the methods to create story differ on each platform, the fact that story requires an audience has always kept it separate from the domain of Aristotle’s philosophical debate…until now.

The screen and stage are observational: by nature, they are separate and removed from causal reality. The same principle applies to games. A child observing an anthill can use a stick to poke and prod at the ants to see how they respond, but the experience remains observational, and the practices that have made gaming work until now have been built upon that principle.

To address how AI is changing story, it’s important to understand that platforms using six degrees of freedom (6DoF) have largely removed the observational experience. Creating VR content with observational methods is ultimately a letdown: the user is present and active within the VR system, and observational methods don’t measure up to what the user’s freedom of movement, or agency, calls for. It’s like putting a child in a playground with a stick to poke and prod at it.

AI story is governed by methods of experience, not observation. This paradigm shift requires looking at actual human experience, rather than the narrative techniques that have governed traditional story platforms.

Why VR Story is Failing

The HBO series Westworld is about an AI-driven theme park with hundreds of robot ‘Hosts’ that are indistinguishable from real people. Humans are invited to indulge their every urge, killing and desecrating the Hosts as they wish, since none of their actions have any real-world consequence. What Westworld misses, despite a compelling argument from its writers, is that in reality humans are fragile, complex social beings rather than sociopaths, and when given a choice, becoming more primal and brutal is not a path to a better human experience. What is real about Westworld, however, are the mythic lessons the story handles: the seduction of technology, the risk of humans playing God, and overcoming controlling authority, to name a few.

The same experiential principle applies to superheroes or any kind of fictional action figure: being invincible or having superhuman powers is not part of authentic human becoming. These devices work well for teaching about dormant psychological forces, but not for actually assimilating them, and this is where the confusion around VR story begins.

Producing narrative experiences for 6DoF, including social robotics, means handling real human experience, and that means understanding a person’s actual emotion, behavior, and perception. Truth is not arrived at by observing a story; rather, it is assimilated through experience.

A New Era for Story

The most famous scene in Star Wars is when Vader is revealed as Luke’s father. Luke makes the decision to commit suicide rather than join the dark side with his father. Luke miraculously survives his fall into a dark void, is rescued by Leia, and ultimately emerges anew. The audience naturally understands the evil archetype of Vader, the good archetype of Obi-Wan, and Luke’s epic sacrifice of choosing principle over self in this pivotal scene. The archetypes and the sacrifice are real, and that’s why they work for Star Wars or for any story and its characters, but Jedi are not real. Actually training to become a Jedi is delusional, but the archetype of a master warrior in any social domain is very real.

And this is where it gets exciting.

Story, it turns out, was not originally intended as passive entertainment; rather, it was a way to guide and teach people about actual experience. The concept of archetypes goes back to Plato, but Carl Jung and Joseph Campbell made them popular, and it was Hollywood that adopted them for character development and screenwriting. According to Jung, archetypes are primordial energies of the unconscious that move into the conscious. In order for a person to grow and build a healthy psyche, he or she must assimilate the archetype that’s showing up in their experience.

The assimilation of archetypes is a real experience, and according to Campbell, the process of assimilation is when a person feels most fully alive. Movies, being observed by the audience, are like a mental exercise for how a person might go about that assimilation. If story is to scale on the platforms of VR, MR (mixed reality) and social robotics, the user must experience archetypal assimilation to a degree of reality that the flat screen cannot achieve.

But how? The scope of producing for user archetypes is massive. Truly attempting it means going full circle back to Aristotle’s philosophy and its offspring disciplines of psychology, theology, and the sciences, all of which have their own thought leaders, debates, and agreements on what truth by experience really means. The future paradigm of AI story, it seems, includes returning to the Socratic method of questioning, probing, and arguing in order to build critical thinking skills and self-awareness in the user. If AI can be used to achieve a greater reality in that experience, then this is the beginning of a technology that could turn computer-human interaction into an exciting adventure into the self.

You can learn more about what CableLabs is working on by watching my demonstration, in partnership with USC's Institute for Creative Technologies, on AI agents.

Eric Klassen is an Executive Producer and Innovation Project Engineer at CableLabs. You can read more about storytelling and VR in his article in Multichannel News, "Active Story: How Virtual Reality is Changing Narratives." Subscribe to our blog to keep current on our work in AI.

Consumer

A Look into the Near Future

CableLabs has done something surprising for an innovation and R&D lab: it has released a short film that offers a vision of the possibilities arising from the high-speed, low-latency networks that will connect our homes, businesses and mobile devices in the not-too-distant future.

Portraying a number of vignettes in the life of a family, the video illustrates the impact of new technologies on a range of human interactions. These include holographic education, autonomous vehicles, augmented and virtual reality gaming, collaborative work and much more.

The film, produced from the vision of the CableLabs innovation team, offers a compelling view into the technology-driven transformative shifts that could occur over the next five years.

Filming was not a trivial task. To properly illustrate each technology, most of the shots required special effects. The visual effects company FirstPerson was brought onto the team to 3D map each set in preparation for post-production. After shooting was completed, the effects team matched the 3D maps to the real locations, bringing the virtual reality technology to life.

To see the film and more, go to www.thenearfuture.network

Video

Active Story: How Virtual Reality is Changing Narratives

I love story; it’s why I got into filmmaking. I love the archetypal form of the written story, the many compositional techniques to achieve a visual story, and using layers of association to tell story with sound. But most of all, I love story because of its capacity to teach. Telling a story to teach an idea, it has been argued, is the most fundamental technology for social progress.

When the first filmmakers were experimenting with shooting a story, they couldn’t foresee the many creative camera angles used today: their films more closely resembled a recorded stage play. After decades of experimentation, filmmakers discovered more and more about what they could do with the camera, lighting, editing, sound, and special effects, and how a story could be better told as a result. Through much trial and error, film matured into its own discipline.

Like film over a century ago, VR is facing unknown territory and needs creative vision balanced with hard testing. If VR is to be used as a platform for story, it’s going to have to overcome some major problems. As a recent cover story from Time reported, gaming and cinema story practices don’t work in virtual reality.

VR technology is beckoning storytellers to evolve, but there’s one fundamental problem standing in the way: the audience is now in control of the camera. When the viewer is given this power, as James Cameron points out, the experience becomes a gaming environment, which calls for a different method to create story.

Cameron argues that cinematic storytelling can’t work in VR: it’s simply a gaming environment with the goggles replacing the POV camera and joystick. He’s correct, and as long as the technology stays that way, VR story will be no different than gaming. But there’s an elephant in the room that Cameron is not considering: the VR wearable is smart. The wearable is much more than just a camera and joystick; it’s also a recording device for what the viewer is doing and experiencing. And this changes everything.

If storytelling is going to work in VR, creatives need to make a radical paradigm shift in how story is approached. VisionaryVR has come up with a partial solution to the problem, using smart zones in the VR world to guide viewing and control the playback of content, but story is still in its old form. There’s not a compelling enough reason to move from the TV to the headset in this case. But they’re on the right track.

Enter artificial intelligence.

USC’s Institute for Creative Technologies (ICT) has produced a prototype of a virtual conversation with Holocaust survivor Pinchas Gutter. To populate the AI, Pinchas was asked two thousand questions about his experiences. His answers were filmed on the ICT LightStage, which also recorded a full 3D video of him. The final product, although not perfect, gives the feeling of an authentic discussion with Pinchas about his experience in the Holocaust. ICT has used a similar “virtual therapist” to treat soldiers with PTSD, and when using the technology alongside real treatment, they’ve achieved an 80% recovery rate, which is incredible. In terms of AI applications, these examples are very compelling use cases.

The near future of VR will include character scripting using this natural language application. Viewers are no longer going to be watching characters; they are going to be interacting with them and talking to them. DreamWorks will script Shrek with five thousand answers about what it’s like when people make fun of him, and when kids use it, parents will be better informed about how to talk to their kids. Perhaps twenty leading minds will contribute to the natural language scripting of a single character about a single topic. That’s an exciting proposition, but how does that help story work in VR?

Gathering speech, behavioral, and emotive analytics from the viewer, and applying AI responses to it, is an approach that turns story on its head. The producer, instead of controlling the story from beginning to end, follows the lead of the viewer. As the viewer reveals an area of interest, the media responds in a way that’s appropriate to that story. This might sound like gaming, but it’s different when dealing with authentic, human experience. Shooting zombies is entertaining on the couch, but in the VR world, the response is to flee. If VR story is going to succeed, it must be built on top of real world, genuine experience. Working out how to do this, I believe, is the paradigm shift of story that VR is calling for.

If this is the future for VR, it will change entirely how media is produced, and a new era of story will develop. I’m proposing the term, “Active Story,” as a name for this approach to VR narratives. With this blog, I’ll be expanding on this topic as VR is produced and tested at CableLabs and as our findings reveal new insight.

Eric Klassen is an Associate Media Engineer in the Client Application Technologies group at CableLabs.