
Moving Beyond Cloud Computing to Edge Computing

In the era of cloud computing, a predecessor of edge computing, we are immersed in social networking sites, online content and other online services that give us access to data from anywhere at any time. However, next-generation applications focused on machine-to-machine interaction, built on concepts such as the internet of things (IoT), machine learning and artificial intelligence (AI), will shift the focus to “edge computing,” which in many ways is the anti-cloud.

Edge computing brings the power of cloud computing closer to the customer premises, at the network edge, so that data can be computed, analyzed and acted on in real time. The goal of moving closer to the network edge, that is, to within miles of the customer premises, is to boost network performance, enhance the reliability of services and reduce the cost of moving data to distant servers for computation, thereby mitigating bandwidth and latency issues.
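To make the distance argument concrete, here is a minimal Python sketch that estimates only the round-trip propagation delay over optical fiber. It assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and uses hypothetical distances; queuing, processing and transmission delays are ignored, so real round-trip times will be higher.

```python
# Assumption: light in optical fiber travels at roughly 2/3 of c,
# about 200,000 km/s, i.e. about 5 microseconds per kilometre.
SPEED_IN_FIBER_KM_PER_S = 200_000.0
KM_PER_MILE = 1.609344


def round_trip_propagation_ms(distance_miles: float) -> float:
    """Return the round-trip propagation delay, in milliseconds, over fiber.

    Only propagation is modelled; queuing, processing and transmission
    delays are ignored, so measured round-trip times will be higher.
    """
    distance_km = distance_miles * KM_PER_MILE
    one_way_s = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    # Hypothetical fiber path lengths: an edge site 10 miles away versus
    # a distant cloud data center 1,000 miles away.
    for label, miles in [("edge site", 10), ("distant cloud", 1000)]:
        print(f"{label:>13}: {round_trip_propagation_ms(miles):.3f} ms")
```

Under these assumptions, every 100 miles of fiber adds roughly 1.6 ms of round-trip delay before any processing happens, which is why distance alone can consume a tight latency budget.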

The Need for Edge Computing

Over the past two decades, the growth of the wireless industry and new technology implementations have driven a rapid migration from on-premises data centers to cloud servers. However, with the increasing number of Industrial Internet of Things (IIoT) applications and devices, performing computation in data centers or on cloud servers may not be efficient. Cloud computing requires significant bandwidth to move data from the customer premises to the cloud and back, and that round trip adds latency. Because many IIoT applications and devices have stringent latency requirements and need real-time computation, computing capabilities need to be at the edge, closer to the source of data generation.

What Is Edge Computing?

The word “edge” refers to the geographic distribution of network resources. Edge computing makes it possible to compute data close to its source instead of sending it over multiple hops to the cloud for processing and waiting for the results to be relayed back. Does this mean we no longer need the cloud? No. It means that instead of data traversing the network to reach the cloud, the cloud moves closer to the source generating the data.

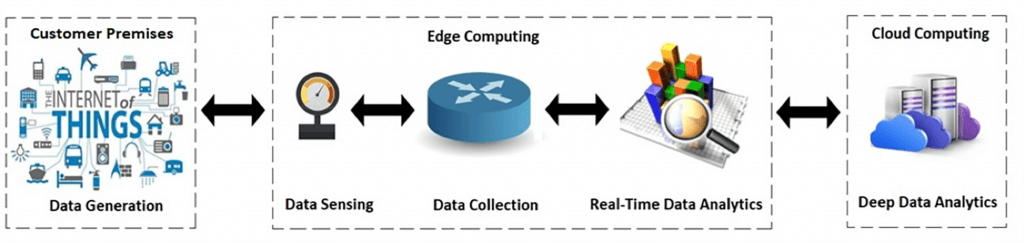

Edge computing refers to sensing, collecting and analyzing data at the source of data generation rather than necessarily in a centralized computing environment such as a data center. It relies on digital devices, often placed at different locations, that transmit data to a central data repository either in real time or later. In this sense, edge computing is the ability to use distributed infrastructure as a shared resource, as the figure above shows.
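As an illustration of the sense, analyze and forward pattern described above, the following minimal Python sketch models a hypothetical edge node. `EdgeNode`, `Reading`, `threshold` and `batch_size` are illustrative names, not part of any product or standard. The node makes a local decision on each reading: urgent values are forwarded to the central repository immediately, while routine values are buffered and uploaded later in batches.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class Reading:
    sensor_id: str
    value: float


@dataclass
class EdgeNode:
    """Hypothetical edge node: analyzes readings locally, forwards selectively.

    Readings above `threshold` are treated as urgent and sent at once;
    all others are buffered and sent once `batch_size` have accumulated.
    """
    threshold: float
    batch_size: int
    send: Callable[[List[Reading]], None]  # uploads to the central repository
    buffer: Deque[Reading] = field(default_factory=deque)

    def ingest(self, reading: Reading) -> None:
        if reading.value > self.threshold:
            # Local decision made at the edge: forward urgent data in real time.
            self.send([reading])
        else:
            self.buffer.append(reading)
            if len(self.buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        # Deferred upload of buffered, non-urgent readings.
        if self.buffer:
            self.send(list(self.buffer))
            self.buffer.clear()


if __name__ == "__main__":
    central_repository: List[List[Reading]] = []
    node = EdgeNode(threshold=90.0, batch_size=3, send=central_repository.append)
    for v in [20.0, 95.0, 30.0, 40.0, 50.0]:
        node.ingest(Reading("temp-1", v))
    node.flush()
    print([[r.value for r in batch] for batch in central_repository])
    # prints [[95.0], [20.0, 30.0, 40.0], [50.0]]
```

The `send` callback stands in for whatever transport a real deployment would use, which keeps the local decision logic separate from the upload mechanism.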

Edge computing is an emerging technology that will play an important role in pushing the frontier of data computation to the logical extremes of a network.

Key Drivers of Edge Computing

- Plummeting cost of computing elements

- Smart and intelligent computing abilities in IIoT devices

- A rise in the number of IIoT devices and ever-growing demand for data

- Technology enhancements with machine learning, artificial intelligence and analytics

Benefits of Edge Computing

Computational speed and real-time delivery are the most important features of edge computing, made possible by processing data at the edge of the network. The benefits of edge computing show up in three areas:

Latency

Moving computation to the edge reduces latency. Without edge computing, when data must be processed on a server far from the customer premises, latency varies with the available bandwidth and the server's location. With edge computing, data does not have to traverse the network to a distant server or cloud for processing, which is ideal for situations where even a few milliseconds of added latency can be untenable. Because computation happens at the network edge, fewer messages travel between the distant server and the edge devices, reducing the delay in processing the data.
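One simple way to see why fewer exchanges with a distant server matter is to model response time as the number of request/response round trips multiplied by the round-trip time, plus local processing time. The minimal Python sketch that follows uses hypothetical round-trip times and a hypothetical 10 ms deadline; it ignores bandwidth limits and queuing.

```python
def response_time_ms(rtt_ms: float, round_trips: int, processing_ms: float) -> float:
    """Total time for a task that needs `round_trips` request/response exchanges."""
    return round_trips * rtt_ms + processing_ms


def meets_deadline(rtt_ms: float, round_trips: int,
                   processing_ms: float, deadline_ms: float) -> bool:
    """True if the modelled response time fits within the deadline."""
    return response_time_ms(rtt_ms, round_trips, processing_ms) <= deadline_ms


if __name__ == "__main__":
    # Hypothetical control loop: 3 exchanges, 2 ms of computation, 10 ms deadline.
    deadline = 10.0
    for label, rtt in [("edge", 1.0), ("distant server", 25.0)]:
        total = response_time_ms(rtt, round_trips=3, processing_ms=2.0)
        ok = meets_deadline(rtt, 3, 2.0, deadline)
        print(f"{label}: {total:.1f} ms, meets {deadline} ms deadline: {ok}")
```

With these figures the edge path takes 5.0 ms and meets the deadline, while the distant server path takes 77.0 ms and does not; the round-trip term dominates as soon as the server is far away.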

Bandwidth

Pushing processing to edge devices, instead of streaming data to the cloud for processing, reduces the need for high bandwidth while also shortening response times. Bandwidth is a key and scarce resource, so reducing the network load that drives high bandwidth requirements helps make better use of spectrum.
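The bandwidth saving comes from sending results rather than raw data. The following minimal Python sketch assumes a hypothetical sensor that produces 1,000 samples per one-second window and compares the size of an uplink payload carrying every raw sample (as 8-byte floats) with one carrying only a locally computed minimum, maximum and mean. The summary format is an assumption for illustration; whether raw samples can be discarded depends on the application.

```python
import struct
from statistics import mean
from typing import List


def raw_payload(samples: List[float]) -> bytes:
    """Pack every sample as a little-endian 64-bit float (processing in the cloud)."""
    return struct.pack(f"<{len(samples)}d", *samples)


def summary_payload(samples: List[float]) -> bytes:
    """Pack only min, max and mean, computed locally at the edge."""
    return struct.pack("<3d", min(samples), max(samples), mean(samples))


if __name__ == "__main__":
    # Hypothetical sensor window: 1,000 samples.
    window = [float(i % 50) for i in range(1000)]
    raw = raw_payload(window)
    summary = summary_payload(window)
    print(f"raw: {len(raw)} bytes, summary: {len(summary)} bytes")
    print(f"uplink reduction: {len(raw) / len(summary):.0f}x")
```

Under these assumptions the raw payload is 8,000 bytes and the summary is 24 bytes, roughly a 333x reduction in uplink traffic for that window.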

Security

From one perspective, edge computing provides better security because data does not traverse the network; it stays close to the edge devices where it is generated. The less data that is processed on distant servers or in cloud environments, the smaller the exposure. From another perspective, edge computing is less secure because the edge devices themselves can be vulnerable, which puts the onus on operators to secure those devices.

What Is Multi-Access Edge Computing (MEC)?

MEC brings cloud computing capabilities to the edge of the cellular network, enabling ultra-low latency. It lets applications run and data traffic be processed closer to the cellular customer, reducing latency and network congestion. Processing data closer to the edge of the cellular network enables real-time analysis for time-sensitive responses, which are essential in many industry sectors, including health care, telecommunications and finance. Implementing distributed architectures and moving user plane traffic closer to the edge to support MEC use cases is an integral part of the 5G evolution.
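To illustrate the kind of placement decision MEC makes possible, here is a minimal Python sketch that chooses where to run a workload given its latency budget. The site names, round-trip times, core counts and selection policy are all hypothetical; real MEC platforms expose their own orchestration interfaces, which are not modeled here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    rtt_ms: float        # hypothetical round-trip time from the user equipment
    free_cpu_cores: int  # hypothetical spare compute capacity at the site


def choose_site(sites: List[Site], budget_ms: float, cores_needed: int) -> Optional[Site]:
    """Pick a site that meets the latency budget and has enough spare cores."""
    candidates = [
        s for s in sites
        if s.rtt_ms <= budget_ms and s.free_cpu_cores >= cores_needed
    ]
    if not candidates:
        return None
    # Policy assumption: prefer the most centralized (highest-RTT) site that
    # still meets the budget, keeping scarce edge capacity free.
    return max(candidates, key=lambda s: s.rtt_ms)


if __name__ == "__main__":
    sites = [
        Site("cell-site MEC host", rtt_ms=2.0, free_cpu_cores=4),
        Site("regional MEC host", rtt_ms=8.0, free_cpu_cores=32),
        Site("central cloud", rtt_ms=40.0, free_cpu_cores=1000),
    ]
    for app, budget in [("video analytics", 10.0), ("email sync", 200.0), ("AR rendering", 3.0)]:
        site = choose_site(sites, budget_ms=budget, cores_needed=2)
        print(f"{app}: {site.name if site else 'no site meets the budget'}")
```

The policy assumes edge capacity is scarcer than cloud capacity and should be reserved for the most time-sensitive applications. With these figures, video analytics lands on the regional MEC host, email sync stays in the central cloud and AR rendering needs the cell-site MEC host.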

Edge Computing Standardization

Various groups in the open source and standardization ecosystem are actively looking into ways to ensure interoperability and the smooth integration of edge computing elements. These groups include:

- The Edge Computing Group

- CableLabs SNAPS programs, including SNAPS-Kubernetes and SNAPS-OpenStack

- OpenStack’s StarlingX

- Linux Foundation Networking’s OPNFV, ONAP

- Cloud Native Computing Foundation’s Kubernetes

- Linux Foundation’s Edge Organization

How Can Edge Computing Benefit Operators?

- Dynamic, real-time and fast data computing closer to edge devices

- Cost reduction with fewer cloud computational servers

- Spectral efficiency with lower latency

- Faster traffic delivery with increased quality of experience (QoE)

Conclusion

Edge computing has been adopted rapidly alongside the growth in IIoT applications and devices, thanks to its benefits in latency, bandwidth and security. Although it is ideal for IIoT, edge computing can help any application that benefits from lower latency and efficient network utilization, because it minimizes the load of carrying data back and forth across the network.

Evolving wireless technology has enabled organizations to perform faster and more accurate data computing at the edge. Edge computing benefits wireless operators by enabling faster decision making and lowering costs, because data no longer needs to traverse the cloud network. It also lets wireless operators place computing power and storage capabilities directly at the edge of the network. As 5G evolves and we move toward a connected ecosystem, wireless operators face the challenge of continuing to operate 4G networks while adopting 5G enhancements such as edge computing, network functions virtualization (NFV) and software-defined networking (SDN). The success of edge computing cannot yet be predicted (the technology is still in its infancy), but its benefits might give wireless operators a critical competitive advantage in the future.

How Can CableLabs Help?

CableLabs is a leading contributor to the European Telecommunications Standards Institute (ETSI) NFV Industry Specification Group (NFV ISG). Our SNAPS™ program is part of the Open Platform for NFV (OPNFV). We wrote an OpenStack API abstraction library, SNAPS-OO, and contributed it to the OPNFV project at the Linux Foundation; it leverages object-oriented software development practices to automate and validate applications on OpenStack. We also added Kubernetes support with SNAPS-Kubernetes, introducing a Kubernetes stack to provide CableLabs members with open source software platforms. SNAPS-Kubernetes is a certified CNCF Kubernetes installer that targets lightweight edge platforms and scales well, efficiently managing failovers and software updates. It is optimized and tailored to address the needs of the cable industry and of general edge platforms. Edge computing on Kubernetes is emerging as a powerful way to share, distribute and manage data on a massive scale in ways that cloud or on-premises deployments cannot necessarily provide.