Can a Wi-Fi radio detect Duty Cycled LTE?

For my third blog I thought I’d give you a preview of a side project I’ve been working on. The original question was pretty simple: Can I use a Wi-Fi radio to identify the presence of LTE?

Before we go into what I’m finding, let’s recap: We know LTE is coming into the unlicensed spectrum in one flavor or another. We know it is (at least initially) going to be a tool that only mobile network operators with licensed spectrum can use, as both LAA and LTE-U will be “license assisted” – locked to licensed spectrum. We know there are various views about how well it will coexist with Wi-Fi networks. In my last two blog posts (found here and here) I shared my views on the topic, while some quick Googling will find you a different view supported by Qualcomm, Ericsson, and even the 3GPP RAN Chairman. Recently the coexistence controversy even piqued the interest of the FCC, which opened a Public Notice on the topic that spawned a plethora of good bedtime reading.

One surefire way to settle the debate is to measure the effect of LTE on Wi-Fi, in real deployments, once they occur. However, to do that, you must have a tool that can measure the impact on Wi-Fi. You’d also need a baseline: a first measurement of Wi-Fi-only performance in the wild to use as a reference.

So let’s begin by considering this basic question: using only a Wi-Fi radio, what would you measure when looking for LTE? What Key Performance Indicators (KPIs) would you expect to show you that LTE was having an impact? After all, to a Wi-Fi radio LTE just looks like loud informationless noise, so you can’t just ask the radio “Do you see LTE?” and expect an answer. (Though that would be super handy.)

To answer these questions, I teamed up with the Wi-Fi performance and KPI experts at 7Signal to see if we could use their Eye product to detect, and better yet, quantify the impact of a co-channel LTE signal on Wi-Fi performance.

Our first tests were in the CableLabs anechoic chamber. This chamber is a quiet RF environment used for very controlled and precise wireless testing. The chamber afforded us a controlled environment to make sure we’d be able to see any “signal” or difference in the KPIs produced by the 7Signal system with and without LTE. After we confirmed that we could see the impact of LTE on a number of KPIs, we moved to a less controlled, but more real world environment.

Over the past week I’ve unleashed duty cycled LTE at 5 GHz (a la LTE-U) on the CableLabs office Wi-Fi network. To ensure the user/traffic load and KPI sample noise were as real world as possible… I didn’t warn anybody. (Sorry guys! That slow/weird Wi-Fi this week was my fault!)

In the area tested, our office has about 20 cubes and a break area with the majority of users sharing the nearest AP. On average throughout the testing we saw ~25 clients associated to the AP.

We placed the LTE signal source ~3 m from the AP. We chose two duty cycles, 50% of 80 ms and 50% of 200 ms, and always shared a channel with a single Wi-Fi access point within energy detection range. We also tested two power levels, -40 dBm and -65 dBm at the AP, so we could test with LTE power above and just below the Wi-Fi LBT energy detection threshold of -62 dBm.
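To make the test matrix concrete, here is a minimal Python sketch of the four LTE configurations described above. It rests on assumptions that are mine, not part of the test setup: I read "50% of 80 ms" as an 80 ms on/off period with LTE transmitting for half of it, and I model energy detection as a simple "at or above -62 dBm" comparison. The names `LteTestCase` and `build_test_matrix` are hypothetical.

```python
from dataclasses import dataclass

# Wi-Fi LBT energy-detection threshold quoted in the post, in dBm.
ED_THRESHOLD_DBM = -62.0


@dataclass(frozen=True)
class LteTestCase:
    """One duty-cycled LTE configuration (hypothetical helper type)."""
    period_ms: float        # assumed: length of one full on+off cycle
    duty_cycle: float       # fraction of the period LTE is transmitting
    power_at_ap_dbm: float  # LTE power as received at the AP

    @property
    def on_time_ms(self) -> float:
        return self.period_ms * self.duty_cycle

    @property
    def off_time_ms(self) -> float:
        return self.period_ms - self.on_time_ms

    @property
    def triggers_energy_detect(self) -> bool:
        # Simplified model: the AP defers only when the LTE energy is
        # at or above the threshold. Below it, Wi-Fi keeps transmitting
        # on top of the LTE signal.
        return self.power_at_ap_dbm >= ED_THRESHOLD_DBM


def build_test_matrix():
    """Two periods x two power levels, all at 50% duty cycle."""
    return [
        LteTestCase(period_ms, 0.5, power_dbm)
        for period_ms in (80.0, 200.0)
        for power_dbm in (-40.0, -65.0)
    ]


for case in build_test_matrix():
    print(
        f"period={case.period_ms:.0f} ms, "
        f"on={case.on_time_ms:.0f} ms, off={case.off_time_ms:.0f} ms, "
        f"power={case.power_at_ap_dbm:.0f} dBm, "
        f"AP defers={case.triggers_energy_detect}"
    )
```

The point of the -65 dBm case is visible in the last field: the LTE signal is only 3 dB under the threshold, so in this simplified model the AP never backs off even though the interference is still strong.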

We will have more analysis and results later, but I just couldn’t help but share some preliminary findings. The impact to many KPIs is obvious and 7Signal does a great job of clearly displaying the data. Below are a couple of screen grabs from our 7Signal GUI.

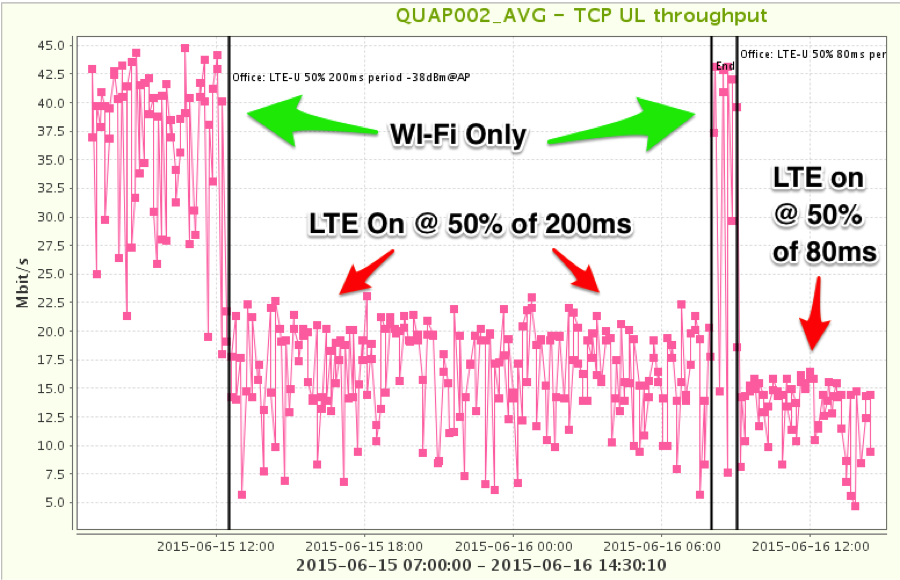

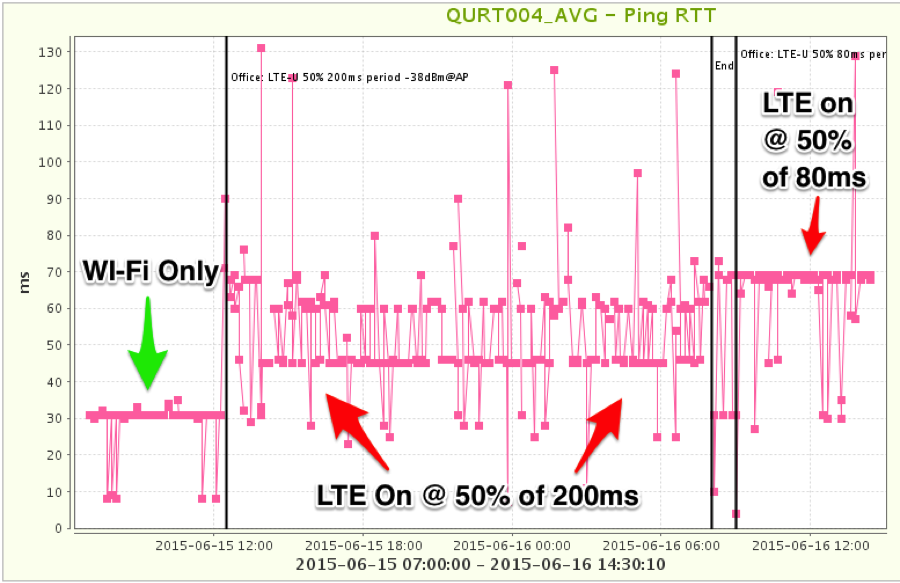

The first two plots show the tried and true throughput and latency. These are the most obvious and likely KPIs to show the impact and sure enough the impact is clear.

Figure 1 - Wi-Fi Throughput Impact from Duty Cycle LTE

Figure 2 - Wi-Fi Latency Impact from Duty Cycle LTE

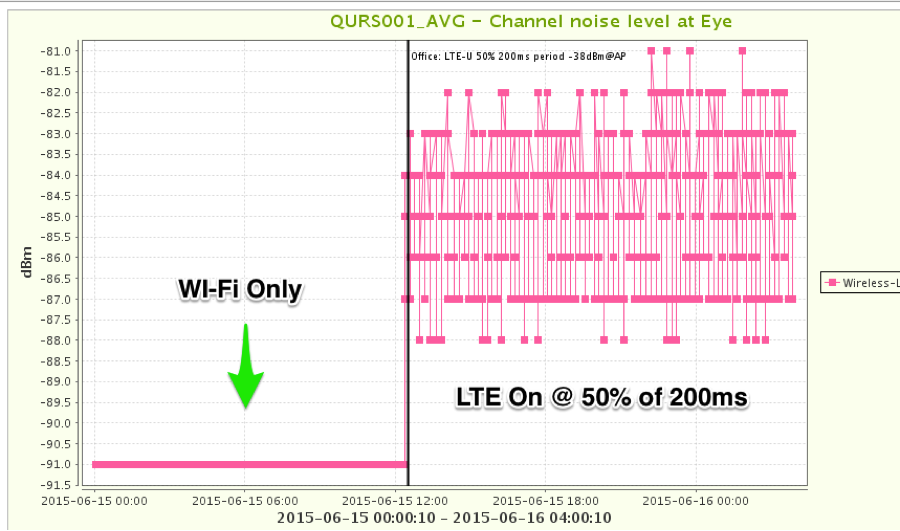

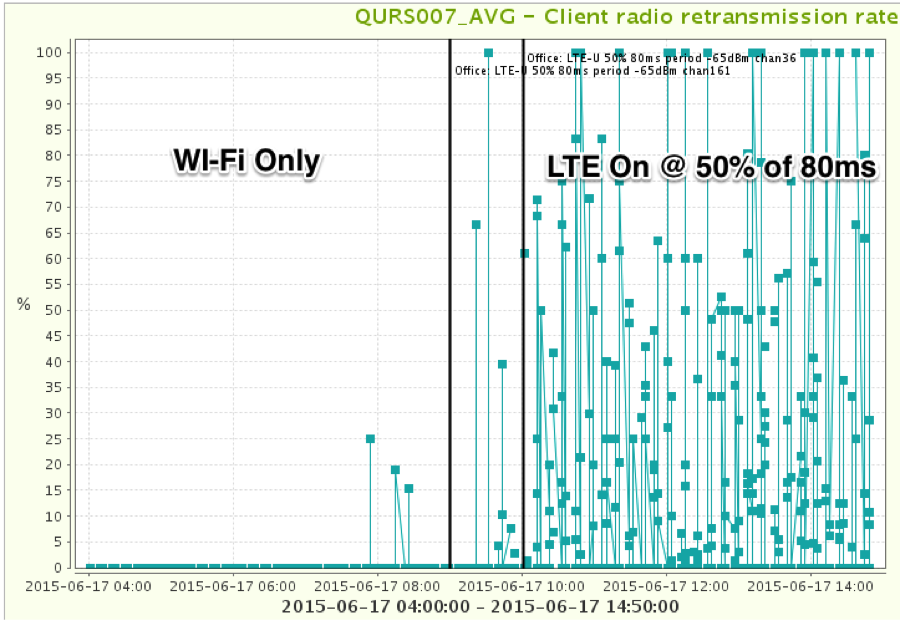

We were able to discern a clear LTE “signal” from many other KPIs. Some notable examples were channel noise floor and the rate of client retransmissions. Channel noise floor is pretty self-explanatory. Retransmissions occur when either the receiver was unable to successfully receive a frame or was blocked from sending the ACK frame back to the transmitter. The ACK frame, or acknowledgement frame, is used to tell the sender the receiver got the frame successfully. The retransmission plot shows the ratio of retransmitted frames over totaled captured frames in a 10 second sample.

As a side note: Our findings point to a real problem when the LTE power level at an AP is just below the Wi-Fi energy detection threshold. These findings are similar to those found in the recent Google authored white paper attached as Appendix A to their FCC filing.

Figure 3 - Channel Noise Floor Impact from Duty Cycle LTE

Figure 4 - Wi-Fi Client Retransmission Rate Impact of Duty Cycle LTE
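For readers who want to reproduce the retransmission KPI from their own captures, here is a rough Python sketch of the ratio described above: retransmitted frames divided by total captured frames, computed per 10-second sample. I don't know how 7Signal computes it internally, so treat this as an illustration only. The `Frame` type and `retransmission_ratio` function are hypothetical, and I'm assuming a frame counts as a retransmission when the Retry bit in its 802.11 Frame Control field is set.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Frame(NamedTuple):
    """One captured 802.11 frame (hypothetical, minimal representation)."""
    timestamp_s: float  # capture time in seconds
    is_retry: bool      # assumed: Retry bit of the Frame Control field


def retransmission_ratio(
    frames: Iterable[Frame], window_s: float = 10.0
) -> Dict[int, float]:
    """Return {window index: retransmitted frames / total frames}.

    Window k covers capture times [k * window_s, (k + 1) * window_s).
    Windows with no captured frames are simply absent from the result.
    """
    totals = defaultdict(int)
    retries = defaultdict(int)
    for frame in frames:
        index = int(frame.timestamp_s // window_s)
        totals[index] += 1
        if frame.is_retry:
            retries[index] += 1
    return {index: retries[index] / totals[index] for index in sorted(totals)}


# Made-up capture: four frames in the first 10 s, one in the next 10 s.
capture = [
    Frame(0.5, False),
    Frame(3.0, True),
    Frame(9.9, False),
    Frame(9.95, True),
    Frame(12.0, False),
]
print(retransmission_ratio(capture))
```

In the made-up capture, two of the four frames in the first window are retries and the single frame in the second window is not, so the ratios are 0.5 and 0.0. With duty cycled LTE on the channel, you would expect this ratio to climb during the LTE "on" periods.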

Numerous other KPIs showed the impact but require more post processing of the data and/or explanation so I’ll save that for later.

In addition to the above plots, I have a couple of anecdotal results to share. First, our Wi-Fi controller was continuously changing the channel on us, making testing a bit tricky. I guess it didn’t like the LTE much. Also, we had a handful of CableLabs employees figure out what was happening and say “Oh! That’s why my Wi-Fi has been acting up!” followed by various defamatory comments about me and my methods.

Hopefully all LTE flavors coming to the unlicensed bands will get to a point where coexistence is assured and such measures won’t be necessary. If not — and we don’t appear to be there yet — it is looking pretty clear that we can detect and measure the impact of LTE on Wi-Fi in the wild if need be. But again, with continued efforts by the Wi-Fi community to help develop fair sharing technologies for LTE to use, it won’t come to that.